How Wall Street views "Stargate": NVIDIA and AI infrastructure are the winners, and the "2025 peak" narrative can be put to rest

Market feedback on "Stargate" shows that NVIDIA and AI infrastructure are seen as the winners, and concerns about a 2025 capex peak have eased. ORCL's outlook draws more skepticism, particularly over profit margins, although the revenue increment Stargate brings is still viewed positively. The market's attention to DeepSeek suggests that model commercialization is accelerating.

After a day of digesting the news, feedback from the market and institutions on Stargate is gradually coming in; here is a brief summary.

NVDA and AI infrastructure are the consensus winners. Earlier debates over "scaling laws", a "capex peak", and "share shift to ASICs" can be shelved for now: the incremental $100 billion, set against the previous consensus of roughly $300 billion in 2025 capex, provides the short-term visibility and certainty everyone wanted. Put simply, a new large hyperscaler has emerged overnight, and the 2025-peak narrative can be retired ("25 peak concerns off the table"). TSMC, AVGO, MRVL, ARM, ANET, DELL, HPE, ALAB, CRDO, COHR, CIEN, MU, HYNIX, VRT, VST, PSTG, LITE, CLS, and APH are all on the beneficiary list.
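A rough back-of-envelope check on the size of the increment. This simply divides the two headline figures and assumes the full $100 billion counts against the same 2025 consensus period, which the text does not specify; if the spending is spread over several years, the single-year effect would be smaller.

$$
\frac{\text{\$100B (Stargate increment)}}{\text{\$300B (prior 2025 capex consensus)}} \approx 0.33 \;\Rightarrow\; \text{roughly one-third on top of consensus}
$$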

Sentiment toward ORCL has turned somewhat "skeptical". Last quarter there were already concerns about the OCI backlog, and management mentioned declining operating margins during the bus tour. Stargate is certainly a shot in the arm that brings a large revenue increment, but the profit it generates may be less compelling. Microsoft stepping back to a secondary role adds to the margin concerns (if the deal were that lucrative, Microsoft would have kept it for itself), and low margins plus costs incurred ahead of revenue are further reasons for some bears' doubts. There has also been talk of ORCL acquiring TikTok; if ORCL commits to capex on this scale, it may not have enough cash flow left for an additional deal like that.

180K: Interestingly, many companies mentioned DeepSeek, using it as evidence that model commercialization is moving faster than expected (some argue that China's DeepSeek LLM was essentially trained on OpenAI's models, which shows how quickly LLM training is becoming commoditized).

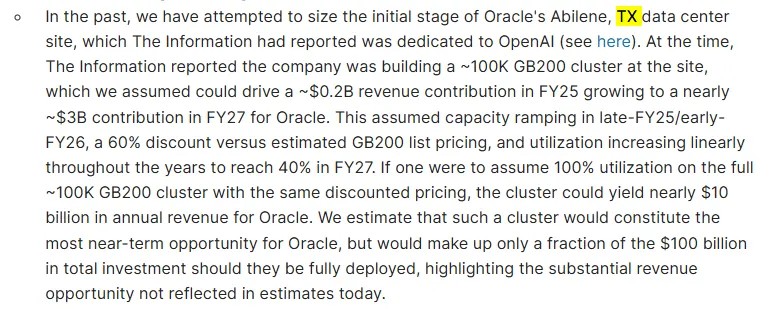

MS (Morgan Stanley) has run some numbers on the Texas cluster, estimating that 100K GB200s could generate $10 billion in revenue; see the accompanying image for details.
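Dividing the MS headline figures gives the implied revenue per GPU. The time period the $10 billion covers is not stated in the text, so treat this only as a per-unit ratio, not an annual figure.

$$
\frac{\text{\$10B}}{100{,}000\ \text{GB200}} = \frac{10 \times 10^{9}}{10^{5}} = \text{\$100{,}000 per GB200}
$$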

Feedback on MSFT is also quite "mixed". On the positive side, Microsoft does not need to sink $100 billion into serving OpenAI as a "training customer", and it still has API access plus OpenAI's inference revenue (which continues to drive Azure monetization). On the negative side, with OpenAI and Microsoft now at more of a distance, Microsoft no longer has a leading large model of its own (aside from the low-profile Phi models).

Ultimately, Azure may follow AWS's path and become a host for a range of leading models. This brings us back to the earlier discussion of model "commoditization" (see my December 9th post on my public account): the major cloud providers may become more "integrated", offering a variety of models that users can call freely (180K: for example, if your software needs a cost-saving module, you can call DeepSeek, and for other functional modules you can call other LLMs). The open question for GOOGL, META, and xAI is how they will respond to OAI's $100B challenge, and how much they will need to raise capex.

Although Microsoft has lost OAI's training revenue, its current arrangement with OAI still takes the form of credit drawdowns (with roughly $4B of the limit remaining).

UBS views Microsoft's step back to a secondary role as "self-preservation" from an investment-versus-return standpoint. Earlier media reports indicated that OAI aims to grow revenue from $3.7B in 2024 to $100B in 2026 (a roughly 30-fold "pie"). Even though OAI's training demand is unlikely to grow 30-fold over the next few years, the GPU spending needed to match that ambition would still be enormous. Shifting spending toward the inference layer (continuing to serve OAI as well as other enterprise customers) may be the more practical approach for Microsoft.
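A quick check of the reported revenue target, using only the two figures above: the multiple works out to about 27x (rounded to "30-fold" in the text), and spreading it evenly over the two years from 2024 to 2026 implies growth of roughly 5.2x per year. This is plain arithmetic on the media-reported numbers, not a forecast.

$$
\frac{\text{\$100B}}{\text{\$3.7B}} \approx 27\times, \qquad
\text{CAGR}_{2024\to2026} = \left(\frac{100}{3.7}\right)^{1/2} - 1 \approx 5.2 - 1 \approx 420\%
$$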

Much of the buy-side feedback also centers on whether this $100B will go to NVIDIA. If Stargate is executed right away, NVDA is practically the only option: although OAI works with AVGO on ASICs, production of those ASICs is at least 12-18 months away, ORCL has no ASIC news so far, and SoftBank's efforts are further behind still (though SoftBank's ARM cannot be ignored).

Regarding the DeepSeek / model commoditization point mentioned earlier, let me elaborate a bit more.

Here are some viewpoints from Ben, which are well written.

Source: 180K, Original Title: "Foreign Capital Trading Desk | Some Feedback from Institutions on Stargate (January 23)"

Risk Warning and Disclaimer

The market has risks, and investment requires caution. This article does not constitute personal investment advice and does not take into account the specific investment objectives, financial situation, or needs of individual users. Users should consider whether any opinions, views, or conclusions in this article are suitable for their specific circumstances. Investment based on this is at your own risk.