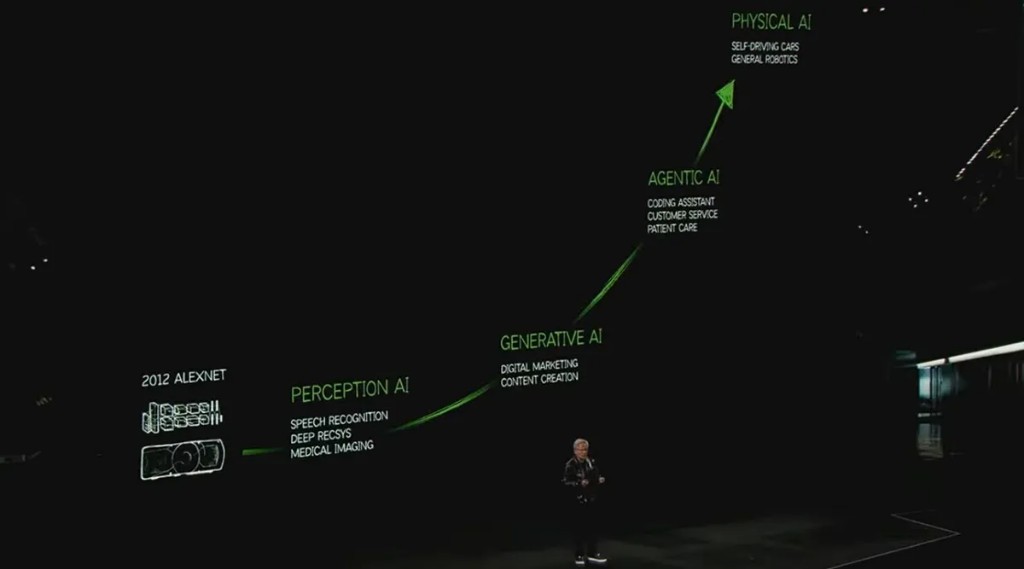

NVIDIA opens the "Physical AI" era, directly targeting the robot "ChatGPT moment"

Physical AI empowers robots with stronger environmental perception, understanding, and interaction capabilities. Jensen Huang stated at the CES conference that physical AI will fundamentally change the $50 trillion manufacturing and logistics industries, saying, "The 'ChatGPT moment' in the field of robotics is coming soon."

Author: Zhang Yaqi

Source: Hard AI

Are robots about to reach their "ChatGPT moment"? NVIDIA launched the Cosmos world foundation model platform at CES 2025, which may trigger a "physical AI" revolution.

This platform is considered a key step in accelerating the development of "physical AI," aiming to elevate the fields of autonomous vehicles and robotics to a higher level.

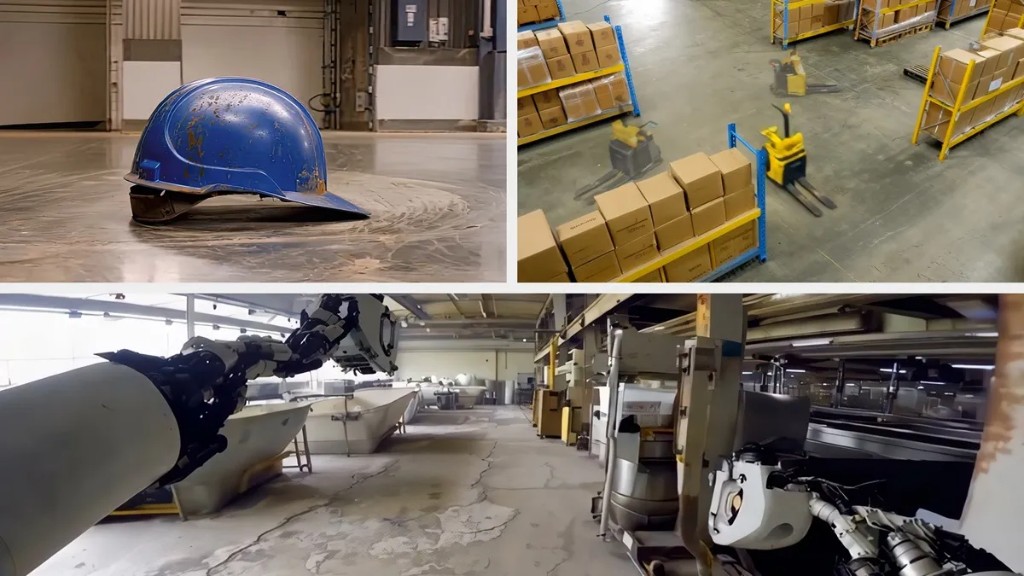

Physical AI endows robots with stronger environmental perception, understanding, and interaction capabilities. Advances in physical AI will greatly benefit industries that depend on an accurate grasp of physical scenes, such as autonomous driving and robotics. Jensen Huang stated at the CES conference that physical AI will fundamentally change the $50 trillion manufacturing and logistics industries, with everything that moves, from cars and trucks to factories and warehouses, being automated and driven by AI.

According to NVIDIA's official website, the physical AI system includes key components such as Omniverse, Cosmos, and Isaac Sim. The Cosmos platform utilizes over 20 million hours of video training data, aiming to "teach AI to understand the physical world."

What is Physical AI?

Physical AI, also known as generative physical AI, is a technology that enables autonomous machines (such as robots and self-driving cars) to perceive, understand, and execute complex operations in the real physical world.

It extends traditional generative AI to understand spatial relationships and physical behaviors in the 3D world. In simple terms, the content generated by artificial intelligence must conform to the laws of physics.

For example, in text-to-image or text-to-video models, if physical considerations are ignored, the generated content will lack details such as gravity and optics. By incorporating physical knowledge, the generated content will be more realistic.
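To make "conforming to the laws of physics" concrete, here is a minimal sketch of one kind of plausibility check. It is purely illustrative and is not how Cosmos or any NVIDIA model works: the function names, the tolerance, and the sample trajectories are all hypothetical. It assumes we already have the per-frame height of a falling object in a generated clip and asks whether the implied acceleration is close to gravity.

```python
# Illustrative only: a toy check of whether a "generated" falling-object
# trajectory is consistent with gravity. All names and values are hypothetical.

G = 9.81  # gravitational acceleration in m/s^2


def estimated_acceleration(heights_m, fps):
    """Average vertical acceleration from second finite differences."""
    dt = 1.0 / fps
    second_diffs = [
        (heights_m[i + 1] - 2 * heights_m[i] + heights_m[i - 1]) / dt**2
        for i in range(1, len(heights_m) - 1)
    ]
    return sum(second_diffs) / len(second_diffs)


def is_physically_plausible(heights_m, fps, tolerance=0.5):
    """True if the object accelerates downward at roughly G."""
    return abs(estimated_acceleration(heights_m, fps) + G) <= tolerance


fps = 30
# A trajectory that obeys free fall: h(t) = 10 - 0.5 * G * t^2
free_fall = [10.0 - 0.5 * G * (k / fps) ** 2 for k in range(15)]
# A trajectory that ignores gravity: the object drifts down at constant speed
constant_speed = [10.0 - 2.0 * (k / fps) for k in range(15)]

print(is_physically_plausible(free_fall, fps))
print(is_physically_plausible(constant_speed, fps))
```

The first trajectory passes the check and the second does not, which is the sense in which content that ignores physics "lacks gravity."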

Jensen Huang emphasized earlier this year that "the new wave of AI is physical AI."

Physical AI will give robots stronger environmental perception, understanding, and interaction capabilities. Traditional robots can only perform tasks according to preset programs, while robots equipped with physical AI can better understand their surroundings and respond according to physical laws. They can better recognize objects, predict motion trajectories, and navigate and operate in complex environments.

"Physical AI will fundamentally change the $50 trillion manufacturing and logistics industries," Jensen Huang stated at this year's CES International Consumer Electronics Show:

"From cars and trucks to factories and warehouses, all moving things will be automated and driven by AI. NVIDIA's Omniverse digital twin operating system and Cosmos physical AI are the cornerstones of driving the digitalization of global physical industries."

NVIDIA has built a complete physical AI ecosystem. According to NVIDIA's official website, the physical AI system includes key components such as Omniverse, Cosmos, and Isaac Sim.

Omniverse: Accelerating 3D Content Creation and Physical Simulation

Omniverse is an open platform for building and connecting 3D worlds. It provides a range of tools, APIs, and SDKs that enable developers to easily create high-fidelity, physics-based virtual environments for training and testing AI models. At the core of Omniverse is Universal Scene Description (OpenUSD), which allows data interoperability between different 3D tools. Omniverse has also been expanded in this release, for example through NVIDIA Edify SimReady generative AI models, which can automatically add physical or material properties to existing 3D assets, greatly accelerating the creation and preparation of 3D content.

Shenwan Hongyuan stated that NVIDIA's vision for the future relies on the development of robotics technology, which depends on three core computers.

One is used to train the AI, one is used to test the AI in a physical simulation environment, and one is installed inside robots or smart vehicles to run the physical AI algorithms.

There are currently two main application scenarios. The first is verifying the reliability of program logic in a simulation environment; the second is obtaining data that is difficult to acquire in the real world in order to keep training AI models. Many large companies are already adopting this approach. On the software side, Ansys, a leading company in the simulation field, makes its simulation products accessible through NVIDIA's Omniverse. Its physics solvers for camera, LiDAR, and radar sensors enhance NVIDIA DRIVE's high-fidelity, scalable 3D environment, which is crucial for the development of autonomous driving systems.

In this way, data collected during driving can be fed back in real time for decision-making and also used to generate more similar data for simulating additional scenarios, which speeds up training improvements and eases the data-acquisition bottleneck.

Shenwan Hongyuan believes that NVIDIA's significant investment in Omniverse indicates that its computing power will primarily focus on large model AI generation, robotics, and intelligent driving in the future.

Cosmos WFMs: A Key Step for AI to Understand the Physical World

The development of physical AI is extremely complex, requiring massive amounts of real-world data and long testing periods, with high development costs.

NVIDIA's Cosmos platform is designed to address this pain point by providing physical simulation data generation capabilities through its generative world foundation models. Cosmos WFMs enable developers to quickly generate high-fidelity data based on real physical laws, reducing the reliance on expensive real-world data.

Jensen Huang pointed out in his keynote speech that the Cosmos platform utilizes over 20 million hours of video training data, aiming to "teach AI to understand the physical world."

These models generate diverse physical environment scenarios, such as driving in snow or crowded warehouses, by combining text, images, videos, and robot sensor data, thereby providing critical support for autonomous driving and robotics development. Cosmos uses NVIDIA's NeMo Curator framework and CUDA-accelerated data processing pipelines to process 20 million hours of video in just 14 days, a task that would take 3.4 years in a traditional CPU environment.
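As a quick back-of-the-envelope check on those figures, the short calculation below works out the speedup they imply. It assumes a 365-day year and that both durations refer to the same 20-million-hour workload. The article does not say how much hardware was used on either side, so this is a ratio of wall-clock time only, not a per-device comparison.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the article.
# Assumption: a 365-day year; hardware counts are not given in the source.

HOURS_OF_VIDEO = 20_000_000  # video hours processed
GPU_DAYS = 14                # accelerated pipeline, wall-clock days
CPU_YEARS = 3.4              # CPU-only estimate, wall-clock years
DAYS_PER_YEAR = 365


def implied_speedup(cpu_years, gpu_days, days_per_year=DAYS_PER_YEAR):
    """Ratio of CPU wall-clock time to accelerated wall-clock time."""
    return cpu_years * days_per_year / gpu_days


def video_hours_per_wall_clock_hour(total_video_hours, wall_clock_days):
    """How many hours of video are processed per hour of elapsed time."""
    return total_video_hours / (wall_clock_days * 24)


speedup = implied_speedup(CPU_YEARS, GPU_DAYS)
throughput = video_hours_per_wall_clock_hour(HOURS_OF_VIDEO, GPU_DAYS)

print(f"Implied speedup: about {speedup:.0f}x")
print(f"Throughput: about {throughput:,.0f} video hours per wall-clock hour")
```

Under these assumptions the quoted numbers correspond to a speedup of roughly 89 times, or close to 60,000 hours of video processed per hour of elapsed time.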

Cosmos Tokenizer, as a state-of-the-art visual tokenizer, can convert images and videos into efficient visual tokens, achieving a 12-fold increase in processing speed and an 8-fold improvement in compression efficiency.

Jensen Huang stated: “The 'ChatGPT moment' in robotics is coming.” Just as large language models (LLMs) have driven advancements in natural language processing, Cosmos WFMs are considered foundational tools for the development of robotics and autonomous driving:

“We created Cosmos to democratize physical AI, allowing every developer access to general robotic technology.”

It can be said that the release of Cosmos completes an important part of NVIDIA's physical AI system for 'understanding the world.'

Industry Giants Embrace Cosmos

Several leading companies have become the first users of Cosmos, including 1X, Agile Robots, Waabi, Uber, and others. These companies are leveraging the Cosmos platform to drive advancements in robotics and autonomous driving technology.

Taking Uber as an example, by integrating its rich driving data with the capabilities of the Cosmos platform and NVIDIA DGX Cloud, Uber is collaborating with NVIDIA to accelerate the development of safe and scalable autonomous driving solutions.

Uber CEO Dara Khosrowshahi stated:

“Generative AI will power the future of mobility, which requires rich data and very powerful computing capabilities. By partnering with NVIDIA, we believe we can help accelerate the development of safe and scalable autonomous driving solutions for the industry.”

Agility CTO Pras Velagapudi said in a statement:

“Data scarcity and variability are key challenges for successful learning in robotic environments. Cosmos's text, image, and video-to-world capabilities enable us to generate and enhance realistic scenes for various tasks, which we can use to train models without incurring the high costs of capturing real-world data.”

Currently, Cosmos WFMs are available for download on the NVIDIA NGC and Hugging Face platforms, allowing developers to use these models and their fine-tuning frameworks. Additionally, Cosmos will enable rapid deployment through NVIDIA's DGX Cloud and provide comprehensive support for enterprise users.