AMD launches the MI300X accelerator, claiming up to 60% higher performance than Nvidia's H100, and sharply raises its AI chip market forecast.

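AMD has unveiled its new MI300 series of AI chips, including the MI300A and MI300X, aimed at challenging Nvidia's position in the AI accelerator market. AMD says the MI300X delivers up to 60% higher performance than Nvidia's H100, with 2.4 times the memory capacity and 1.6 times the memory bandwidth. AMD has also sharply raised its forecast for the 2027 AI chip market, from $150 billion to more than $400 billion. The launch is one of the most important in AMD's history.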

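On Wednesday, AMD unveiled its highly anticipated MI300 series of AI chips, including the MI300A and MI300X, targeting a market dominated by Nvidia. Chips of this kind are better than conventional computer processors at handling the large datasets involved in training AI models.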

The launch is one of the most important in AMD's 50-year history and could challenge Nvidia's position in the red-hot AI accelerator market.

AMD's new chips contain more than 150 billion transistors. The MI300X accelerator supports up to 192GB of HBM3 memory.

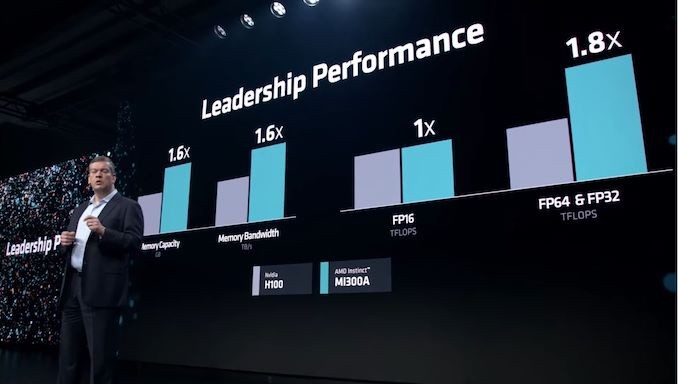

The MI300X offers 2.4 times the memory capacity and 1.6 times the memory bandwidth of Nvidia's H100, which further boosts performance.
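As a rough sanity check of these ratios, the sketch below divides the MI300X figures by the H100's. Only the 192GB MI300X capacity comes from this article; the other numbers are assumptions based on commonly cited specs (80GB of HBM3 and about 3.35 TB/s for the H100 SXM, about 5.3 TB/s for the MI300X).

```python
# Only MI300X_MEMORY_GB is from the article. The rest are assumed spec
# figures: H100 SXM with 80 GB HBM3 at ~3.35 TB/s, MI300X at ~5.3 TB/s.
MI300X_MEMORY_GB = 192
MI300X_BANDWIDTH_TBPS = 5.3
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def ratio(a: float, b: float, digits: int = 1) -> float:
    """Return a / b rounded to the given number of decimal places."""
    return round(a / b, digits)


memory_ratio = ratio(MI300X_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(MI300X_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"Memory capacity: {memory_ratio}x")
print(f"Memory bandwidth: {bandwidth_ratio}x")
```

Under these assumed specs, the bandwidth ratio is about 1.58 before rounding, which matches the 1.6x figure AMD quotes.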

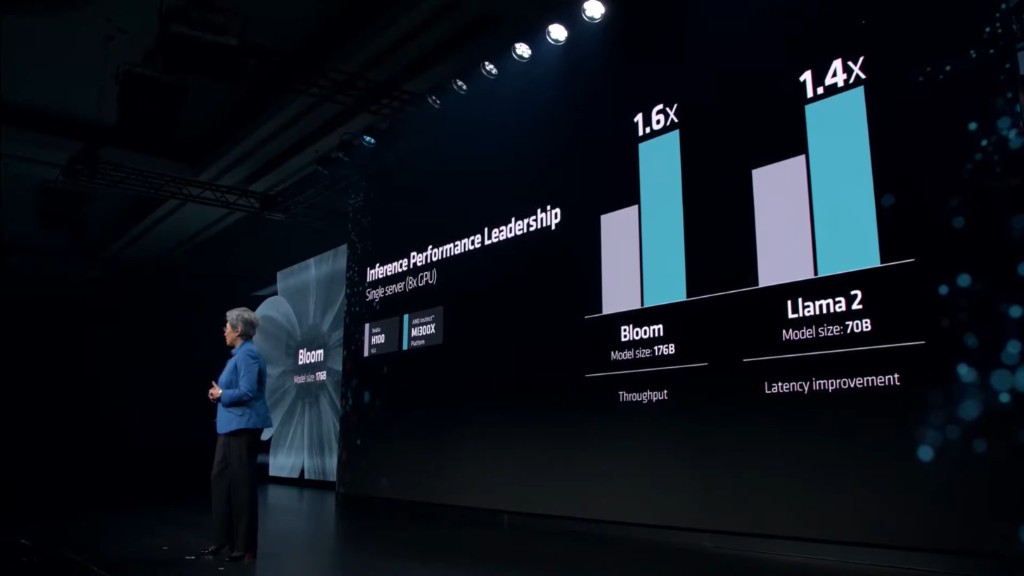

AMD says the MI300X delivers up to 60% higher performance than Nvidia's H100. In one-to-one comparisons with the H100, the MI300X was up to 20% faster on Llama 2 70B and up to 20% faster on FlashAttention 2. In 8-versus-8 server comparisons, it was up to 40% faster on Llama 2 70B and up to 60% faster on Bloom 176B.

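AMD CEO Lisa Su said the new chip is comparable to the H100 in its ability to train AI software and much better at inference, the process of running that software once it is put into real-world use.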

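The AI boom has created enormous demand for high-end chips, prompting chipmakers to target this lucrative market and speed up the launch of high-quality AI chips.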

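Although competition across the AI chip market is fierce, AMD made a bold forecast on Wednesday that the market will expand rapidly. Specifically, it now expects the AI chip market to exceed $400 billion by 2027, more than two and a half times the $150 billion it projected in August, underscoring how quickly expectations for AI hardware are changing.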
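The size of this revision is easy to misread. Using only the two forecast figures in the article, the sketch below expresses the change both as a multiple and as a percentage increase. Since the new forecast is "more than" $400 billion, treating it as exactly 400 gives a lower bound.

```python
# Forecast figures from the article, in billions of US dollars.
# The new forecast is "more than 400", so 400 is treated as a lower bound.
AUGUST_FORECAST_2027 = 150
NEW_FORECAST_2027 = 400


def revision(old: float, new: float) -> tuple[float, float]:
    """Return (new / old multiple, percentage increase), each rounded to 1 decimal."""
    multiple = new / old
    pct_increase = (new - old) / old * 100
    return round(multiple, 1), round(pct_increase, 1)


multiple, pct = revision(AUGUST_FORECAST_2027, NEW_FORECAST_2027)
print(f"{multiple}x the August forecast, up {pct}%")
```

This prints `2.7x the August forecast, up 166.7%`. In other words, the forecast grew by an amount equal to nearly twice the original estimate.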

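AMD is increasingly confident that its MI300 series can win over some of the tech giants, which could steer billions of dollars in spending toward AMD's products. AMD said Microsoft, Oracle and Meta are among its customers.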

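Also on Wednesday, reports indicated that Microsoft will assess demand for AMD's AI accelerators and evaluate the feasibility of adopting the new product. Meta will use AMD's new MI300X chips in its data centers, and Oracle said it will offer AMD's new chips in its cloud services.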

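The market had previously expected MI300-series shipments of roughly 300,000 to 400,000 units in 2024, with Microsoft and Google as the largest customers. Shipments could have been revised higher still were it not for a shortage of TSMC's CoWoS packaging capacity, more than 40% of which Nvidia had already reserved.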

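After AMD announced the MI300X, Nvidia's shares fell 1.5%. Nvidia's stock has soared this year, pushing its market value above $1 trillion, but the big question is how much longer it can keep the accelerator market to itself. AMD sees an opening: large language models require large amounts of memory, which is exactly where AMD believes its strength lies.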

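To defend its dominance, Nvidia is developing its own next-generation chips. In the first half of next year the H100 will be succeeded by the H200, which was unveiled last month, offers a new type of high-speed memory, and runs inference on Llama 2 twice as fast as the H100. Nvidia will also introduce a new processor architecture later this year.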