The AI chip buying frenzy shows no sign of stopping! Musk laments: staying competitive costs billions of dollars every year

Tesla CEO Elon Musk said that the AI race is like a high-stakes poker game that requires billions of dollars in investment each year to stay competitive. Tesla will spend more than $500 million on NVIDIA hardware this year alone, yet it still needs billions of dollars in hardware to catch up with its rivals. Global tech giants are scrambling to buy NVIDIA's AI chips, which are crucial for applications such as AI chatbots. The AI chip market has vast potential, and staying competitive in this field requires substantial financial investment.

Zhitong App has learned that Elon Musk, CEO of Tesla and founder of AI startup xAI, compares the AI arms race among tech companies to a high-stakes "poker game" in which companies must invest billions of dollars a year in AI hardware to stay competitive. The billionaire stated that in 2024 Tesla alone will spend more than $500 million on NVIDIA's AI chips, but he warned that Tesla will still need "billions of dollars" worth of hardware to catch up with some of its largest competitors.

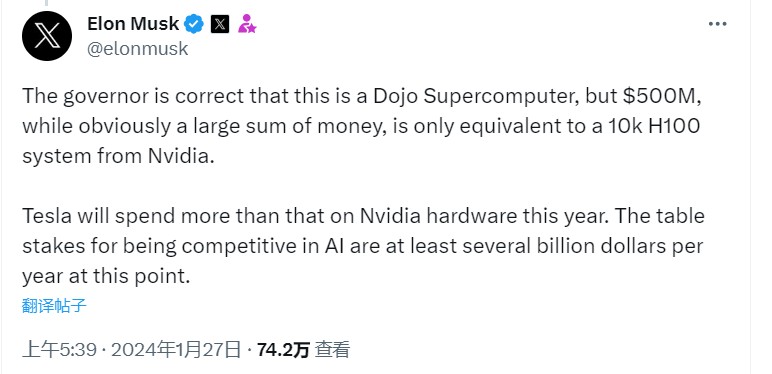

In a post on the social media platform X (formerly Twitter), Musk said, "Half a billion dollars is obviously a lot of money, but it is only equivalent to a 10,000-GPU H100 system from NVIDIA."

"Tesla will spend more than that on NVIDIA hardware this year. At this point, the minimum cost of staying competitive in AI is billions of dollars per year," Musk added.
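Musk's comparison implies a rough all-in price per GPU. The sketch below reads his figure as one system built from 10,000 H100 GPUs and assumes the $500 million covers the whole system (servers and networking as well as the chips), spread evenly across the GPUs. `implied_cost_per_gpu` is a hypothetical helper written for this illustration, not part of any library.

```python
def implied_cost_per_gpu(total_spend_usd: float, gpu_count: int) -> float:
    """Spread a total system budget evenly across its GPUs (assumption: all-in cost)."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_spend_usd / gpu_count


# Musk's comparison: about $500 million for a system of 10,000 H100 GPUs
per_gpu = implied_cost_per_gpu(500_000_000, 10_000)
print(f"${per_gpu:,.0f} per GPU")  # prints "$50,000 per GPU"
```

About $50,000 per GPU is above the $25,000 to $30,000 per-chip price quoted later in this article, which would be plausible if the figure includes the rest of the system, but that is an inference, not something Musk stated.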

Tech giants are rushing to buy AI chips

Currently, the world's largest tech companies are scrambling to buy as many NVIDIA H100 AI chips as they can, even paying hefty premiums on the secondary market. NVIDIA's AI chips excel at the heavy computational workloads that AI requires, which makes them crucial "underlying infrastructure" for building and training the large language models (LLMs) behind popular AI chatbots such as ChatGPT and Google Bard.

With the emergence of groundbreaking generative AI such as ChatGPT, the world is gradually entering a new era of AI. This has driven a surge in demand for NVIDIA GPUs, specifically the A100 and H100 chips used for AI training and inference, not only in the tech industry but across many sectors worldwide. As a result, NVIDIA (NVDA.US) delivered exceptionally strong results in 2023.

According to statistics, each NVIDIA H100 AI chip sells for between $25,000 and $30,000, and prices on eBay have reached as high as $40,000. Earlier this month, Mark Zuckerberg, CEO of Meta Platforms, the parent company of Facebook and Instagram, revealed in a media interview that Meta is building a massive stockpile of AI chips. The tech giant aims to have about 350,000 NVIDIA H100s by the end of this year, or roughly 600,000 H100 equivalents of compute once its other chips, which may include NVIDIA's upcoming new AI chips, are counted. If Meta pays the low end of the price range for the 350,000 H100s, that hardware alone would cost nearly $9 billion.
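The Meta estimate is unit count multiplied by unit price. The sketch below prices both Zuckerberg's public figure of about 350,000 H100s and his total of roughly 600,000 H100 equivalents at the quoted $25,000 to $30,000 per chip. It assumes list prices with no volume discounts, and pricing every H100 equivalent as an H100 is an illustrative simplification; `hardware_spend` is a hypothetical helper.

```python
# Per-H100 price range quoted in the article (USD)
LOW_PRICE, HIGH_PRICE = 25_000, 30_000


def hardware_spend(units: int, unit_price: float) -> float:
    """Naive spend estimate: units times list price (assumes no discounts)."""
    return units * unit_price


h100_units = 350_000        # H100s Zuckerberg said Meta would have by year end
h100_equivalents = 600_000  # total compute, expressed in H100 equivalents

estimates = {}
for label, units in [("H100s", h100_units), ("H100 equivalents", h100_equivalents)]:
    low = hardware_spend(units, LOW_PRICE) / 1e9   # USD billions
    high = hardware_spend(units, HIGH_PRICE) / 1e9
    estimates[label] = (low, high)
    print(f"{units:,} {label}: ${low:.2f}B to ${high:.2f}B")
```

Only the 350,000-unit figure lands near $9 billion at the low price ($8.75 billion); the 600,000 total would come to about $15 billion at the same price.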

Global cloud giants Oracle and Microsoft are also spending heavily on NVIDIA AI chips. Oracle founder and chairman Larry Ellison said in June 2023 that the company would spend "billions of dollars" on NVIDIA chips to meet cloud computing demand from a new wave of AI companies. Microsoft has formed a deep partnership with NVIDIA to jointly build a powerful AI supercomputer that combines Microsoft Azure's advanced supercomputing infrastructure with NVIDIA's AI chips, networking technology, and AI software stack.

Tesla and xAI will also be among the largest buyers of NVIDIA chips. Musk aims to build up a large chip reserve, and he said in a post on X that Tesla will buy large numbers of AI chips from NVIDIA and its rival AMD this year.

The billionaire has previously said on X that Tesla is an AI and robotics technology company, not just an electric vehicle manufacturer. He has also announced that the electric vehicle giant plans to invest more than $1 billion in building a giant "Dojo" supercomputer.

However, Musk recently voiced reservations about Tesla's AI plans. In a post on X, he said he was uncomfortable building up Tesla's AI capabilities without having more direct control over the company, adding that without about 25% of the voting rights he would rather build products outside Tesla.

Musk officially announced the founding of a new AI company, xAI, in the second half of last year. Its stated goal is to understand the true nature of the universe, and by working with engineers from US tech companies it aims to challenge industry leaders and build an alternative to ChatGPT. Although xAI is a separate company, it will work closely with SpaceX, X, Tesla (TSLA.US), and other companies led by Musk.

xAI's core team members have impressive backgrounds, having worked at renowned institutions such as DeepMind, OpenAI, Google Research, Microsoft Research, and Tesla. They have made significant contributions to major projects such as DeepMind's AlphaCode and OpenAI's GPT-3.5 and GPT-4 models. Musk therefore appears to be positioning xAI as a competitor to AI leaders such as OpenAI, Google, and Anthropic, which developed the globally popular chatbots ChatGPT, Bard, and Claude.

In November last year, xAI released its own AI chatbot, Grok. According to media reports citing insiders, the company is raising funds with an estimated valuation of around $20 billion, although Musk has denied this claim.

The future of AI chips is incredibly promising! AMD sharply raises its AI chip market forecast

As generative artificial intelligence (generative AI) grows in importance across industries worldwide, and as enterprises everywhere rush to deploy AI, investors have high expectations for the technology sector, especially chipmakers. After all, every AI technology depends on computing power, and AI chips are the hardware that supplies it. Booming demand for AI chips is precisely why TSMC's total revenue in the fourth quarter of 2023 held at a historically high level: essentially flat with the same period a year earlier, and well above market expectations.

According to the latest forecast from market research firm Gartner, AI chip revenue is expected to grow 25.6% year over year to $67.1 billion in 2024. By 2027, the market is projected to reach $119.4 billion, more than double its 2023 size.
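Gartner's two figures imply a 2023 base and a compound annual growth rate (CAGR) that the forecast does not state directly. The sketch below backs out the 2023 revenue from the 2024 figure and its 25.6% growth, then applies CAGR = (end / start)^(1 / years) - 1 over the four years from 2023 to 2027. These derived numbers are arithmetic on the quoted forecast, not figures Gartner published.

```python
revenue_2024 = 67.1   # USD billions, Gartner forecast
growth_2024 = 0.256   # 25.6% year-over-year growth in 2024
revenue_2027 = 119.4  # USD billions, Gartner forecast

# Back out the implied 2023 base from the 2024 figure and its growth rate
revenue_2023 = revenue_2024 / (1 + growth_2024)

# Growth multiple and compound annual growth rate, 2023 -> 2027 (4 years)
multiple = revenue_2027 / revenue_2023
cagr = multiple ** (1 / 4) - 1

print(f"Implied 2023 base: ${revenue_2023:.1f}B")
print(f"2027 vs 2023: {multiple:.2f}x, CAGR about {cagr:.1%}")
```

The implied 2023 base comes out at roughly $53.4 billion, so $119.4 billion is indeed a bit more than double it, which works out to annual growth in the low 20s percent.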

However, AMD, NVIDIA's strongest competitor, is even more optimistic about the future AI chip market. At its "Advancing AI" event, AMD abruptly raised its forecast for the global data center AI accelerator market in 2027 from $150 billion to $400 billion, compared with a 2023 market of only about $30 billion.

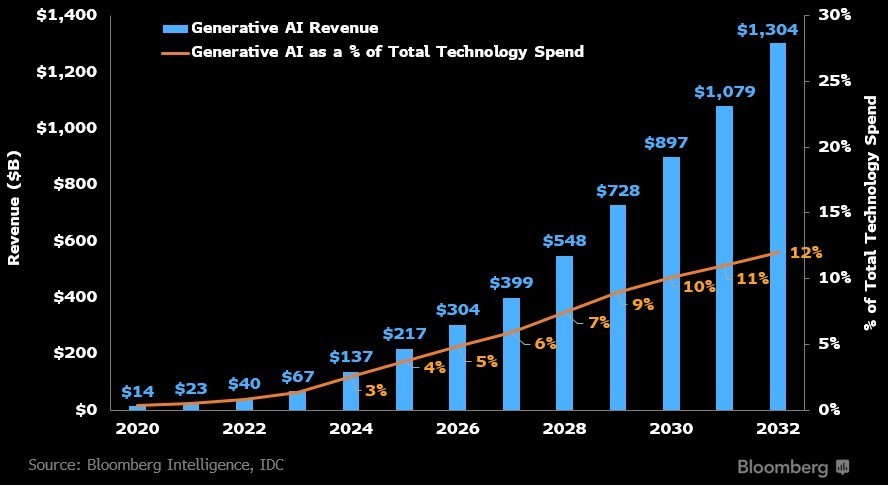

With the successive arrival of consumer-facing generative AI applications such as ChatGPT and Google's Bard, and with more and more technology companies joining the AI trend, a decade-long AI boom may be under way. According to a recent report by Bloomberg Intelligence analyst Mandeep Singh, total generative AI revenue, covering both the AI chips needed to train AI systems and the AI software and hardware used in end applications, is expected to rise from $40 billion in 2022 to $1.3 trillion by 2032. That is roughly 32-fold growth over 10 years, a compound annual growth rate of about 42%.
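The growth multiple and compound annual growth rate follow from CAGR = (end / start)^(1 / years) - 1. The sketch below checks which 2032 figure, $1.3 trillion or $13 trillion, matches the "32 times" and "42%" numbers in the Bloomberg estimate, assuming a $40 billion base and a 10-year horizon. `growth_stats` is a hypothetical helper written for this illustration.

```python
def growth_stats(base: float, target: float, years: int) -> tuple[float, float]:
    """Return (growth multiple, compound annual growth rate) from base to target."""
    multiple = target / base
    cagr = multiple ** (1 / years) - 1
    return multiple, cagr


BASE = 40e9  # generative AI revenue at the start of the period, USD
YEARS = 10

results = {}
for label, target in [("$1.3 trillion", 1.3e12), ("$13 trillion", 13e12)]:
    multiple, cagr = growth_stats(BASE, target, YEARS)
    results[label] = (multiple, cagr)
    print(f"{label}: {multiple:.1f}x over {YEARS} years, CAGR about {cagr:.0%}")
```

Only the $1.3 trillion target reproduces both quoted figures (32.5x, about 42% a year); $13 trillion would mean roughly 325-fold growth.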