Could 2024 be the year liquid cooling breaks out? Led by NVIDIA, demand for liquid cooling is on a rapid growth path

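In 2024, demand for liquid cooling is booming under NVIDIA's lead, and Vertiv's stock has surged more than 600% since the start of 2023. Wall Street analysts believe that, driven by NVIDIA, liquid cooling will become a must-have solution for high-performance AI servers. Vertiv's results were strong: first-quarter total orders rose 60% year on year, and the company expects 2024 sales to grow about 12% year on year. Envicool, a liquid cooling specialist, also posted strong first-quarter results.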

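Fueled by the frenzy of global companies deploying AI since 2023, shares of Vertiv (VRT.US), one of the liquid cooling suppliers for NVIDIA's (NVDA.US) most powerful AI GPU server, the GB200, have surged more than 600% since the start of 2023, including a gain of 103% so far in 2024. Wall Street analysts believe that, with a strong push from NVIDIA, the dominant player in AI chips, liquid cooling in ultra-high-performance AI servers is poised to move from "optional" to "mandatory", implying a very large future market for liquid cooling solutions. As for share prices, the rally in liquid cooling leaders such as Vertiv may be far from over.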

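Vertiv's latest results and outlook also pleased the market, pointing to surging demand for liquid cooling in AI data centers worldwide and, indirectly, to still-intense global demand for NVIDIA AI GPUs. Vertiv, a GB200 liquid cooling supplier, recently reported first-quarter total orders up 60% year on year and a quarter-end backlog of $6.3 billion, a record high. First-quarter net sales were $1.639 billion, up 8% year on year, and adjusted operating profit was $249 million, up 42%.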

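Beyond strong first-quarter orders and sales, Vertiv raised its full-year 2024 guidance by more than the market expected. The midpoint of its sales guidance implies growth of about 12% over a strong 2023, and it now guides adjusted operating profit of $1.325 billion to $1.375 billion, with the midpoint about 28% above the strong full-year 2023 figure.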
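A quick back-of-the-envelope check of the Vertiv figures: dividing each reported value by one plus its growth rate recovers the implied prior-period base. This is a sketch using only the numbers quoted above; because the growth rates are rounded, the derived 2023 bases are approximations rather than Vertiv-reported figures, and the helper name `implied_base` is our own.

```python
def implied_base(current: float, growth: float) -> float:
    """Back out the prior-period value from a current value and its growth rate."""
    return current / (1 + growth)


# Figures quoted in the article, in USD billions; growth rates are rounded.
q1_sales = implied_base(1.639, 0.08)          # Q1 2023 net sales (derived)
q1_adj_op_profit = implied_base(0.249, 0.42)  # Q1 2023 adjusted operating profit (derived)

guide_low, guide_high = 1.325, 1.375          # 2024 adjusted operating profit guidance
guide_mid = (guide_low + guide_high) / 2
fy2023_adj_op_profit = implied_base(guide_mid, 0.28)  # "about 28%" growth at the midpoint

print(f"Implied Q1 2023 net sales:       ${q1_sales:.3f}B")
print(f"Implied Q1 2023 adj. op. profit: ${q1_adj_op_profit:.3f}B")
print(f"2024 guidance midpoint:          ${guide_mid:.3f}B")
print(f"Implied FY2023 adj. op. profit:  ${fy2023_adj_op_profit:.3f}B")
```

The guidance midpoint of $1.35 billion implies a 2023 adjusted operating profit of roughly $1.05 billion.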

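In China's A-share market, Envicool (002837.SZ), a liquid cooling leader, also reported very strong first-quarter results. For the period, Envicool posted operating revenue of 746 million yuan, up 41.36% year on year; net profit attributable to shareholders of the listed company of 61.9752 million yuan, up 146.93%; and net profit attributable to shareholders after deducting non-recurring gains and losses of 54.3077 million yuan, up 169.65%.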

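Looking ahead, liquid cooling adoption may enter an "explosive growth" phase from 2024. According to Dell'Oro Group estimates published in February 2024, the data center thermal management market (air plus liquid cooling) will reach $12 billion in 2028, of which liquid cooling will account for $3.5 billion, nearly one-third of total thermal management spending, compared with less than one-tenth today.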
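To see where "nearly one-third" comes from, divide projected liquid cooling spend by the total thermal management market. A minimal sketch using only the Dell'Oro figures quoted above (USD billions); the air-cooling remainder is derived here, not a Dell'Oro number.

```python
# Dell'Oro Group 2028 projections quoted above, in USD billions.
total_thermal_2028 = 12.0  # air + liquid cooling
liquid_2028 = 3.5

liquid_share = liquid_2028 / total_thermal_2028
air_2028 = total_thermal_2028 - liquid_2028  # derived remainder, not quoted

print(f"Liquid cooling share of 2028 spend: {liquid_share:.1%}")
print(f"Implied air cooling spend in 2028:  ${air_2028:.1f}B")
```

The share works out to roughly 29%, which the article rounds to "nearly one-third".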

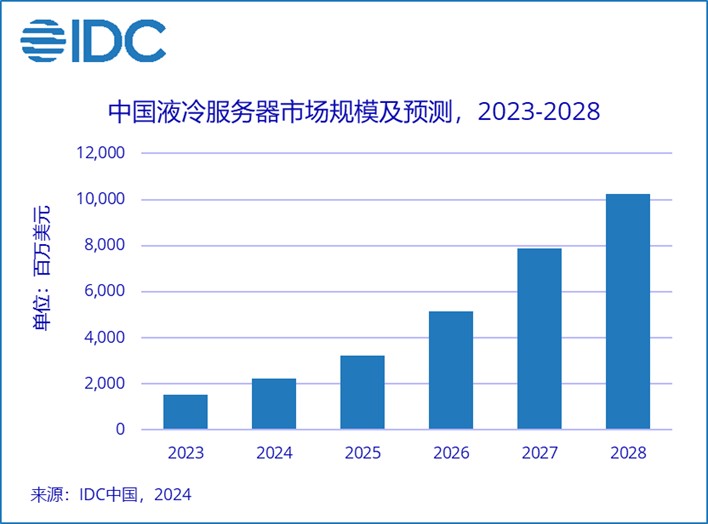

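IDC, the international research firm, recently reported that China's liquid-cooled server market continued to grow rapidly in 2023. The market reached $1.55 billion for the full year, up 52.6% from 2022, and more than 95% of it used cold-plate liquid cooling. IDC forecasts a compound annual growth rate of 45.8% for China's liquid-cooled server market from 2023 to 2028, with the market expected to reach $10.2 billion in 2028.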
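The IDC forecast can be checked by compounding the 2023 base at the stated CAGR. This sketch assumes simple annual compounding over the five years from 2023 to 2028 (our assumption about how the CAGR is applied); the helper `project` is a hypothetical name.

```python
def project(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + cagr) ** years


base_2023 = 1.55  # China liquid-cooled server market, USD billions (IDC)
cagr = 0.458      # IDC's 2023-2028 CAGR
size_2028 = project(base_2023, cagr, 2028 - 2023)

# Back out the 2022 market from the stated 52.6% year-on-year growth.
base_2022 = base_2023 / (1 + 0.526)

print(f"Projected 2028 market: ${size_2028:.1f}B")
print(f"Implied 2022 market:   ${base_2022:.2f}B")
```

The projection lands at about $10.2 billion, matching IDC's 2028 figure, and implies a 2022 market of roughly $1.02 billion.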

Liquid cooling: moving from an "option" to a "requirement" for AI server thermal modules

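Today, AI servers built on NVIDIA's H100 AI GPU use a variety of cooling solutions worldwide, but air cooling remains the mainstream choice. Although liquid cooling is gaining ground thanks to its advantages in high-performance computing (such as more effective thermal management and better energy efficiency), liquid-cooled servers have not been deployed across all systems that use NVIDIA H100 GPUs.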

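In the era of NVIDIA's new Blackwell-architecture GPUs (the B100/B200/GB200 AI GPUs), the sharp jump in GPU performance means that, from a technical standpoint, air cooling is close to its limits, and the era of liquid cooling is beginning. As liquid cooling shifts from "optional" to "mandatory" in AI servers, its market will expand substantially, making it one of the key niches within AI computing. Overall, liquid cooling not only keeps AI GPU servers running efficiently around the clock at peak performance but also helps extend hardware life.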

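NVIDIA's GB200 supercomputing server is in a class of its own. GB200, the AI supercomputing system NVIDIA built from two B200 AI GPUs and its in-house Grace CPU, improves performance on large language model (LLM) inference workloads by 30 times while cutting cost and energy consumption by about 25 times compared with the previous Hopper architecture. On the 175-billion-parameter GPT-3 LLM benchmark, GB200 delivers 7 times the inference performance of an H100 system and 4 times its training speed.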

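A performance leap this large means air-cooled thermal modules can no longer keep these computing systems adequately cool, which is a key reason NVIDIA chose to adopt liquid cooling at scale for the GB200 AI GPU servers slated for mass production in September.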

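As AI and machine learning algorithms grow more complex, demand for AI computing power is rising quickly. Especially when training large AI models or running large-scale AI inference, AI servers need high-performance GPUs to handle these compute-intensive tasks. High-performance AI GPUs such as NVIDIA's GB200 generate a great deal of heat during operation and need effective cooling to sustain efficiency and hardware life. Liquid cooling systems move heat from sources such as GPUs to radiators faster and more effectively, reducing heat buildup, greatly lowering the risk of transistor burnout, and keeping GPUs running at high performance over the long term.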

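In terms of technical approach, the mainstream industry view is that indirect cold-plate liquid cooling is likely to achieve broad adoption before direct liquid cooling. Liquid cooling systems are classified by how the liquid contacts the hardware: direct liquid cooling includes immersion and spray cooling, while indirect liquid cooling mainly refers to cold-plate solutions. Cold-plate technology is mature, requires no change to the form factor of existing servers, is easy to manufacture and relatively inexpensive, and offers enough cooling capacity for AI servers, so it is likely to be adopted first.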

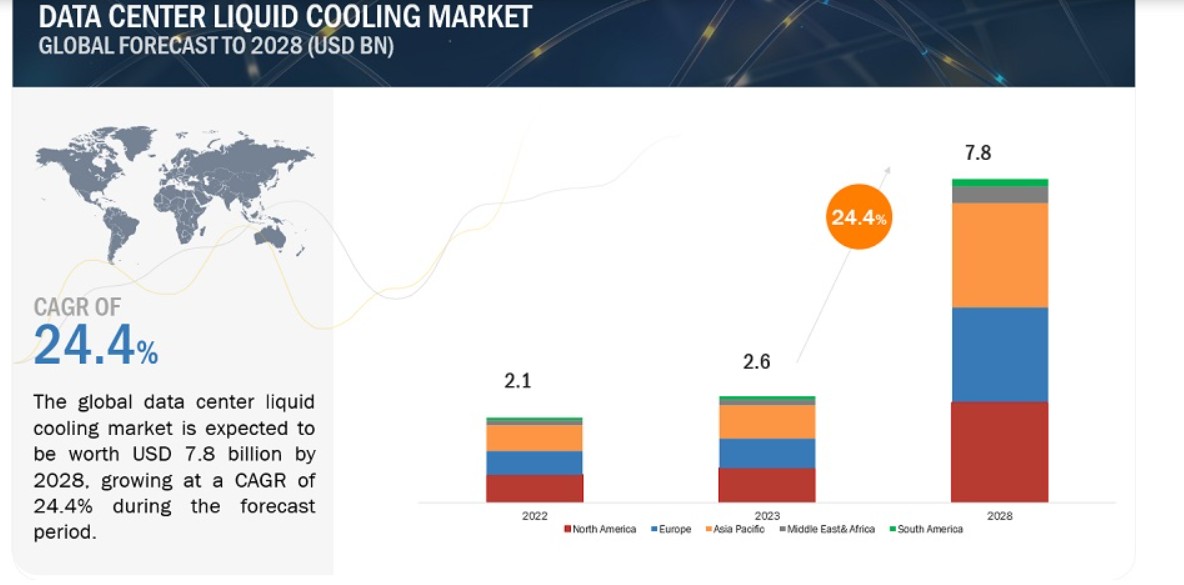

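A report from research firm MarketsandMarkets projects the global data center liquid cooling market to grow from $2.6 billion in 2023 to at least $7.8 billion in 2028, a compound annual growth rate of 24.4% over the forecast period. MarketsandMarkets says the growth of AI servers, edge computing and Internet of Things (IoT) devices calls for compact, effective cooling, and liquid cooling can cool small devices and small servers so that they handle large volumes of data under demanding conditions. Overall, amid strong demand for modern data centers to process enormous volumes of data, the data center liquid cooling market is driven mainly by the need for better cooling efficiency, energy savings, scalability and sustainability, and by the requirements of higher-performance hardware such as GPUs.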
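Working backward from the two MarketsandMarkets endpoints gives the implied growth rate. A sketch assuming five annual compounding periods from 2023 to 2028; because the published endpoints are rounded to one decimal place, the result differs slightly from the stated 24.4%. The helper `implied_cagr` is a hypothetical name.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


start_2023, end_2028 = 2.6, 7.8  # USD billions, as quoted above
rate = implied_cagr(start_2023, end_2028, 2028 - 2023)

print(f"CAGR implied by rounded endpoints: {rate:.1%}")  # compare with the stated 24.4%
```

The rounded endpoints imply roughly 24.6%, within rounding of the reported 24.4%; conversely, compounding $2.6 billion at 24.4% for five years gives about $7.7 billion.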

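Wall Street analysts broadly expect global corporate investment in AI to keep expanding data center capacity. That is good news for Vertiv, which generates most of its revenue from data center power management products and from IT liquid cooling and hybrid cooling systems used in data centers; its core business is supplying power management and a range of cooling technologies to data centers worldwide.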

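Vertiv is currently focused on developing advanced liquid cooling for AI data centers. According to public information, the advanced liquid cooling solution for next-generation NVIDIA GPU-accelerated data centers that Vertiv is co-developing with AI chip leader NVIDIA (NVDA.US) is expected to support GB200. Vertiv's high-density power and cooling solutions are designed to let NVIDIA's next-generation GPUs run the most compute-intensive AI workloads safely, at peak performance and with high availability.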

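Compiled data show that Wall Street analysts have 8 "buy" ratings and 1 "hold" rating on Vertiv, with no "sell" ratings, for a consensus rating of "strong buy". The most optimistic price target is $102 (the stock closed at a record $97.94 on Thursday). Noah Kaye, an analyst at Oppenheimer & Co., highlighted that the "AI megatrend" is expanding the addressable market for AI data center capacity, and expects Vertiv's high-density computing market alone to reach $25 billion by 2026.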

Goldman Sachs favors this Chinese liquid cooling leader

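Goldman Sachs believes AI, the "fuel" powering global equity markets, is far from exhausted. In its latest forecast report, the bank said global equities are only in the first phase of an AI-led investment boom, which will extend into second, third and fourth phases and lift a growing number of industries worldwide.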

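"If NVIDIA represents the first phase of the AI stock trade, the AI chip phase that benefits most directly, the second phase will be other companies around the world helping to build AI-related infrastructure," the bank wrote. "We expect the third phase to be companies incorporating AI into their products to grow revenue, and the fourth phase to be broad AI-related productivity gains, which could be realized across many companies worldwide."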

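The second phase of the AI investment boom centers on companies other than NVIDIA that are building AI infrastructure, including semiconductor equipment makers such as ASML and Applied Materials, chipmakers, cloud service providers, data center REITs, data center hardware and equipment companies, software security stocks and utilities. For this phase, Goldman's report specifically named one Chinese listed company: Envicool (Shenzhen Envicool), which focuses on precision liquid cooling technology for servers, data centers and energy storage systems.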

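IDC expects China's liquid-cooled server market to grow at a compound annual rate of 45.8% from 2023 to 2028, reaching $10.2 billion in 2028. IDC data show that, driven by industry demand and policy support, China's liquid-cooled server market grew further in 2023 and the ecosystem of liquid cooling partners became increasingly diverse, indicating a very positive market attitude toward data center liquid cooling. As Chinese AI companies and organizations raise both their requirements for building intelligent computing centers and their demand for computing power, the energy consumption of IT equipment in these data centers is rising sharply. Efficient liquid cooling is therefore all the more necessary to maintain suitable operating temperatures; otherwise, lifecycle management and operations for large-model products would face major challenges.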

Liquid cooling's global popularity signals very strong demand for NVIDIA AI GPUs

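Very strong results from liquid cooling leaders such as Vertiv and Envicool, together with analysts' increasingly bullish expectations for Vertiv, suggest that demand for liquid cooling in data centers worldwide, especially AI data centers, is surging. They also indirectly show that global demand for NVIDIA AI GPUs based on the Hopper architecture and the newly launched Blackwell architecture remains extremely strong.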

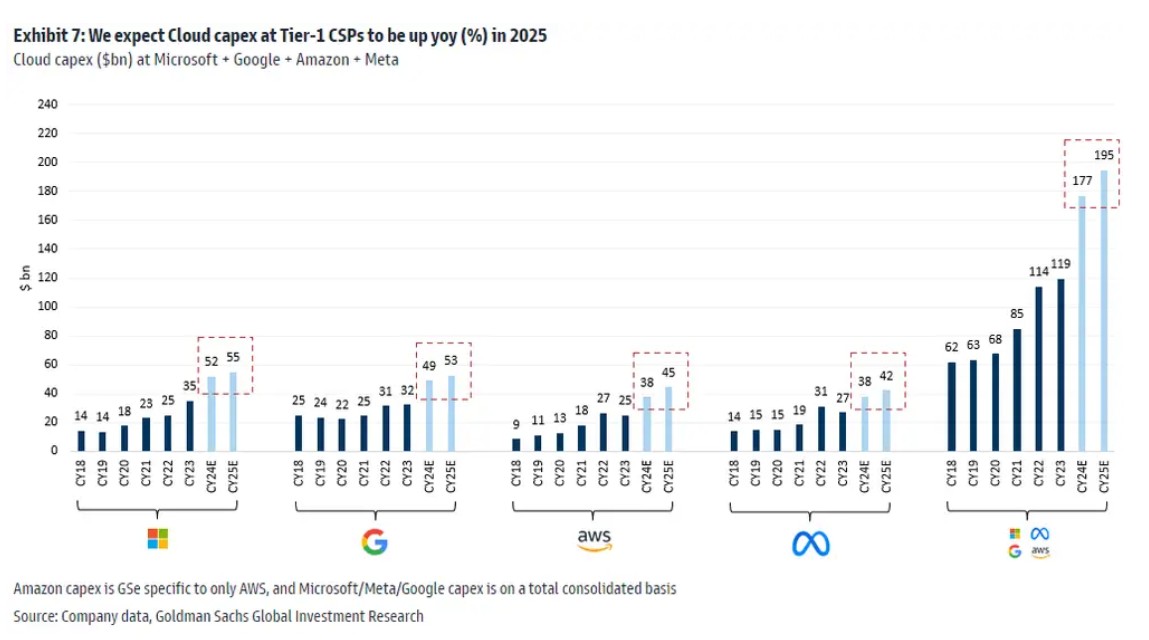

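Goldman Sachs expects Microsoft, Google, Amazon's AWS and Facebook parent Meta to spend a combined $177 billion on cloud computing capital expenditure this year, far above last year's $119 billion, with the total rising further to $195 billion in 2025.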
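The year-over-year growth implied by Goldman's capex figures can be computed directly. A minimal sketch using the combined totals quoted above; treating "last year" as 2023 follows from the article being written in 2024.

```python
# Combined cloud capex of Microsoft, Google, AWS and Meta, in USD billions.
# 2023 is the prior-year figure; 2024 and 2025 are Goldman Sachs estimates.
capex = {2023: 119, 2024: 177, 2025: 195}

years = sorted(capex)
growth = {cur: capex[cur] / capex[prev] - 1 for prev, cur in zip(years, years[1:])}

for year, rate in growth.items():
    print(f"{year}: {rate:+.1%} year on year")
```

The figures imply growth of roughly 48.7% in 2024 and 10.2% in 2025: spending keeps rising in absolute terms while the growth rate slows.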

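According to media reports, Microsoft and OpenAI are negotiating the details of a massive data center project costing up to $100 billion. It would include an AI supercomputer tentatively named "Stargate", the largest in a series of AI supercomputing facilities the two AI leaders plan to build over the next six years.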

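There is little doubt that this giant AI supercomputer will be equipped with millions of units of its core hardware, NVIDIA's steadily upgraded AI GPUs, to supply computing power for OpenAI's future, more powerful GPT models and for AI applications even more disruptive than ChatGPT and the Sora text-to-video model.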

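Although incremental demand for AI GPUs, the core hardware, may level off as supply bottlenecks ease, the underlying hardware market will keep expanding, and the shortage of NVIDIA's high-performance AI GPUs may not be fully resolved for the next few years. This is a key reason Goldman Sachs and other Wall Street banks expect NVIDIA shares to reach $1,100 within the next year (NVIDIA closed at $887.47 on Thursday).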
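The gap between Goldman's target and Thursday's close can be expressed as implied upside. A sketch using only the two prices quoted above; `upside` is a hypothetical helper name.

```python
def upside(target: float, price: float) -> float:
    """Fractional gain needed for `price` to reach `target`."""
    return target / price - 1


nvda_upside = upside(1100.0, 887.47)  # Goldman target vs. Thursday close
print(f"Implied upside to $1,100: {nvda_upside:.1%}")
```

The target sits roughly 24% above Thursday's close.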

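In particular, because AI models and AI software must keep being updated and iterated, developers will need to keep buying or upgrading AI GPU systems, so the AI hardware market is likely to remain enormous for years to come. According to the latest forecast from research firm Gartner, the AI chip market will grow 25.6% in 2024 to $67.1 billion, and by 2027 it is expected to reach $119.4 billion, more than double its 2023 size.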
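Gartner's figures imply a 2023 base that can be used to check the "more than double" claim. A sketch using only the quoted numbers; the 2023 value is derived from the 25.6% growth rate, not quoted directly.

```python
# Gartner AI chip market figures quoted above, in USD billions.
market_2024 = 67.1
growth_2024 = 0.256
market_2027 = 119.4

market_2023 = market_2024 / (1 + growth_2024)  # derived, not quoted
multiple_2027 = market_2027 / market_2023

print(f"Implied 2023 market:   ${market_2023:.1f}B")
print(f"2027 relative to 2023: {multiple_2027:.2f}x")
```

This gives about $53.4 billion for 2023 and roughly 2.2 times that by 2027, consistent with "more than double".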