DeepSeek V3.1 Base launched with a surprise! Defeated Claude 4 with programming performance off the charts, the whole internet is waiting for R2 and V4

DeepSeek officially released the new V3.1 version, supporting a context length of 128k, with 685B parameters, and outstanding programming capabilities among open-source models, scoring 71.6% on the Aider programming benchmark, surpassing Claude Opus 4. It has added native search support, removed the "R1" label, and may adopt a hybrid architecture in the future. The cost for each programming task is only $1.01, and DeepSeek's fan base has exceeded 80,000, with users looking forward to the release of R2

Just last night, DeepSeek officially launched the new V3.1 version, expanding the context length to 128k.

The open-source V3.1 model has 685 billion parameters and supports various precision formats, from BF16 to FP8.

Based on public information and the practical tests by domestic expert karminski3, the highlights of the V3.1 update include:

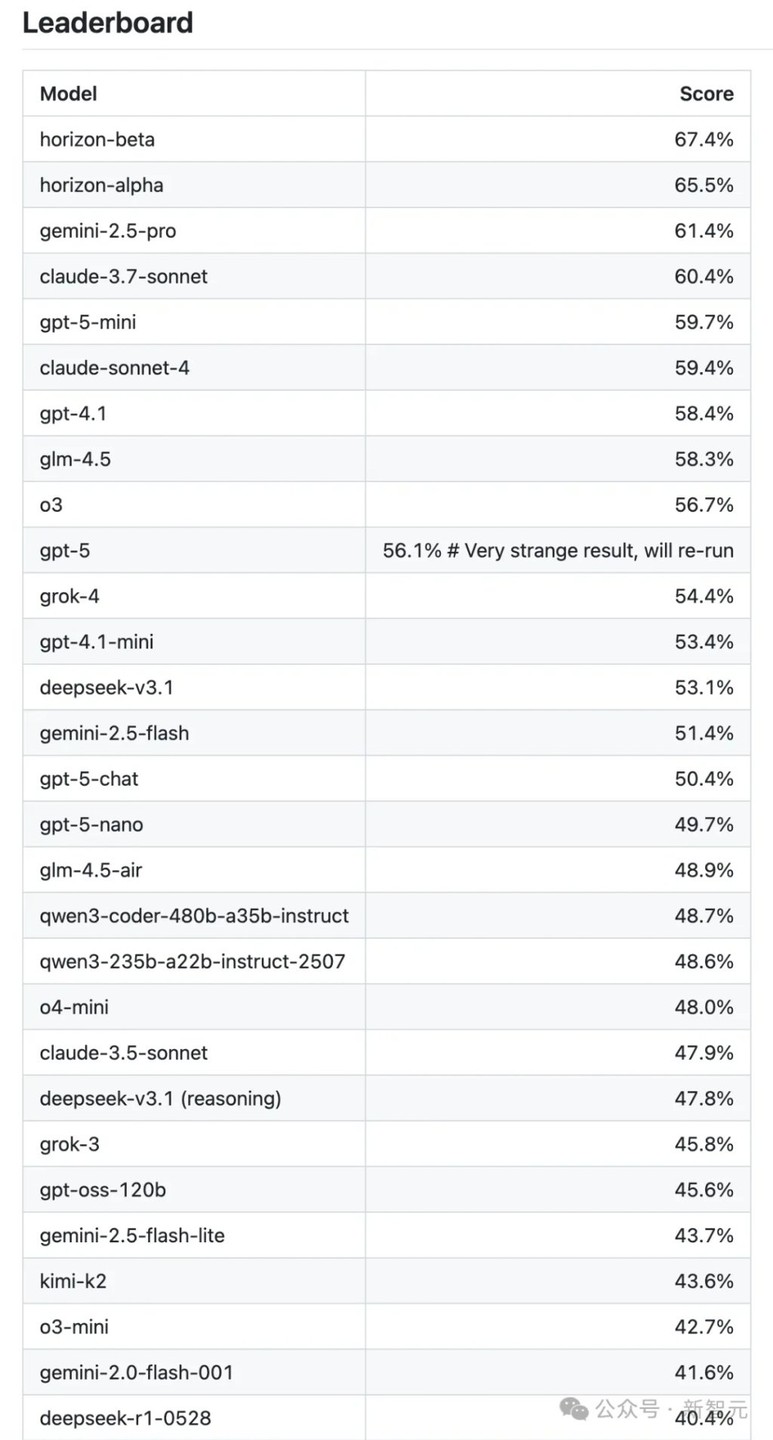

- Programming capability: Outstanding performance, ranking at the top among open-source models based on community usage Aider test data.

- Performance breakthrough: V3.1 achieved a high score of 71.6% in the Aider programming benchmark test, surpassing Claude Opus 4, while also being faster in inference and response speed.

- Native search: Added support for native "search token," which means better support for searches.

- Architectural innovation: The online model removed the "R1" designation, with analysis suggesting that DeepSeek is likely to adopt a "hybrid architecture" in the future.

- Cost advantage: Each complete programming task only costs $1.01, which is just one-sixtieth of proprietary systems.

It is worth mentioning that the official group emphasized the expansion to 128K context, which was already supported in the previous V3 version.

The enthusiasm for this update is quite high among everyone.

Even without the release of the model card, DeepSeek V3.1 has already ranked fourth on the trending list of Hugging Face.

DeepSeek's fan count has surpassed 80,000.

Seeing this, netizens are even more looking forward to the release of R2!

Hybrid reasoning, programming beats Claude 4

The most noticeable change this time is that DeepSeek has removed the "R1" from deep thinking (R1) in the official APP and web version

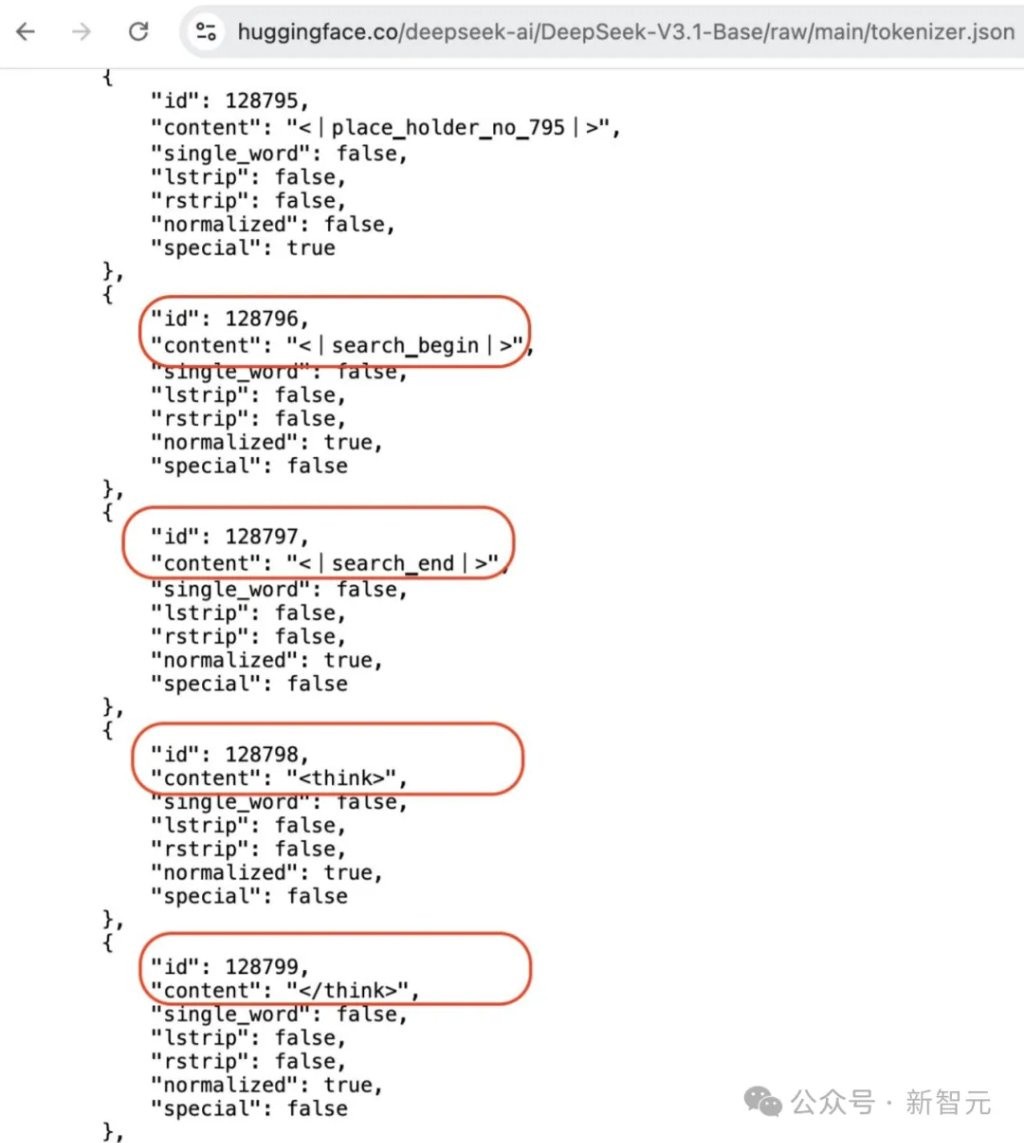

At the same time, compared to V3-base, DeepSeek V3.1 has added four special tokens:

- <|search▁begin|> (id: 128796)

- <|search▁end|> (id: 128797)

- (id: 128798)

- (id: 128799)

In this regard, there are speculations that this may suggest a fusion of reasoning models and non-reasoning models.

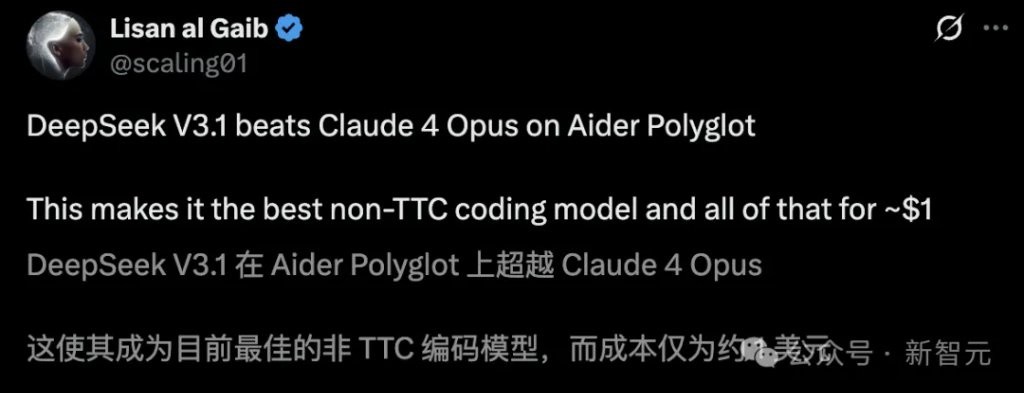

In terms of programming, according to results revealed by netizens, DeepSeek V3.1 scored 71.6% in the Aider Polyglot multilingual programming test, defeating Claude 4 Opus and DeepSeek R1.

Moreover, its cost is only 1 dollar, making it the SOTA among non-reasoning models.

The most striking comparison shows that V3.1's programming performance is 1% higher than Claude 4, while its cost is 68 times lower.

In the SVGBench benchmark, V3.1's strength is second only to GPT-4.1-mini, far surpassing the capabilities of DeepSeek R1.

In MMLU multi-task language understanding, DeepSeek V3.1 is on par with GPT-5. However, in programming, graduate-level benchmark Q&A, and software engineering, V3.1 has a certain gap compared to it

A netizen conducted a physical test simulating the free fall of small balls in a hexagon, and DeepSeek V3.1 showed a significant improvement in comprehension.

First-Hand Testing

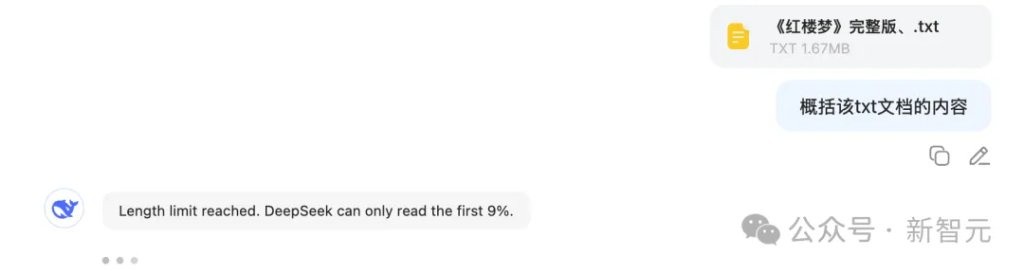

We conducted a real-time test on V3.1, focusing first on the key update of this model: context length.

Assuming that for Chinese, 1 token ≈ 1–1.3 Chinese characters, then this 128K tokens ≈ 100,000–160,000 Chinese characters.

This is equivalent to 1/6–1/8 of the entire text of "Dream of the Red Chamber" (about 800,000–1,000,000 characters), or one article.

The actual test was also quite accurate; DeepSeek told us it could only read about 9%, which is roughly one-tenth.

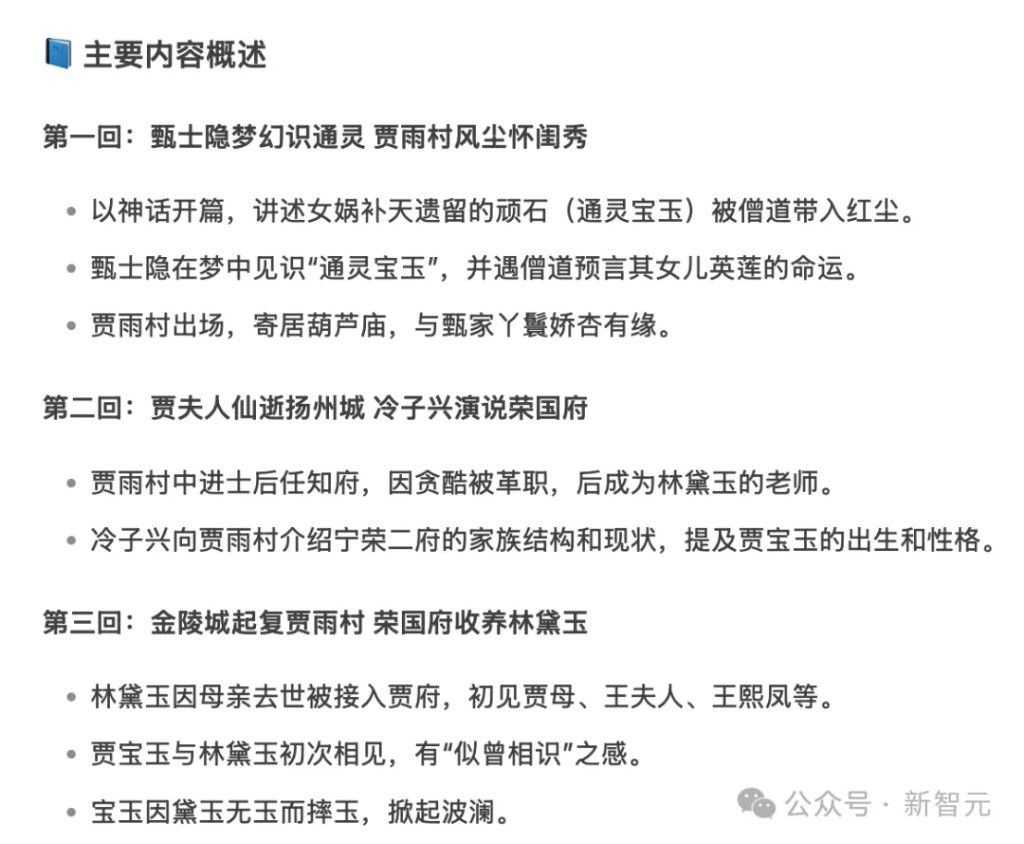

Due to the summary being too long, we extracted the first three chapters. What do you think of this summary?

In the 128K context test, DeepSeek-V3.1's output speed has significantly improved compared to before, and some optimizations have been made in engineering.

In this update, DeepSeek emphasized support for context.

The whole task put some pressure on DeepSeek-V3.1, asking it to output as much content as possible based on the character "dream," trying to reach the context limit.

However, in the end, it only outputted about 3,000 characters before the model stopped outputting.

Let's take a look at reasoning ability.

Let's take a look at reasoning ability.

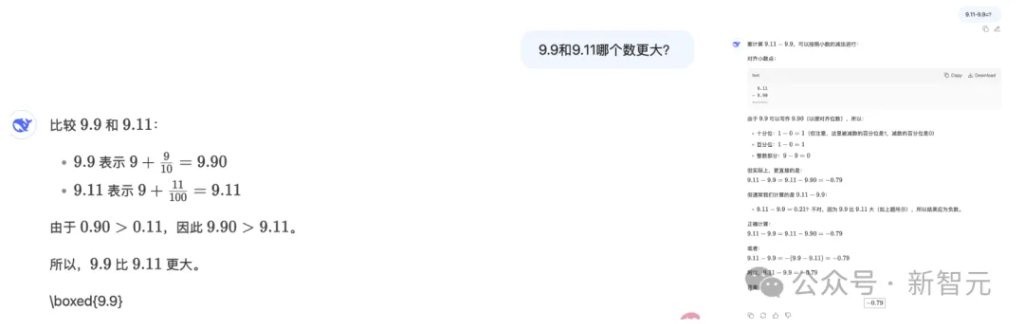

The classic comparison question of 9.11 and 9.9 can be answered correctly in both inquiry methods.

One major feeling from this update is that the speed has increased significantly.

Finally, let's examine programming ability.

DeepSeek's previous model was R1-0528, which focused on programming capabilities.

Let's see if this time V3.1 has a greater improvement.

The final result can only be rated at 80 points; it meets the basic requirements, but the visual style and color-changing functions have not been perfectly implemented.

Moreover, compared to the results of R1-0528, there is still some gap between the two, but which is better or worse depends on personal preference.

Here are the results after activating the thinking mode; which one do you think is better?

Next, let's see if DeepSeek V3.1 can replicate the French learning app from the GPT-5 launch event.

We will also let V3.1 create its own SVG self-portrait; the two effects are indeed somewhat abstract.

Risk Warning and Disclaimer

The market has risks, and investment requires caution. This article does not constitute personal investment advice and does not take into account the specific investment goals, financial situation, or needs of individual users. Users should consider whether any opinions, views, or conclusions in this article align with their specific circumstances. Investing based on this is at one's own risk