【In-depth Interview】Chen Tianqi: A Card Runs Large Models, iPhone Runs 70B, Overcoming NVIDIA's GPU Computing Power Dilemma

算力不是問題了?

最近,很多人都在为算力发愁。

Big tech 和初创公司们正在疯狂囤积英伟达 GPU,VC 和媒体们正如统计核库存般仔细盘点 GPU 的供需,互联网上分析 GPU 短缺的文章,亦如雨后春笋般涌现。

不过,如果我们可以用 A 卡代替 N 卡,甚至不需要 GPU 就可以训练大模型,一切又会发生怎样的变化呢?

说到这里,就不得不提到一位大神——TVM、MXNET、XGBoost 作者,卡内基·梅隆大学助理教授,OctoML CTO 陈天奇。

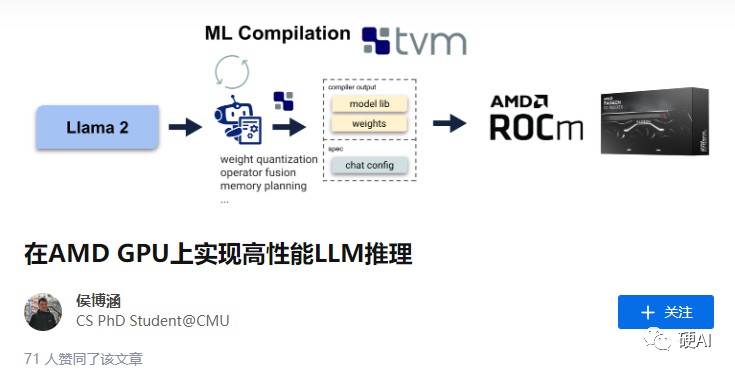

最近,由陈天奇带领的 CMU 机器学习编译小组(MLC)释出了使用 AMD 显卡进行大模型推理的新方案,立刻获得了机器学习社区的广泛关注。

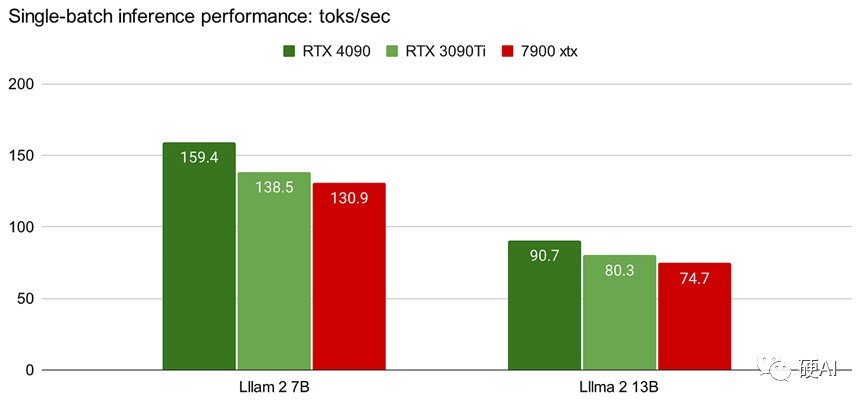

通过这种优化方法,在最新 Llama2 的 7B 和 13B 模型中,用一块 AMD Radeon RX 7900 XTX,速度已可达到英伟达 RTX 4090 的 80%,或是 3090Ti 的 94%。

8 月 11 日,陈天奇同风险投资公司 Decibel Partners 的合伙人兼首席技术官 Alessio、Latent Space 主理人 Swyx 展开了深度交流,

围绕 MLC、XGBoost、WebLLM、OctoAI、自优化计算等话题展开了深度讨论。

以下为全文内容,大家 enjoy~ ✌️

(由 ChatGPT 翻译,经略微修改)

目录:

● XGboost 的创建历程

● 对比 | 基于树的模型与深度学习模型

● TVM、ONNX 概述

● MLC 深度研究

● 使用 int4 quantization 进行模型推理

● 比较 | MLC 与其他模型优化项目

● 在线运行大语言模型

1、XGBoost 的创建历程

Alessio:

谈及 XGBoost,很多听众可能知道它是一个梯度提升库,而且可能是最受欢迎的一个。

它之所以如此流行,是因为很多人开始在机器学习竞赛中使用它。我猜,甚至可能有一个维基百科页面列出了所有使用 XGBoost 的最先进模型,上面的名单可能非常长。

在你构建 XGBoost 时,你是否能预测它会如此流行,或者在创造它时,你的预期是什么?

陈天奇:

实际上,我们构建这个库最初的原因是当时刚刚出现了深度学习,那是 AlexNet 刚刚问世的时候。

我和我的导师 Carlos Guestrin 的一个雄心勃勃的使命是思考我们是否能找到替代深度学习模型的方法?

那个时候,还有其他替代方案,比如支持向量机、线性模型,当然还有基于树的模型。

我们的问题是,如果我们构建这些模型,并且使用足够大的数据来训练它们,我们是否能够获得相同的性能?

当然,现在看来,那是一个错误的假设,但作为副产品,我们发现大多数梯度提升库并不足够高效,无法满足我们测试这一假设的需求。

恰好,过去我有很多构建梯度提升树及其变体的经验。所以 Effective Action Boost 实际上是这一假设测试的副产品。那个时候,我也在一些数据科学挑战中竞争,比如我参与了 KDD Cup,后来 Kaggle 变得更大了,所以我认为也许这对他人有用。

我的一个朋友说服我尝试为其做一个 Python 绑定,事实证明这是一个很好的决定,通过 Python 绑定,我们还有了 R 绑定。

随后,它开始变得有趣了,人们开始贡献不同的观点,如可视化等。所以我们开始更多地推动构建分布式支持,确保它在任何平台上都能正常工作等等。即使在那个时候,当我与导师 Carlos 交谈时,他说他从未预料到它会取得如此成功。

实际上,我之所以推动梯度提升树,有趣的是,当时他也持不同意见。他认为也许我们应该选择核函数机器。事实证明,我们在某种程度上都是错误的,深度神经网络才是最强的。但至少梯度提升树的方向带来了一些成果。

Alessio:

当涉及到这些改进时,我总是好奇设计过程是什么样的,以及多少是与你一起工作的其他人合作的结果,还是试图在学术领域中,通常是以论文为导向的研究驱动的结果。

陈天奇:

我会说,那时候的 Extra Boost 改进更多是我自己尝试去解决的问题。

在此之前,我曾经在矩阵分解方面的一些其他库上工作过,那是我第一次的开源经验。没有人知道它,因为如果你搜索 “SVD 特征包”,你可能会找到某个 SVN 仓库。

但实际上,它被用于一些推荐系统包。我试图将之前的一些经验应用在这里,并将它们结合起来。后来的项目,如 MXNet 和 TVM,在更大程度上是协作性的。

当然,Extra Boost 已经变得更大了。当我们启动该项目时,我和一些人一起工作,惊讶于看到有人加入。Michael 曾是一名律师,现在也在 AI 领域工作,他贡献了可视化方面的内容。现在我们社区的人们贡献了不同的东西。

即使是今天,Extra Boost 仍然是一个由共同维护者推动的社区项目。因此,这绝对是一项合作的工作,不断地提高一些内容,以便更好地为我们的社区服务。

2、「基于树的模型」VS「深度学习模型」

Swyx:

我想说,我希望能在某个时候讨论一下基于树的 AI 或机器学习与深度学习之间的比较,因为我认为有很多人对将这两个领域融合在一起非常感兴趣。

陈天奇:

实际上,我们测试的假设是部分错误的,因为我们想要测试的假设现在是,你能否在图像分类任务中运行基于树的模型,而在今天,深度学习对于这一领域显然是无与伦比的。

但如果你试图在表格数据上运行它,你仍然会发现大多数人选择基于树的模型。

这是有原因的,因为当你看基于树的模型时,决策边界自然是你在看的规则,它们还有很好的特性,比如对输入尺度的不可知性以及自动组合特征的能力。

我知道,有些人尝试构建适用于表格数据的神经网络模型,我有时也会关注它们。我觉得在建模领域保持一些多样性是好的。

实际上,在我们构建 TVM 时,我们为程序构建了成本模型,实际上我们也在其中使用了 XGBoost。我仍然认为基于树的模型仍然会相当相关,因为首先,很容易让它在开箱即用的情况下工作。此外,你将能够获得一些互操作性和单调性控制等。

有时候,我也会不断回来思考,我们是否可以在这些模型之上构建一些潜在的改进。毫无疑问,我觉得这是一个未来可能具有潜力的领域。

Swyx:

目前有哪些项目可以被称为有前途,比如试图融合这两个方向?

陈天奇:

我认为,有一些项目尝试将变换器类型的模型应用于表格数据。

我不记得具体是哪些项目,但即使在今天,如果你看看人们正在使用的东西,基于树的模型仍然是他们的工具之一。

所以我认为最终可能不是替代,而是一种可以调用的模型集合,完美。

3、「TVM、ONNX 概述」

Alessio:

接下来,在构建 XGBoost 大约三年之后,你构建了一个名为 TVM 的东西,它现在是一个非常流行的模型编译框架。

让我们谈谈,这差不多是在 ONNX 出现后的时间。也许你可以为我们提供一些关于这两者如何一起工作的概述。因为它有点像模型,然后转到 ONNX,然后再到 TVM。但我认为很多人不太理解其中的细微差别。你能给出一些背景故事吗?

陈天奇:

实际上,这是一个相当古老的历史。

在 XGBoost 之前,我在深度学习领域工作了两年或三年。在我的硕士学位期间,我的论文集中在将卷积限制玻尔兹曼机应用于 ImageNet 分类上,那是在 AlexNet 时代之前。

那会,我必须手工制作 NVIDIA CUDA 内核,我记得是在 GTX 2070 卡上。我有一块 2070 的卡。我花了大约六个月的时间才让一个模型工作。最终,那个模型效果不太好,我们应该选择一个更好的模型。那是一个非常古早时期的历史,真的让我进入了这个深度学习领域。

当然,最终我们发现它行不通。所以在我的硕士学位期间,我最终在推荐系统上工作,这使我发表了一篇论文,然后我申请并获得了博士学位。但我总是想回到深度学习领域工作。

因此,在 XGBoost 之后,我开始与一些人一起在这个名为 MXNet 的项目上工作。那时,框架如 Caffe、Caffe2 和 PyTorch 还没有出现。我们努力优化在 GPU 上的性能。在那时,我发现即使在 NVIDIA GPU 上也很困难,这花了我六个月的时间。

然后,看到在不同的硬件上优化代码是多么困难,这真是令人惊讶。因此,这让我思考,我们能否构建一些更通用和自动化的东西?这样我就不需要一个完整的团队来构建这些框架。

所以这是我开始在 TVM 上工作的动机。在支持我们感兴趣的平台上,实际上对于深度学习模型来说,需要非常少的机器学习工程方面的内容。我认为这更多是出于想要获得乐趣的态度。

我喜欢写代码,我非常喜欢编写 CUDA 代码。当然,能够生成 CUDA 代码很酷,对吧?但现在,在能够生成 CUDA 代码之后,好吧,顺便说一下,你也可以在其他平台上做到,这难道不是令人惊奇吗?所以这更多是那种态度让我开始的。

我还看到,现在我们看不同的研究人员,我自己更像是一个问题解决者类型。所以我喜欢看问题,然后说,好的,我们需要什么样的工具来解决这个问题?所以不管它们是否解决问题,肯定会去尝试。

我也看到,现在这是一个普遍的趋势。如果你想要解决机器学习问题,不再仅限于算法层面,你需要同时从数据和系统的角度来解决。这整个机器学习系统领域,我认为它正在兴起。现在已经有一个关于它的会议。看到越来越多的人开始研究这个领域,确保在这里继续创新。

4、「MLC 深度研究」

Alessio:

接下来,在构建 XGBoost 大约三年之后,你构建了一个名为 TVM 的东西,它现在是一个非常流行的模型编译框架。

让我们谈谈,这差不多是在 ONNX 出现后的时间。也许你可以为我们提供一些关于这两者如何一起工作的概述。因为它有点像模型,然后转到 ONNX,然后再到 TVM。但我认为很多人不太理解其中的细微差别。你能给出一些背景故事吗?

Swyx:

您在机器学习系统(MLSys)领域的最新创业项目,是今年四月将 MLC LLM 引入手机领域。我已经在我的手机上使用了它,效果很好。我正在运行 Lama 2,Vicuña 版本。

我不清楚你们提供了哪些其他模型,但也许你可以描述一下你们研究 MLC 的过程。我不知道这与你在卡内基梅隆大学的工作有何关联,这是工作的一种延伸吗?

陈天奇:

我认为,这更像是我们在机器学习编译领域想要的集中努力。

这和我们在 TVM 中建立的东西有关。我们建立 TVM 是在五年前,对吧?发生了很多事情。我们构建了端到端的机器学习编译器。

从中我们也吸取了很多教训。因此,我们正在构建名为 TVM Unity 的二代产品。它使我们能够让机器学习工程师能够快速应用新模型,并为它们构建优化。

而 MLCLLM 则类似于 MLC。它更像是一个垂直驱动的组织,我们会制作教程并构建项目,如 LLM 方案。你可以把机器学习编译技术应用起来,带来一些有趣的东西。

它可以在手机上运行,这真的很酷。但我们的目标不至于此,而是使其能够普遍部署。我们已经在 Apple M2 到 Mac 上跑了,而且运行的是 170 亿参数模型。

实际上,在单个批次推理中,最近在 CUDA 上,我认为在 4 位推理上已经获得了最佳性能。

在 AMD 卡上,我们刚刚取得了一项成果。在单个批次推理中,实际上我们可以在最新的 AMD GPU 上运行。这是一张消费级显卡。它可以达到大约 80% 的 4090——这是 NVIDIA 目前最好的消费级显卡。

虽然它还没有达到同等水平,但考虑到多样性以及你可以在该显卡上获得的先前性能,未来你可以用这种技术做的事情真的很令人惊讶。

Swyx:

有一件事让我有些困惑,大多数这些模型都是基于 PyTorch 的,但你们在 TVM 内部运行这些模型。我不知道是否需要进行任何根本性的改变,或者这基本上就是 TVM 的基本设计?

陈天奇:

事实上,TVM 具有称为 TVM 脚本的程序表示,其中包含了计算图和操作表示。

最初,我们确实需要花一些精力将这些模型引入 TVM 支持的程序表示中。通常有各种方式,具体取决于您查看的模型类型。

例如,对于视觉模型和稳定扩散模型,通常可以通过追踪将 PyTorch 模型引入 TVM。该部分仍在稳固中,以便我们能够引入更多模型。

在语言模型任务中,我们所做的是直接构建一些模型构造函数,并试图直接从 Hugging Face 模型进行映射。目标是,如果您有一个 Hugging Face 配置,我们将能够将其引入并对其进行优化。

因此,有关模型编译的有趣之处在于,您的优化不仅仅发生在软件语言层面,对吧?例如,如果您正在编写 PyTorch 代码,您只需尝试在源代码级别使用更好的融合运算符。Torch 编译可能会在其中帮助您做一些事情。

在大多数模型编译中,优化不仅仅发生在初始阶段,还会在中间应用通用转换,同样通过 Python API。因此,您可以对其中一些进行微调。这部分的优化对于提高性能和环境可移植性非常有帮助。

另外,我们还具有通用部署功能。因此,如果将 ML 程序转换为 TVM 脚本格式,其中包含接受张量和输出张量的函数,我们将能够编译它。因此,他们将能够在 TVM 支持的任何语言运行时中加载函数。您可以在 JavaScript 中加载它,那将是一个可以接受张量并输出张量的 JavaScript 函数。如果您加载 Python,当然还有 C++ 和 Java。

总的来说,我们的目标是将 ML 模型带到人们关心的语言,并在他们喜欢的平台上运行。

Swyx:

令人印象深刻的是,我之前曾与许多编译器领域的人交谈过,但您并没有传统的编译器背景。您正在创造一门称作机器学习编译(MLC)的全新学科。

您认为,未来这将成为一个更大的领域吗?

陈天奇:

首先,我确实也与从事编译工作的人合作。所以我们还从该领域的许多早期创新中汲取了灵感。

例如,TVM 最初从 Halide 中汲取了很多灵感,那是一个图像处理编译器。当然,从那时起,我们已经取得了很多进展,现在专注于与机器学习相关的编译。

如果您查看一些我们的会议出版物,您会发现,机器学习编译已经是一个子领域。如果您查看机器学习会议、MLC 会议以及系统会议的论文,每年都会有围绕机器学习编译的论文。在编译器会议 CGO 中,还有一个称为 C4ML 的研讨会,也在努力关注这个领域。

所以它肯定已经引起关注,正在成为一个领域。我不会声称我发明了这个领域,但我肯定在这个领域与许多人合作。当然,我尝试提出一个观点,尝试从编译器优化中学习,同时将机器学习和系统的知识结合进来。

Alessio:

在前几集播客中,我们请到了 George Hotz,他对 AMD 和他们的软件有很多看法。当您考虑 TVM 时,您是否仍然在某种程度上受到底层内核性能的限制?

如果你的目标是 CUDA 运行时,你仍然可以获得更好的性能,不管 TVM 是否能帮助你达到目标,但你不会在意那个级别,对吧?

陈天奇:

首先,有底层运行时,如 CUDA 运行时,对于 NVIDIA 来说,很多情况下是来自于它们的库,如 Cutlass、CUDN 等等。而且对于专门的工作负载,实际上您可以对其进行优化。因为很多情况下您会发现,如果您进行基准测试,这非常有趣。例如,两年前,如果您尝试基准测试 ResNet,通常情况下,NVIDIA 库给出了最佳性能,很难超越它们。

但是一旦您开始将模型更改为某种变体,不仅限于传统的 ImageNet 检测,还可以用于潜在检测等等,就会有一些优化的空间,因为有时人们会在基准测试上过度拟合。这些人会去优化东西,对吧?人们在基准测试上进行了过多的优化。所以这是最大的障碍,获得低级别内核库是能够做的最好的事情。从这个角度来看,TVM 的目标实际上是我们试图在通用层面上同时利用库。在这方面,我们不受他们提供的库的限制。

这就是为什么我们将能够在 Apple M2 或 WebGPU 上运行,因为我们在某种程度上是自动生成库。这使得支持支持不太好的硬件更加容易,例如,WebGPU 就是一个例子。从运行时角度来看,AMD 在其 Vulkan 驱动程序之前并不受支持。

最近,它们的情况变得很好。但即使在那之前,通过称为 Vulkan 的 GPU 图形后端,我们也可以支持 AMD。虽然性能不如 CUDA,但它可以为你提供良好的可移植性。

5、使用 int4 quantization 进行语言模型推理

Alessio:

我知道,我们还要谈论其他关于 MLC 的内容,比如 WebLLM,但我想先谈谈您正在进行的优化。有四个核心要点,对吧?您能否简单解释一下。

陈天奇:

内核融合,意味着,您知道,如果您有一个运算符,如卷积,或者在变换器的情况下是 MOP,后面跟着其他运算符,对吧?您不想要启动两个 GPU 内核。您希望能够以智能的方式将它们放在一起。

而内存规划更多地涉及到,您知道,嘿,如果您运行 Python 代码,每次生成新数组时,您实际上都在分配新的内存片段,对吧?当然,PyTorch 和其他框架会为您优化。因此,在幕后有一个智能内存分配器。但实际上,在许多情况下,事先静态分配和规划所有内容会更好。

这就是编译器可以介入的地方。首先,实际上对于语言模型来说更加困难,因为形状是动态的。因此,您需要能够做我们称之为符号形状跟踪。我们有一个符号变量,告诉您第一个张量的形状是 n 乘以 12。第三个张量的形状也是 n 乘以 2 乘以 12。虽然您不知道 n 是多少,但您将能够知道这种关系,并能够用它来推理融合和其他决策。

除此之外,我认为循环变换非常重要。实际上,这是非传统的。最初,如果您简单地编写代码并希望获得性能,是非常困难的。例如,如果您编写一个矩阵乘法器,您可以做的最简单的事情是使用 for i、j、k,c[i][j] += a[i][k] * b[k][j]。但是这段代码比您可以获得的最佳代码慢 100 倍。

因此,我们进行了许多转换,例如,能够采用原始代码,将事物放入共享内存中,并利用张量调用、内存复制等等。实际上,所有这些事情,我们也意识到,不能都做到。因此,我们还将 ML 编译框架作为 Python 软件包,以便人们可以以更透明的方式不断改进工程的这一部分。

我们发现,这对于我们能够在一些新模型上迅速获得良好性能非常有用。例如,当 Lamato 发布时,我们能够查看整体,发现瓶颈,并对其进行优化。

Alessio:

那么,第四个就是权重量化,每个人都想知道这方面的情况。只是为了让人们了解内存节省的情况,如果使用 FB32,每个参数需要四个字节。Int8 需要一个字节。所以您可以真正减小内存占用。

在这方面,有哪些权衡?您如何确定正确的目标?还有精度方面的权衡?

陈天奇:

目前,大多数人在语言模型上实际上大多使用 int4,这实际上大大减小了内存占用。

实际上,最近我们开始考虑,至少在 MOC 中,我们不希望对我们想要引入的量化类型持有强烈观点,因为该领域有如此多的研究人员。因此,我们可以允许开发人员自定义他们想要的量化,但我们仍然为他们带来了最佳代码。因此,我们正在进行一项名为 “自带量化” 的工作。实际上,希望 MOC 将能够支持更多的量化格式。而且肯定有一个开放的领域正在被探索。您能否带来更多的稀疏性?能否尽量量化激活等等?这将是一个在相当长一段时间内都会相关的领域。

Swyx:

您提到了一些让我想再确认一下的事情,即大多数人在语言模型中实际上大多使用 int4。对我来说,这实际上并不明显。您是在谈论 GGML 类型的人,还是研究模型的研究人员也在使用 int4?

陈天奇:

抱歉,我主要在谈论的是推理,不是训练,对吧?因此,在进行训练时,当然,int4 是更难的,对吧?

也许,在某种程度上可以在推理中采用一些混合精度的方法,我认为,在很多情况下,int4 是非常适用的。实际上,这确实在很大程度上降低了内存开销,等等。

6、比较:MLC 与其他模型优化项目

Alessio:

好的,很棒。让我们稍微谈一下可能是 GGML,然后是 Mojo。人们应该如何考虑 MLC?所有这些因素是如何相互作用的?

我认为,GGML 专注于模型级别的重新实现和改进。Mojo 是一种语言,非常强大。您更多地处于编译器层面。您们一起工作吗?人们是否在它们之间进行选择?

陈天奇:

我认为,在这种情况下,我认为可以说生态系统变得非常丰富,有很多不同的方式。在我们的情况下,GGML 更像是你正在用 C 从头开始实现某些东西,对吧?

这使您能够自定义每个特定硬件后端。但是然后您将需要编写 CUDA 内核,并从 AMD 等优化。从这个意义上讲,工程化的努力会更加广泛。

至于 Mojo,我还没有详细查看过,但我相信,其背后也会有机器学习编译技术。所以,可以说,它在其中有一个有趣的位置。

至于 MLC,我们的情况是,我们不想对人们想要开发、部署等的方式、位置、语言等发表意见。我们还意识到实际上有两个阶段。我们希望能够开发和优化您的模型。

通过优化,我的意思是,真正引入最佳的 CUDA 内核,并在其中进行一些机器学习工程。然后还有一个阶段,您想将其作为应用程序的一部分进行部署。所以,如果您看看这个领域,您会发现 GGML 更像是,我要在 C 语言中开发和优化。而 Mojo 则是您要在 Mojo 中开发和优化,对吧?然后在 Mojo 中进行部署。

事实上,这是他们想要推动的哲学。在机器学习方面,我们发现,实际上,如果您想要开发模型,机器学习社区喜欢 Python。

Python 是您应该专注的语言。因此,在 MLC 的情况下,我们真的希望能够实现不仅仅可以在 Python 中定义模型,这是非常常见的,还可以在 Python 中进行 ML 优化,例如:工程优化,CUDA 内核优化,内存规划等等。

但是当您部署时,我们意识到人们想要一些通用的风格。如果您是 Web 开发人员,您可能需要 JavaScript,对吧?如果您可能是嵌入式系统人员,您可能更喜欢 C++、C 或 Rust。人们有时在许多情况下确实喜欢 Python。因此,在 MLC 的情况下,我们真的希望有这样的愿景,即在 Python 中进行优化、构建通用的优化,然后在人们喜欢的环境中普遍部署它。

Swyx:

这是一个很好的观点和比较。

我想确保我们涵盖的另一件事是,我认为您是这一新兴学术领域的代表之一,也非常注重您的交付成果。

显然,您把 XGBoost 当作一个产品,对吧?然后现在您发布了一个 iPhone 应用程序,您对此有什么想法?

陈天奇:

我认为有不同的方式可以产生影响,对吧?

肯定有学者在撰写论文,为人们建立见解,以便人们可以在其之上构建产品。在我的情况下,我认为我正在从事的特定领域,机器学习系统,我觉得我们需要让人们能够拿到手,以便我们真正看到问题,对吧?并展示我们可以解决问题。这是一种产生影响的不同方式。还有一些学者正在做类似的事情。

例如,如果您看看柏克莱的一些人,对吧?几年来,他们会推出一些重大的开源项目。当然,我认为有不同的方式来产生影响是一个健康的生态系统,我觉得能够进行不同方式的影响是非常有趣的,而且我觉得能够进行开源并与开源社区合作是非常有意义的,因为在构建我们的研究时,我们有一个真正的问题要解决。

实际上,这些研究成果会汇聚在一起,人们将能够利用它们。我们还开始看到一些有趣的研究挑战,否则我们可能不会看到,对吧?如果您只是试图做一个原型等。所以我觉得这是一个有趣的产生影响、做出贡献的方式。

7、在线运行大语言模型

Swyx:

是的,您肯定在这方面产生了很大的影响。并且拥有以前发布 Mac 应用程序的经验,苹果应用商店是个大挑战。因此,我们绝对想要涵盖的一件事是在浏览器中运行。

您在浏览器中运行了一个拥有 700 亿参数的模型。没错。您可以谈谈这是如何实现的吗?

陈天奇:

首先,您需要一台 MacBook,最新的那款,比如 M2 Max,因为您需要足够大的内存来覆盖它。

对于一个 700 亿的模型,需要大约 50g 的 RAM。所以 M2 Max,上一版,就可以运行它,对吧?它还利用了机器学习编译。

实际上,我们所做的与其在 iPhone 上运行,还是在服务器云 GPU 上运行,还是在 AMD 上运行,还是在 MacBook 上运行,我们都会经历相同的 MOC 管道。当然,在某些情况下,我们可能会为每一种进行一些自定义迭代。然后它在浏览器运行时,通过 WebLM 这个软件包。

我们所做的,实际上就是将原始模型编译为我们称之为 WebGPU。然后 WebLM 将能够选择它。而 WebGPU 是主要浏览器正在发布的最新 GPU 技术。因此,您已经可以在 Chrome 中找到它了。它允许您从浏览器中访问本机 GPU。然后实际上,该语言模型只是通过那里调用 WebGPU 内核。

当 LATMAR2 问世时,最初,我们提出了关于在 MacBook 上是否可以运行 700 亿的问题。首先,我们实际上...Jin Lu 是推动这一切的工程师,他在 MacBook 上运行了 700 亿版本。

在 MLC 中,您将能够...它通过 metal accelerator 运行。因此实际上,您使用金属编程 metal accelerator 语言来实现 GPU 加速。所以我们发现,好的,它在 MacBook 上可以运行。

然后,我们扪心自问,我们有一个 WebGPU 后端。为什么不试一下呢?所以我们就试了一下。真的很神奇,看到一切都能正常运行。实际上,在这种情况下,它运行得非常顺利。因此,我认为在这种情况下,已经有一些有趣的用例,因为每个人都有一个浏览器。

您不需要安装任何东西。我认为在浏览器上运行 700 亿的模型还不太合理,因为您需要能够下载权重等等。但我认为我们正在接近这一点。

实际上,最强大的模型将能够在消费者设备上运行。这真的很惊人。并且在很多情况下,可能会有一些用例。例如,如果我要构建一个聊天机器人,我与它交谈并回答问题,也许某些组件,比如语音到文本,可以在客户端上运行。因此,在客户端和服务器上运行的混合模式中可能有很多可能性。

Alessio:

这些浏览器模型是否具有应用程序连接的方法?所以,如果我使用,比如,您可以使用 OpenAI,也可以使用本地模型。

陈天奇:

当然,现在我们正在构建一个名为 WebILM 的 NPM 软件包。

如果要将其嵌入到您的 Web 应用程序中,您将能够直接依赖于 WebILM,然后您将能够使用它。

我们还有一个与 OpenAI 兼容的 REST API。所以 REST API,我认为现在实际上是在本机后端上运行的。如果 CUDA 服务器在本机后端上,运行更快。但我们还有一个 WebGPU 版本,您可以去运行。

所以是的,我们确实希望能够更轻松地与现有应用程序集成。当然,OpenAI API 肯定是一种方法,这很棒。

Swyx:

实际上,我不知道有一个 NPM 软件包,这使得尝试和使用变得非常容易。我实际上想...我不确定我们会深入研究多少,但是从用户获取模型运行时优化的难度来看,我认为在 API 的概念方面,已经很多了。

Alessio:

我想,一个可能的疑问是关于时间顺序。因为据我所知,Chrome 在您发布 WebILM 的同时发布了 WebGPU。好的,是的。所以您与 Chrome 有秘密聊天吗?

陈天奇:

好消息是,Chrome 在努力进行早期发布。因此,尽管官方的 Chrome WebGPU 发布与 WebILM 同时进行,实际上,您已经可以在 Chrome 中尝试 WebGPU 技术。有一个不稳定的版本叫做 Canary。

我认为,早在两年前,就有了 WebGPU 版本。当然,它变得越来越好。所以两年前,我们确实开始看到它变得成熟,并且性能也在不断提升。因此,我们在两年前就有一个基于 TVM 的 WebGPU 后端。当然,在那个时候,还没有语言模型。它在较不那么有趣但仍然很有趣的模型上运行。

今年,我们真的开始看到它越来越成熟,性能也在不断提升。因此,我们在更大的规模上带入了与语言模型兼容的运行时。

Swyx:

我认为,您同意最难的部分是模型下载。关于一次性模型下载和所有可能使用此 API 的应用程序之间的共享,是否有相关的讨论?这是个很好的问题。

陈天奇:

这是个很好的问题,我认为它已经在某种程度上得到支持。当我们下载模型时,WebILM 会将其缓存在特殊的 Chrome 缓存中。

因此,如果不同的 Web 应用程序使用相同的 WebILM JavaScript 软件包,您就不需要重新下载模型。所以已经有一些内容了。但是当然,您至少需要下载模型一次才能使用它。

Swyx:

好。还有一个问题是,通常来说,以及可以在浏览器中运行的性能来说,从您对模型的优化中获得什么?

陈天奇:

这取决于您对 “运行” 如何定义,对吧?从一方面来说,下载 MOC,就像您下载它一样,您可以在笔记本电脑上运行它,但是还有不同的决策,对吧?

如果您试图提供更大的用户请求,如果请求发生变化,如果硬件的可用性发生变化,现在由于每个人都试图使用现有的硬件,因此很难获得最新的硬件,特别遗憾。

所以我认为,当运行的定义发生变化时,会有更多问题。而且在很多情况下,不仅仅是关于运行模型,还涉及到围绕模型的问题的解决。例如,如何管理您的模型位置,如何确保将模型更有效地靠近执行环境,等等。

因此,在这方面确实有许多工程挑战,我们希望能够解决,是的。而且,如果您考虑未来,我肯定觉得现在的技术,鉴于我们现在拥有的技术和硬件可用性,我们需要利用所有可能的硬件。这将包括降低成本的机制,将某些东西带到边缘和云中,以更自然的方式。因此,我认为我们仍然处于一个非常早期的阶段,但已经可以看到许多有趣的进展。

Alessio:

是的,这很棒。我喜欢,我不知道我们会深入探讨多少,但是您如何从最终用户那里抽象出所有这些内容?你知道他们不需要知道您运行了哪些 GPU,您在云上运行了哪些云。您将所有这些都摆在一边。作为工程挑战,这是怎么样的?

陈天奇:

我认为,在某种程度上,您将需要支持所有可能的硬件后端。

一方面,如果您查看媒体库,您会发现非常令人惊讶,但也不太令人惊讶,大多数最新的库在最新的 GPU 上运行得很好。但是云中还有其他 GPU。所以当然,能够具有专业知识并能够进行模型优化是一回事。

在支持多种硬件的基础设施上,还有一些方面,比如规模化的东西。如何确保用户能够找到他们最想要的东西。如果您现在看看像 NVIDIA,甚至 Google 的一些云 GPU 实例,很可能是大家在用的东西。所以在很大程度上,我认为我们还处于一个时间早期的阶段,但确实有一些有趣的进展。并且随着时间的推移,我认为会有更多的工具出现,使其更加容易,所以我认为我们处于一个变革的时期。

Swyx:

好的。我认为我们已经讨论了这么多内容,但是是否还有什么是您认为非常重要,但是我们尚未涵盖的?

陈天奇:

我认为我们已经涵盖了很多内容,对吧?

我想说的是,事实上,这确实是一个非常令人兴奋的领域。我认为我个人是一个很有幸的人,能够参与这样的事情。而且我认为这是一个非常开放的领域,我认为这是非常重要的。很多东西都被开源,所有东西都在公开发布。

并且,如果您想获得更多信息,您可以查看 GitHub,查看 YouTube 上的一些教程,对吧?或者查看 Twitter 上的一些消息,因此我认为这是一个非常开放的领域,有很多机会让每个人参与进来。

只需尝试一下,看看是否有一些想法,是否有一些东西,您会发现您会有很多惊喜。所以我认为这是一个非常美好的时代。

本文来源:硬 AI

附:

【MLC 项目页面】https://mlc.ai/mlc-llm/docs/