Has the AI 2.0 Networking Era Begun? "Super Ethernet Representative" Arista Beats Earnings Expectations, Shares Jump 6% Overnight

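Ethernet is becoming one of the most critical infrastructure components in AI data centers, and Arista's decision to raise its guidance is being read as a sign of growing confidence in the outlook.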

Author: 李笑寅

Source: 硬 AI

Buoyed by demand for AI training, computer networking company Arista reported first-quarter results and guidance that both beat expectations.

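According to the report, Arista's first-quarter revenue rose 16.3% year over year to $1.571 billion, beating expectations, while first-quarter net income jumped 46% year over year to $637.7 million.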
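As a quick sanity check on these figures, the year-ago baselines can be backed out from the reported growth rates by simple division. The sketch below uses only the numbers quoted above; the function name `implied_prior` is illustrative, and the derived values are approximate because the reported growth rates are rounded.

```python
def implied_prior(current: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago value from a current value and its year-over-year growth."""
    return current / (1 + yoy_growth_pct / 100)

# Figures quoted in the article, in millions of US dollars.
revenue_q1 = 1571.0
net_income_q1 = 637.7

prior_revenue = implied_prior(revenue_q1, 16.3)
prior_net_income = implied_prior(net_income_q1, 46.0)
net_margin = net_income_q1 / revenue_q1

print(f"Implied year-ago revenue:    ${prior_revenue:,.0f}M")
print(f"Implied year-ago net income: ${prior_net_income:,.0f}M")
print(f"Q1 net margin:               {net_margin:.1%}")
```

On these inputs, the implied year-ago revenue is roughly $1.35 billion, the implied year-ago net income is roughly $437 million, and the first-quarter net margin is a little over 40%.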

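On guidance, the company raised its forecast for 2024 revenue growth from 10%-12% to 12%-14%. It expects second-quarter revenue of $1.62 billion to $1.65 billion, at or above the Wall Street consensus of $1.62 billion.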

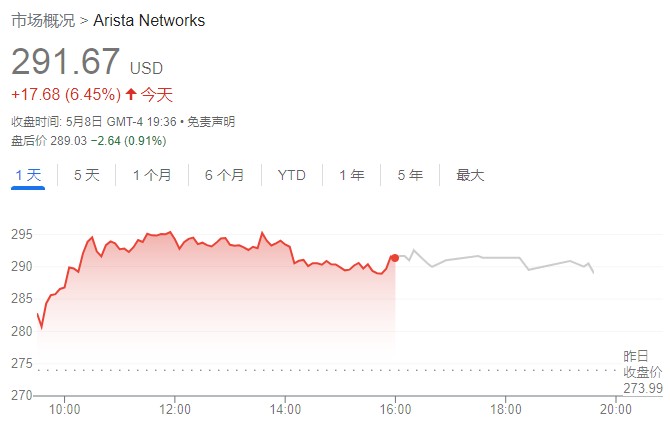

Overnight, the company's shares rose more than 6% to $291.67. The stock has gained nearly 26% so far this year.

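Analysts noted that Arista does not usually raise its full-year guidance as early as the end of the first quarter, which is why this increase is being read as a sign of stronger confidence in the outlook.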

In addition, having completed its previous $2 billion share repurchase program, Arista announced a new $1.2 billion buyback.

Has the AI cluster era arrived? Ethernet is suddenly in demand

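Arista was incorporated in Delaware in October 2004 and went public in 2014. The company is an industry leader in data-driven, client-to-cloud networking for large data center, campus, and routing environments.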

As a business-to-business maker of network switches and routers, Arista mainly serves cloud service providers, including Microsoft and Meta.

Arista said in its earnings report that the high performance demands of training large AI models have boosted cloud providers' demand for its hardware.

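Jayshree Ullal, Arista's chairperson and chief executive officer, said on the earnings call that Ethernet is becoming one of the most critical infrastructure components in AI data centers.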

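Ullal explained:

“AI applications cannot work in isolation; they need seamless communication among the compute nodes.”

“And as generative AI training evolves, it now requires many thousands of individual iterations. Any slowdown caused by network congestion can therefore severely affect application performance, creating inefficient wait states and degrading processor performance by 30% or more.”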

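Put simply, AI workloads cannot tolerate network delays, because a job completes only after every flow has been successfully delivered to the GPU cluster. A fault or delay on even a single link limits the efficiency of the entire AI job.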

Shortening training time therefore requires building a scale-out AI network that keeps GPU utilization high.
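The point that a job finishes only when the slowest flow has arrived can be illustrated with a toy model. The sketch below is not Arista's method or a real network simulator: it assumes a synchronous training step in which compute is followed by a communication phase that ends when the last flow is delivered, and all timings (700 ms of compute, 100 ms per healthy flow, one flow delayed to 400 ms) are hypothetical values chosen for illustration.

```python
def step_time_ms(compute_ms: int, flow_times_ms: list[int]) -> int:
    """A synchronous step ends only when the slowest flow has been delivered."""
    return compute_ms + max(flow_times_ms)

def gpu_utilization(compute_ms: int, flow_times_ms: list[int]) -> float:
    """Fraction of the step that the GPUs spend computing rather than waiting."""
    return compute_ms / step_time_ms(compute_ms, flow_times_ms)

# Hypothetical timings, in milliseconds.
compute_ms = 700
healthy = [100] * 1000          # every flow arrives on time
congested = [100] * 999 + [400]  # a single congested link delays one flow

for label, flows in (("healthy", healthy), ("one slow link", congested)):
    print(
        f"{label:>14}: step = {step_time_ms(compute_ms, flows)} ms, "
        f"GPU utilization = {gpu_utilization(compute_ms, flows):.1%}"
    )

slowdown = step_time_ms(compute_ms, congested) / step_time_ms(compute_ms, healthy)
print(f"Slowdown from one delayed flow out of {len(congested)}: {slowdown:.3f}x")
```

In this toy model, a delay on one flow out of a thousand stretches every step from 800 ms to 1,100 ms, so all GPUs in the job idle while waiting on that one link.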

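Seamless communication is needed among compute nodes made up of back-end GPUs and AI accelerators, front-end nodes such as CPUs, and storage and IP WAN systems, which is making large-scale Ethernet the preferred choice for scale-out AI training workloads.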

Arista, dubbed the “Super Ethernet representative,” has a clear advantage in this field.

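Wallstreetcn (華爾街見聞) previously reported that, of five AI networking cluster bids in which it competed against InfiniBand, Arista won all four that went to Ethernet.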

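Analysts noted that for these four clusters Arista has moved from validation to pilot, connecting thousands of GPUs this year, and expects to connect 10,000 to 100,000 GPUs in production by 2025.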

Earlier, Arista built a 24,000-node GPU cluster for a cloud service customer based on its flagship 7800 AI Spine, which caused a stir in the industry.

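Beyond hardware, Arista has built a dedicated AI architecture (NetDL) that provides fabric-wide visibility and integrates network data with NIC data, enabling operators to identify misconfigured or misbehaving hosts and pinpoint performance bottlenecks.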