Now Carmakers Are Fighting Over Memory Chips Too

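On October 30, 2025, Nvidia CEO Jensen Huang met the chairmen of Samsung and Hyundai Motor in Seoul, a gathering that hinted at memory-chip supply concerns. As the global auto industry turns cars into intelligent computing platforms, demand for memory chips is surging. Meng Qingpeng, a vice president at Li Auto, has warned that the auto industry will face a memory-chip supply crisis in 2026, with fulfillment rates possibly below 50%. Storage capacity in smart vehicles is heading toward the terabyte level, and market demand is upgrading rapidly.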

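At 7:30 p.m. on October 30, 2025, at the "Kkanbu Chicken" restaurant in Samseong-dong, Gangnam-gu, Seoul, Jensen Huang, co-founder, president and CEO of Nvidia, ate fried chicken and drank beer with Samsung Chairman Lee Jae-yong and Hyundai Motor Group Chairman Chung Eui-sun.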

The story of the three tycoons in crew-neck T-shirts sampling everyday life went viral on social media worldwide.

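As the gathering broke up, Huang told the two chairmen: "Today is the best day of my life." Lee joked: "Happiness is actually simple: having some good food and a drink with good people, that is happiness."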

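In hindsight, Lee's remark may have been feel-good fluff, but the AI titan Huang's delight was probably genuine: he had presumably just secured another batch of memory chips from Samsung. Chung apparently offered no parting words; presumably Hyundai Motor Group will have no trouble securing its memory-chip supply.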

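The power-chip crisis triggered by Nexperia has not fully subsided, and now memory chips have taken center stage. Behind the three men's easygoing conversation lies a global scramble for memory chips, driven by explosive demand for AI computing power.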

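"In 2026, the auto industry will face an unprecedented memory-chip supply crisis, and the fulfillment rate may be below 50%." These remarks by Meng Qingpeng, vice president of supply chain at Li Auto, at the 2025 New Automotive Technology Cooperation Ecosystem Exchange Conference on December 6, drew the whole industry's attention to, and concern over, memory-chip supply.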

Why Do Cars Need Memory Chips?

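Today the global auto industry is making a historic leap from mechanical means of transport to "mobile intelligent computing platforms." This transformation is driving explosive growth in cars' need to generate, process, store and transmit data.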

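As BEV+Transformer large models become standard for advanced driver assistance and VLA end-to-end large models move into complex scenarios, the shortcomings of conventional in-vehicle memory in bandwidth, latency and power consumption are becoming increasingly apparent.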

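Current data show that a single high-end intelligent connected vehicle typically carries 64-256 GB of flash storage. As in-vehicle sensors become more precise, on-device large models spread and in-car entertainment features multiply, storage capacity in smart vehicles will move toward the terabyte level.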

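Under this trend, demand for in-vehicle memory products is rapidly shifting toward higher performance, larger capacity and lower cost. With all these factors combined, the auto industry's demand for memory chips has reached an unprecedented level.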

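But why would soaring demand for AI servers cause a memory shortage in the auto industry? Does a fulfillment rate below 50% mean carmakers' deliveries will halve next year? And how will per-vehicle costs and order deliveries be affected?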

One question follows another: how should Chinese carmakers respond to the emerging "chip grab"?

AI Kicks Off a New Memory-Chip Cycle

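Auto Business Review believes the memory-chip supply crisis facing the auto industry largely fits the pronounced 3-5 year cycle of the memory-chip industry. Since 2012, the industry has gone through three complete cycles, each lasting roughly 3-5 years.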

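2012-2015: demand rose on the spread of smartphones, then concentrated capacity expansion by manufacturers led to oversupply, completing the first cycle;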

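2016-2019: the rollout of 3D NAND technology and the adoption of DDR4 memory drove demand, after which new capacity coming online and slowing consumer-electronics demand pushed prices back down;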

2020-2024: the pandemic-driven "stay-at-home economy" lifted demand; as the pandemic eased, overcapacity and weak demand brought a downturn.

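In 2025, as OpenAI, under the "Stargate" banner, locked up as many as 900,000 DRAM wafers per month (roughly 40% of global output), and as SK hynix and Samsung Electronics posted record profits on the strength of their HBM businesses, a new cycle in the memory-chip industry was clearly arriving.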
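The two Stargate figures above imply a rough size for the global DRAM wafer pool. The sketch below is only a back-of-envelope check: it assumes both figures are accurate and refer to the same monthly output, and `implied_global_output` is a hypothetical helper written for illustration.

```python
# Back-of-envelope check of the Stargate figures quoted above.
# Assumption: 900,000 wafers/month is ~40% of the same global monthly DRAM output.
STARGATE_WAFERS_PER_MONTH = 900_000
STARGATE_SHARE_OF_GLOBAL = 0.40


def implied_global_output(locked_wafers: float, share: float) -> float:
    """Return the total monthly output implied by a locked quantity and its share."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return locked_wafers / share


global_wafers = implied_global_output(STARGATE_WAFERS_PER_MONTH, STARGATE_SHARE_OF_GLOBAL)
left_for_others = global_wafers - STARGATE_WAFERS_PER_MONTH

print(f"Implied global DRAM output: {global_wafers:,.0f} wafers/month")
print(f"Left for PCs, phones, cars, etc.: {left_for_others:,.0f} wafers/month")
```

On these assumptions the global pool works out to about 2.25 million wafers a month, leaving roughly 1.35 million for every other customer, cars included.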

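Memory chips are one of the largest segments of the semiconductor industry, with global sales of about US$165.5 billion in 2024, more than a quarter of the entire semiconductor market.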

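By form, memory chips fall into two types: volatile (memory, including DRAM and SRAM) and non-volatile (flash, including NAND, NOR and others). DRAM and NAND together account for more than 99% of the memory market.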

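Today the global DRAM market is monopolized by three companies, South Korea's Samsung and SK hynix and America's Micron (combined share above 95%), while the NAND market is dominated by five: Samsung, SK hynix, Kioxia, Micron and SanDisk (combined share above 92%).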

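The memory-chip industry is a textbook oligopoly that is both technology- and capital-intensive. The giants stay ahead through process shrinks, architectural innovation and R&D spending of billions or even tens of billions of dollars a year, spread costs over enormous capacity, and use "counter-cyclical investment" to drive out rivals, ultimately achieving a monopoly.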

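For a long time, Samsung, SK hynix and the other memory giants allocated their DRAM and NAND capacity in a balanced way according to demand forecasts for different applications, covering consumer electronics, data centers, industrial control, automotive and more.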

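Automotive electronics, an important but steadily growing segment, received a relatively limited share of memory capacity. Under this model, carmakers could usually obtain stable supply and prices through traditional channels such as Tier 1 suppliers.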

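In the past, strong memory demand came mainly from PCs, smartphones and traditional data centers. Now the AI infrastructure boom set off by Silicon Valley giants has upended the memory market's established structure and is rewriting its supply-and-demand logic.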

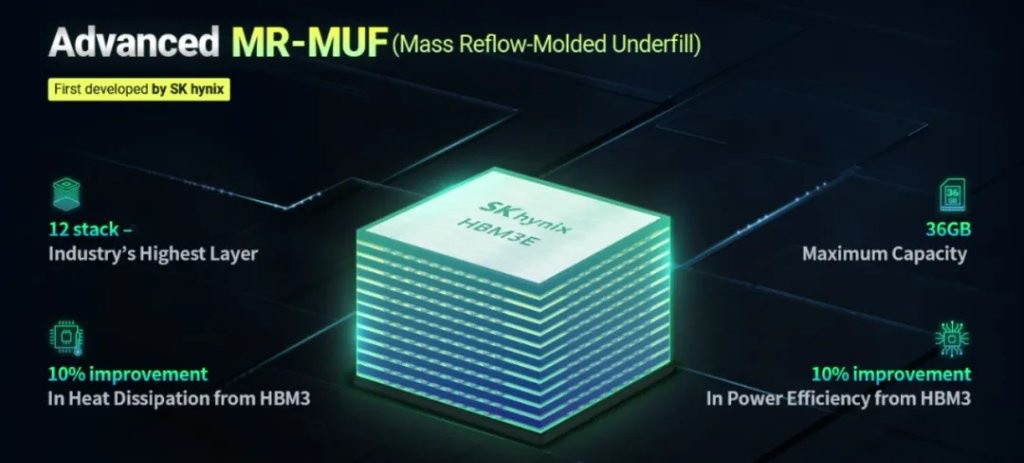

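AI servers, especially those used to train large models, have an explosive appetite for high-bandwidth, high-capacity memory. A single AI server carries 8-10 times as much DRAM as an ordinary server, and the market for high-bandwidth memory (HBM), essential for training large models, is expanding at a startling pace.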

Data from TrendForce show that total HBM consumption reached 6.47B Gb in 2024, up 237.2% year on year; in 2025 it is expected to reach 16.97B Gb, up 162.2%.
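A quick consistency check on the TrendForce numbers above: back out the 2023 base implied by the 2024 growth rate and recompute the 2025 growth from the two absolute figures. This is a reader's arithmetic sketch, not TrendForce's own method; `yoy_growth` is a hypothetical helper.

```python
# Consistency check on the TrendForce HBM figures quoted above.
# Units: billions of gigabits (B Gb), as given in the text.
HBM_2024 = 6.47
GROWTH_2024 = 2.372          # +237.2% year on year
HBM_2025_FORECAST = 16.97
GROWTH_2025_STATED = 1.622   # +162.2% year on year


def yoy_growth(previous: float, current: float) -> float:
    """Year-on-year growth as a fraction (1.0 means +100%)."""
    return current / previous - 1


# Back out the 2023 base implied by the 2024 growth rate.
implied_2023 = HBM_2024 / (1 + GROWTH_2024)
# Recompute 2025 growth from the two absolute figures.
implied_growth_2025 = yoy_growth(HBM_2024, HBM_2025_FORECAST)

print(f"Implied 2023 consumption: {implied_2023:.2f}B Gb")
print(f"Recomputed 2025 growth: {implied_growth_2025:.1%} (stated {GROWTH_2025_STATED:.1%})")
```

The recomputed 2025 growth comes out at about 162.3%, matching the stated 162.2% within the rounding of the published inputs, and the implied 2023 base is roughly 1.92B Gb.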

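More important, HBM's complex stacked structure consumes three times as much wafer capacity as DDR5, which means every additional unit of AI memory demand takes an even larger bite out of capacity that could otherwise go to other sectors.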
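A toy model shows why the threefold figure matters. It assumes "three times" refers to wafer capacity per bit of output relative to DDR5, and it uses made-up round numbers (a 100-wafer fab, one normalized bit unit per DDR5 wafer); both functions are hypothetical illustrations, not an industry model.

```python
# Toy model of the wafer squeeze described above.
# Assumption: HBM needs ~3x the wafers of DDR5 per bit of output.
HBM_WAFER_MULTIPLIER = 3.0


def wafers_for_hbm(hbm_bits: float, ddr5_bits_per_wafer: float) -> float:
    """Wafers consumed to produce `hbm_bits` of HBM."""
    return hbm_bits * HBM_WAFER_MULTIPLIER / ddr5_bits_per_wafer


def bits_left_for_others(total_wafers: float, hbm_bits: float,
                         ddr5_bits_per_wafer: float) -> float:
    """Conventional (DDR5-equivalent) bits left after HBM demand is served."""
    used = wafers_for_hbm(hbm_bits, ddr5_bits_per_wafer)
    if used > total_wafers:
        raise ValueError("HBM demand exceeds total wafer capacity")
    return (total_wafers - used) * ddr5_bits_per_wafer


# Hypothetical fab: 100 wafers, 1 normalized bit unit per DDR5 wafer.
for hbm_demand in (0, 10, 20, 30):
    left = bits_left_for_others(100, hbm_demand, 1.0)
    print(f"HBM demand {hbm_demand:>2} -> {left:.0f} units left for everyone else")
```

In this toy setup each extra unit of HBM removes three units of conventional output, which is the crowding-out mechanism the paragraph describes.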

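"We are witnessing a market structure never seen before," Morgan Stanley said in a research report. The core of this round of memory demand is an "arms race" among AI data centers and cloud providers; these customers are far less price-sensitive than traditional consumers and care more about securing enough compute infrastructure to support large language models and AI application development.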

In other words, the AI sector has shown a very high tolerance for memory-chip prices.

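Take Nvidia: its Rubin-architecture data-center GPUs, launching in 2026, have locked up a large share of HBM4 supply. According to its agreement with SK hynix, HBM4 is priced at US$560 per unit, more than 50% higher than the previous-generation HBM3E.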
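The two price figures above also bound the HBM3E price. A one-line sketch, assuming "more than 50%" is measured against the HBM3E unit price; the article does not say what one "unit" covers.

```python
# Implied ceiling on the HBM3E unit price, from the figures quoted above.
HBM4_UNIT_PRICE_USD = 560.0
MIN_PRICE_INCREASE = 0.50  # "more than 50%" over HBM3E

# If HBM4 = HBM3E * (1 + increase) with increase > 50%,
# then HBM3E < HBM4 / 1.5.
hbm3e_price_ceiling = HBM4_UNIT_PRICE_USD / (1 + MIN_PRICE_INCREASE)
print(f"Implied HBM3E unit price: below ${hbm3e_price_ceiling:.2f}")
```

On these figures HBM3E would have sold for under roughly $373 per unit.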

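Nvidia has also started buying Samsung's fifth-generation high-bandwidth memory, HBM3E, for its latest AI accelerator, the GB300. It is also understood that about half of the supply orders for Nvidia's 2026 SOCAMM2 memory modules are going to Samsung.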

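How much Nvidia pays Samsung is not known. But according to Morgan Stanley's latest research, server DRAM quotes for Q4 2025 have jumped nearly 70%, and NAND flash contract prices have risen 20%-30%.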

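Driven by high-end products such as 12-layer HBM3E and server DDR5, SK hynix's gross margin climbed to 57% in Q3 2025, and its operating profit topped 10 trillion won for the first time; Samsung, on a similar high-end product mix, grew operating profit 158.55% quarter on quarter over the same period.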

The Overlooked "Early Warning"

Clearly, this AI-driven memory cycle is, at its core, a "squeeze-out substitution" in which high-value sectors take capacity from traditional ones.

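The capacity of Samsung Electronics, SK hynix and Micron Technology was previously split among four main categories: servers, PCs, phones, and automotive and other sectors. Servers took 55%-60%, while the other three categories together took 30%-35%.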

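In 2025, the three makers' allocation has tilted sharply toward servers, raising their share to 70%, while the other categories shrank by 10%-15% in total, directly tightening memory supply for phones, PCs, consumer electronics and automobiles.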

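For Samsung Electronics, SK hynix and Micron, the choice is simple: AI-related HBM and DDR5 products earn several times the profit of conventional memory, so they naturally get priority for capital spending and leading-edge capacity.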

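In HBM, the most closely watched segment, the leaders are expanding especially aggressively. Leveraging its first-mover advantage, SK hynix doubled its HBM capacity in 2025, captured more than 60% of the global market and took nearly all of Nvidia's HBM orders.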

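To extend that lead, it is steadily adding capacity for Nvidia's HBM4 demand: its M15X fab at the Cheongju campus in North Chungcheong Province, South Korea, is slated to begin production in Q4 2025.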

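Samsung Electronics is close behind. After shifting its 1Y nm 16Gb DDR4 capacity to DDR5, its gross margin rose 12 percentage points above the DDR4 era. On December 2, Samsung announced that HBM4 had completed production readiness approval (PRA), and it is pushing hard to enter Nvidia's supply chain.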

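Micron plans to spend 1.5 trillion yen (about US$9.6 billion) on a new HBM-dedicated fab at its existing site in Hiroshima, Japan, with construction starting in May 2026 and volume shipments expected in 2028; output will go mainly to leading cloud and AI infrastructure players such as OpenAI, Google Cloud and Microsoft Azure.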

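Beginning early this year, Samsung was the first to signal DDR4 production cuts, asking customers to confirm orders by June. SK hynix likewise plans to cut its DDR4 capacity to below 20% of total output and to stop DDR4 production in April 2026. In NAND, manufacturers are also trimming consumer-grade capacity and shifting it to enterprise 3D QLC products.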

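Against this backdrop, a procurement head at a leading carmaker told Auto Business Review: "Actually, the signs were there six months ago (in May and June this year). Reading the situation then and positioning early took some nerve. Looking for solutions now is closing the barn door after the horse has bolted; the effect is limited."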

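He said: "Companies like Nvidia are building lots of large systems that need compute, and compute has to come with memory; the two are bundled. So the AI players moved early and grabbed a lot of capacity. After all, the AI race comes down to two things, compute and electricity, and they have to lock both down first."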

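As a direct result of these capacity shifts, high-end HBM and DDR5 still cannot keep up with the huge incremental demand from AI servers, while mid- and low-end DDR4 has fallen into severe shortage because output is being cut faster than demand is declining.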

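Auto Business Review has learned that this caused overall DRAM supply, already tight, to collapse outright, to the point of a "price inversion" in which the previous-generation DDR4 costs twice as much as the newer DDR5.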

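And DDR4 happens to meet the actual data-processing speed and capacity needs of today's in-vehicle systems. Its mature technology and supply chain give it more than 60% of the current automotive memory market.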

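This means that for high-end automotive-grade chips, carmakers must go head to head with the "AI infrastructure maniacs" to secure a "future" for their premium intelligent connected models, while for "entry-level capacity" such as DDR4, they face the Big Three's strategic retreat.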

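The outcome of a head-on fight is all but certain. For years, the auto industry has been unable to match tech companies on the prices it pays for memory chips, let alone on volume. The Big Three will inevitably favor the tech companies.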

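As for the strategic retreat, Auto Business Review does not rule out the possibility that memory makers are collectively engineering higher prices for products such as DDR4; after all, they lost a great deal in the past.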

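In fact, this is also why many carmakers overlooked the industry's "early warning": at the time, the enormous demand from AI servers had not yet fully materialized, so many carmakers assumed memory makers were deliberately "price gouging" and chose to wait and see.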

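At the 2025 WNAT-CES New Automotive Technology Cooperation Ecosystem Exchange Conference, many domestic automakers and Tier 1 suppliers said the memory shortage will be a certain risk in 2026, will not be resolved within the year, and will hit high-end and flagship models hardest.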

Many Roads, or So It Seems

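Auto Business Review has learned that, beyond the capacity a handful of companies such as Lenovo and Xiaomi locked in early, almost all of the rest of the Big Three's 2026 output has already found a buyer.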

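With the shortfall now baked in, Li Jiakui, a product manager at Beidou Zhilian Technology, said that based on current memory price increases, per-vehicle costs will rise by less than 500 yuan for mid- and low-end models, while high-end intelligent connected models, which need more memory, may see increases of around 1,000 yuan.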

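A well-known industry procurement manager also said this rally is unlikely to cool before Q3 next year unless the AI bubble bursts. And a carmaker's procurement head stated plainly that AI demand will certainly stay strong for the next two years, and the memory capacity allotted to the auto industry next year will certainly fall short.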

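So will the memory shortage cause widespread, prolonged delivery delays across the industry? The industry consensus is no, though some carmakers' production and deliveries may slip.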

The grounds for optimism do not come mainly from domestic substitution.

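Song Yang, chairman of iMotion, said several companies, including his own, are already testing domestic memory chips from suppliers such as CXMT, Longsys, ISSI and Jingcun.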

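Several industry insiders consulted by Auto Business Review generally believe that domestic memory makers still lag well behind the international giants in high-end products. On mid- and low-end lines such as DDR4, domestic makers led by CXMT have relatively mature technology, but with the transition to DDR5 under way, they are being relatively conservative about DDR4 capacity.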

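It seems only one main road is left: buying up stock at high prices. "There's no product Chinese buyers can't sweep up," these insiders said. As for the underlying causes, Wang Shuyi, chief analyst at Techsugar, believes this memory shortage stems both from systemic new AI demand and, to a large extent, from market manipulation by the Big Three.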

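He said: "As long as profits are high enough, any industrial product can end up oversupplied. But because the commodity memory market is highly monopolized, with only a handful of players, they have considerable power to manipulate prices, and we won't see the kind of prolonged, widespread, deep losses seen in China's photovoltaic industry."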

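After all, the past two years fell in a downturn, and until as late as Q2 this year all three makers were still posting heavy losses. From a business standpoint, in a relatively closed circle like memory chips, money lost in one place must eventually be earned back in the same place. Since Q4 2024, the three have uniformly delayed shipments and raised prices. DDR4 and DDR5 memory module prices have now risen five- to sixfold since the start of the year, and a buying frenzy has set in.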

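One industry insider noted that the Big Three usually publish order quotes at the start of each quarter, but this year's Q4 quotes were not released until early December. It is hard to believe this was not a case of holding out for a better price. And if they are holding out, it means chips can still be bought, only with a wait and at a higher price, all of which will show up directly in carmakers' costs.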

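To solve the memory problem, Auto Business Review believes automakers may pare back configurations, for example by reducing compute and memory specs and adjusting previously high-spec models, so that the memory chips that used to go into one car could meet the needs of two. This is an adjustment automakers can make on their own.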
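The "one car's memory for two cars" trade-off is simple division over a fixed allocation. The sketch below uses entirely hypothetical quantities (a 1,000,000 GB allocation, 32 GB versus 16 GB per vehicle); the article gives no per-vehicle figures for this scenario, and `vehicles_supported` is an illustrative helper.

```python
# Sketch of the "one car's memory for two cars" arithmetic above.
# All quantities are hypothetical; the article gives no per-vehicle figures here.
def vehicles_supported(allocated_gb: int, gb_per_vehicle: int) -> int:
    """Whole vehicles that a fixed memory allocation can equip."""
    if gb_per_vehicle <= 0:
        raise ValueError("gb_per_vehicle must be positive")
    return allocated_gb // gb_per_vehicle


ALLOCATION_GB = 1_000_000  # hypothetical memory allocation for one period

high_spec = vehicles_supported(ALLOCATION_GB, 32)  # hypothetical high-spec config
reduced = vehicles_supported(ALLOCATION_GB, 16)    # same car with memory halved
print(f"High-spec config: {high_spec:,} vehicles")
print(f"Halved config:    {reduced:,} vehicles")
```

With a fixed allocation, halving per-vehicle memory doubles the number of cars that can be equipped (31,250 versus 62,500 here). Integer division is used because a partly equipped car cannot ship.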

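Moreover, the crisis will not hit carmakers uniformly; it shows clear "model differentiation." Conventional cars need relatively little memory and will be less affected, while smart flagship models with advanced driver assistance are the opposite and will take a noticeably harder hit.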

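One carmaker's procurement head said many models previously used DDR4 and delivered a good user experience; the highest-end parts are not a must. High-end memory lets a model command a higher price as a premium feature, but for ordinary consumers, today's driver-assistance functions already meet everyday needs just fine.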

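For the longer term, carmakers can also get directly involved, using their scale and influence to build solid partnerships with international giants or domestic suppliers. The procurement head said the memory-chip world has its own "rules of the game": price matters, but partnerships and long-term, stable cooperation models also determine how chips are allocated.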

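A typical case is the eight-year, US$16.5 billion chip-foundry agreement Tesla signed with Samsung. Although long-term contracts may mean higher costs, for Tesla a stable supply is key to keeping its push into intelligent driving on track.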

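Another option is for carmakers to lock in product orders early through more of the influential Tier 1 suppliers, broadening their supplier base, avoiding over-reliance on a single source and effectively reducing the risk of supply disruption.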

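But distant water cannot quench an immediate thirst. Auto Business Review has learned that procurement heads from some carmakers have already traveled to South Korea to call on Samsung. They even dined at the same restaurant, at the same table, where Jensen Huang ate fried chicken with Lee Jae-yong and Chung Eui-sun, hoping to win more orders.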

"Going is better than not going," he said.

Risk Warning and Disclaimer

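Markets carry risk; invest with caution. This article does not constitute personal investment advice and does not take into account individual users' particular investment objectives, financial situations or needs. Users should consider whether any opinions, views or conclusions in this article suit their specific circumstances. Anyone who invests on this basis does so at their own risk.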