A major new opportunity for CPUs? When Intel and AMD collide with the new trend of Agents

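As the structure of the AI industry shifts, CPU demand is surging, and Intel and AMD CPUs are facing price increases, with a rise of 20-30% expected in the first quarter. A capacity shift at TSMC has reduced PC CPU shipments, reinforcing the trend. The CPU is becoming more important in AI, especially in high-end AI server configurations, where it is the core of system stability and handles complex logic and non-parallelizable tasks. The market's pursuit of GPUs has obscured the CPU's key role in energy efficiency, and computing architecture is undergoing a disruptive shift.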

Inference demand is surging, the Agent era is arriving, and the structure of the AI industry faces change.

Is the logic of hardware procurement about to reverse?

What are the key tailwinds for Intel and for AMD?

1. What happened? CPU prices are rising

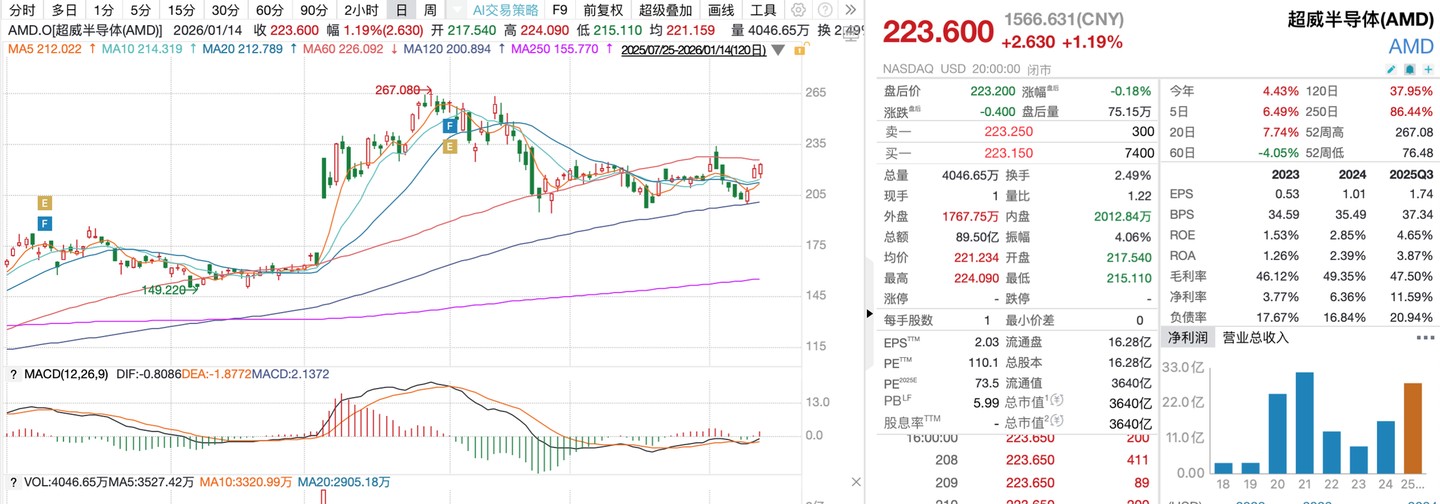

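According to our conversations with industry insiders, the reallocation of TSMC's N3B capacity will reduce PC CPU shipments, leaving Chinese customers facing CPU price increases that are expected to reach 20-30% in the first quarter. The US-listed leaders Intel and AMD have recently continued to outperform both the broader market and the sector indices.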

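Beyond the expected price increases, CPUs also face a major opportunity as the industry's underlying logic is restructured. In our earlier VIP article "DeepSeek keeps cutting costs! Will Engram technology upend storage?", we noted that surging inference demand will lift CPU demand on the AI side, bringing in the CPU as a cost-effective engine for small language models and data preprocessing pipelines.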

As foundational computing infrastructure, the CPU is also likely to become more important.

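①High-end AI servers generally follow a configuration of "2 high-end CPUs for every 8 GPUs". The CPU is the core that coordinates hardware and keeps the system stable; without it, a server cannot complete system-level tasks such as booting, monitoring, and fault diagnosis.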

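②As a general-purpose processor, the CPU is good at serial tasks and complex logic. It can cover the full AI workflow (data preprocessing, support for model training, inference, and post-processing), and is especially suited to the non-parallelizable tasks that GPUs handle poorly.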

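③The software ecosystem built up over many years (operating systems, databases, development tools) is designed around the CPU and needs no additional adaptation. For tasks that do not suit GPUs, CPUs are more cost-effective and can balance performance against cost.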
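To make the configuration rule in item ① concrete, here is a minimal Python sketch that estimates how many host CPUs a GPU fleet implies under the "2 CPUs for every 8 GPUs" ratio. The function name `cpus_needed` and the fleet sizes are illustrative assumptions rather than figures from the source, and real server designs vary.

```python
import math


def cpus_needed(num_gpus: int, gpus_per_server: int = 8, cpus_per_server: int = 2) -> int:
    """Estimate host CPU count for a GPU fleet (hypothetical helper).

    Assumes every server is built to the same fixed ratio and that a
    partially filled server still needs its full set of CPUs.
    """
    if num_gpus < 0 or gpus_per_server <= 0 or cpus_per_server <= 0:
        raise ValueError("counts must be non-negative and ratios positive")
    servers = math.ceil(num_gpus / gpus_per_server)
    return servers * cpus_per_server


if __name__ == "__main__":
    # Illustrative fleet sizes only.
    for gpus in (8, 1024, 10_000):
        print(gpus, "GPUs ->", cpus_needed(gpus), "CPUs")
```

Under this fixed ratio, CPU demand scales linearly with GPU deployments, which is the baseline that any additional agent-driven CPU demand would sit on top of.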

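At the inflection point where AI compute moves from "large-model training" to "inference everywhere" and "autonomous Agents", computing architecture is undergoing a disruptive shift. Over the past two years, the market's enthusiasm for GPUs and HBM (high-bandwidth memory) has obscured the central role of general-purpose processors (CPUs) in system-level energy efficiency. However, with DeepSeek's publication of the paper "Conditional Memory via Scalable Lookup" (Conditional Memory: A New Axis of Sparsity for Large Language Models) and the release of its core module, Engram, the underlying logic of hardware demand has been rewritten.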

2. Why does it matter? The Agent era has arrived

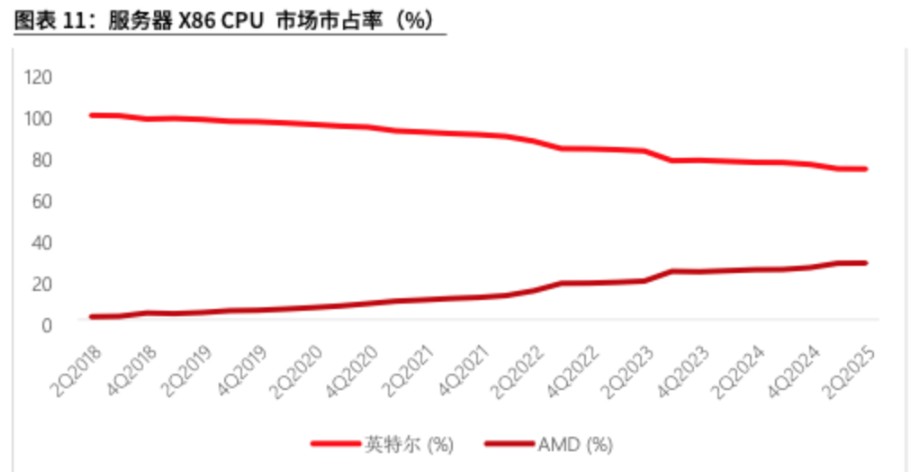

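With the arrival of the AI Agent era, the CPU is no longer just the "commander" of the system; it has become a core source of "productivity" in the inference chain. In reinforcement learning (RL) environment construction, tool calling, data preprocessing, and similar stages, the CPU is replacing the GPU as the new system bottleneck. The global server CPU market has now become a seller's market: lead times for Intel's and AMD's mainstream models have stretched beyond 20 weeks, 2026 capacity is almost sold out, and expert call notes indicate that CPU ASPs (average selling prices) face a systematic increase of 10%-15%. Intel's earlier-than-planned volume production on its 18A process and AMD EPYC's share of nearly 50% in the data center market indicate that competition between the two has entered a new, efficiency-driven phase.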

Source: China Renaissance Securities (華興證券)

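Under the broad AI Agent trend, models no longer just output text; they complete closed-loop operations through external tools such as a Python interpreter, web search, and database retrieval.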

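①Environment construction pressure: Agent-related reinforcement learning training and inference require CPUs to build large numbers of simulated tools and environments in real time.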

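②Concurrency bottleneck: the CPU determines how quickly data can be generated and evaluated concurrently and then fed to the GPU. If CPU performance falls short, the result is a sharp drop in GPU utilization, policy lag, and slower training convergence.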
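The bottleneck in item ② can be illustrated with a deliberately simple throughput model: GPU utilization is capped by the ratio of the rollout rate that CPUs can supply to the rate that GPUs can consume. The function name, parameters, and numbers below are hypothetical, and the linear model ignores batching, queuing, and I/O effects.

```python
def gpu_utilization(cpu_cores: int,
                    rollouts_per_core_per_s: float,
                    gpu_rollouts_per_s: float) -> float:
    """Upper bound on GPU utilization when CPUs feed the GPUs (toy model).

    cpu_cores:               cores running simulated tools/environments
    rollouts_per_core_per_s: rollouts one core can generate and score per second
    gpu_rollouts_per_s:      rollouts the GPUs could consume per second if never starved
    """
    if gpu_rollouts_per_s <= 0:
        raise ValueError("gpu_rollouts_per_s must be positive")
    supply = cpu_cores * rollouts_per_core_per_s
    # GPUs cannot run faster than the data arrives, nor above 100%.
    return min(1.0, supply / gpu_rollouts_per_s)


if __name__ == "__main__":
    # Illustrative numbers only: the GPUs could consume 256 rollouts/s.
    for cores in (32, 64, 128, 256):
        print(cores, "cores ->", f"{gpu_utilization(cores, 2.0, 256.0):.0%}")
```

In this toy model, GPU utilization rises in proportion to CPU capacity until supply matches GPU demand, which is why an under-provisioned CPU tier leaves expensive GPUs idle.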

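For the many long-tail inference tasks that do not need top-tier GPU compute (such as vectorization preprocessing and small language model (SLM) inference), the CPU's high flexibility and low deployment barrier make it the preferred cost-effective engine. We observe that inference accounts for a steadily growing share of AI compute, which brings back the narrative of the CPU as a core inference engine.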

According to our latest industry research and supply-chain feedback, server CPUs are facing their most severe shortage since 2021:

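①Intel: the 4th and 5th Gen Xeon Scalable families are in severe shortage because the AI trend is driving cloud service providers (including the leading North American internet companies) to buy older models in bulk for inference optimization. Lead times have already stretched beyond 20 weeks, and the shortage is expected to last through the first half of 2026.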

②AMD: since Q4 2025, six to seven key models of its 4th and 5th Gen EPYC processors have also been out of stock.

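Driven by the CPU shortage and rising prices for upstream storage media, server OEMs (Lenovo, Inspur, Dell, and others) raised their profit requirements on complete-system shipments in Q1 2025 by 30%-40% from previous levels. Mainstream institutions (such as KeyBanc) note that both Intel and AMD are considering raising server CPU ASPs by 10%-15%.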

3. What to watch next? Intel and AMD

If DeepSeek's new V4 release puts Engram technology in the spotlight, that would benefit both server CPU leaders, Intel and AMD.

For AMD: a strong beneficiary of the industry trend

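①Direct benefit: AMD EPYC processors have built a good reputation in the AI inference market on the strength of their core counts, memory bandwidth, and energy efficiency. As Engram pushes up demand for CPU compute, AMD can win market share directly with its existing products. Conference call notes indicate that AMD's global server CPU share has passed 40%, with rapid growth in markets outside China.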

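②Full-stack advantage: AMD has a complete portfolio spanning CPUs (EPYC), GPUs (Instinct), and interconnect (Infinity Fabric), so it can offer customers a "one-stop", closed-loop option for the Engram architecture. This is attractive to customers that want to diversify their supply chain and reduce their dependence on NVIDIA.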

For Intel: a potential disruptor through strategic synergy

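The benefit to Intel runs deeper, with the potential to take the company from "recovery" to "leadership". At its core is a unique set of strategic synergies: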

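①A close fit with the Saimemory project: Saimemory, a joint venture between Intel and SoftBank, aims to develop low-cost, low-power stacked DRAM as an alternative to HBM. Its targets (double the capacity, 40-50% lower power consumption) closely match Engram's need for "large-capacity, low-cost memory". This is an exclusive strategic position that neither AMD nor other competitors have.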

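②A use case for advanced packaging: Intel's experience with advanced packaging technologies such as EMIB and Foveros can be used to optimize the high-speed interconnect between the CPU and large-capacity memory (whether ordinary DRAM or future Saimemory products), further reducing latency and improving the overall performance of the Engram architecture.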

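③Leverage over the supply chain and ecosystem: if the "CPU+Saimemory" route succeeds, Intel could break open an HBM market currently dominated by three major suppliers, gain independence in a key part of AI memory, strengthen its position in the AI value chain, and move from component supplier toward system-level solution provider.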

Which key investment dimensions should readers watch?

①A rebound in CPU and commodity memory module (DDR5/DDR6) configurations

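Since "CPU+DRAM" can do the work of "GPU+HBM", the CPU ratio and memory capacity in inference servers will rise substantially. Vendors that have high-performance server CPUs and a deep commitment to stacked DRAM technology (such as Intel and AMD) stand to benefit.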

②Advanced packaging and stacked DRAM (HBM-like DRAM)

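To narrow the performance gap between DRAM and HBM, high-density memory modules that are not HBM but are vertically stacked will become the cost-effective choice. Names to watch include Saimemory, the SoftBank-Intel joint venture, and similar projects, as well as early movers in advanced DRAM packaging in China.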

③Real earnings delivery across the CXL supply chain

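Watch CXL controller chips and CXL memory expansion modules. These are the "blood vessels" of the DeepSeek Engram architecture: without an efficient interconnect, this "poor man's solution" does not work.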

④The "long-tail market" on the inference side: edge computing and private servers

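Since models with hundreds of billions of parameters no longer strictly require HBM, edge AI servers and even high-end workstations could run the full version of DeepSeek V4. This would strongly boost the penetration of consumer-grade high-performance DRAM (such as 32GB/64GB modules).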

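The next chapter of AI belongs to inference and Agents, as computing moves from centralized training to broad deployment and autonomous action. The CPU is no longer a silent backdrop; it is moving to center stage as the fulcrum that balances performance, cost, and scale. Today's CPU shortages and price increases are the first shadow cast by this shift. After storage, the CPU is the next core compute segment set for a revaluation and a surge in demand, and the investment opportunity should not be overlooked.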

Risk warning and disclaimer

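Markets carry risk, and investment requires caution. This article does not constitute personal investment advice and does not take into account any individual reader's specific investment objectives, financial situation, or needs. Readers should consider whether any opinion, view, or conclusion in this article suits their particular circumstances. Anyone investing on this basis does so at their own risk.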