At least nine Chinese AI chip companies have shipments exceeding 10,000 units

Under strict chip export controls, the localization of China's AI chips has accelerated. At least nine companies have shipment or order volumes exceeding 10,000 units, including major firms such as Huawei and Baidu as well as several startups. The market share of domestic AI chips is rising rapidly: in the first half of 2025 the market reached $16 billion, with domestic chips accounting for about 35%. As foundry capacity increases, shipments are expected to grow explosively, but the competition is far from settled and is only now entering the stage of large-scale delivery verification.

Under the pressure of strict chip export controls, the process of localization for domestic data center AI chips is accelerating. Currently, domestic AI chips include over a dozen brands such as Huawei Ascend, Baidu Kunlun, Alibaba PingTouGe, and Cambricon.

According to extensive research by Caijing, at least nine Chinese AI chip companies have seen their shipment or order volumes exceed 10,000 units. They include chips backed by major tech firms, such as Huawei Ascend and Baidu Kunlun; AI chip companies that are listed or preparing to list, such as Cambricon, MuXi, TianShu ZhiXin, and SuiYuan Technology; and unlisted startups such as Sunrise (XiWang) and QingWei Intelligent.

Among the AI chip companies with the largest shipment volumes, cumulative shipments have reached the 100,000-unit level. Smaller AI chip companies, such as Sunrise and QingWei Intelligent, are expected to have shipment or order volumes exceeding 10,000 units by 2025.

The current unit price of domestic inference AI chips ranges from approximately 30,000 to 200,000 yuan per unit. Achieving shipment or order volumes at the 10,000 unit scale indicates a certain level of market recognition for the performance, stability, and total cost of ownership of domestic AI chips. This not only initiates competition in scale but also leads to deeper and more comprehensive competition surrounding stability, software ecosystems, and commercialization services.
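For a rough sense of scale, the arithmetic implied by these figures can be sketched as follows. The sketch assumes, purely for illustration, that a 10,000-unit order is filled at a single price somewhere in the quoted range; real product mixes and contract prices are not disclosed, and the function name is hypothetical.

```python
# Illustrative arithmetic only: the unit count and price range come from the
# text above; the single-price assumption is ours, not a reported fact.
UNITS = 10_000
PRICE_LOW_YUAN = 30_000
PRICE_HIGH_YUAN = 200_000


def order_value_yuan(units: int, unit_price_yuan: int) -> int:
    """Total order value in yuan for a given unit count and unit price."""
    return units * unit_price_yuan


low = order_value_yuan(UNITS, PRICE_LOW_YUAN)    # 300,000,000 yuan
high = order_value_yuan(UNITS, PRICE_HIGH_YUAN)  # 2,000,000,000 yuan

print(f"10,000 units: {low:,} to {high:,} yuan")
```

Under that assumption, crossing the 10,000-unit mark corresponds to roughly 300 million to 2 billion yuan in orders.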

A number of large and small AI chip companies are rapidly increasing their shipment volumes, resulting in a swift rise in the market share of domestic AI chips.

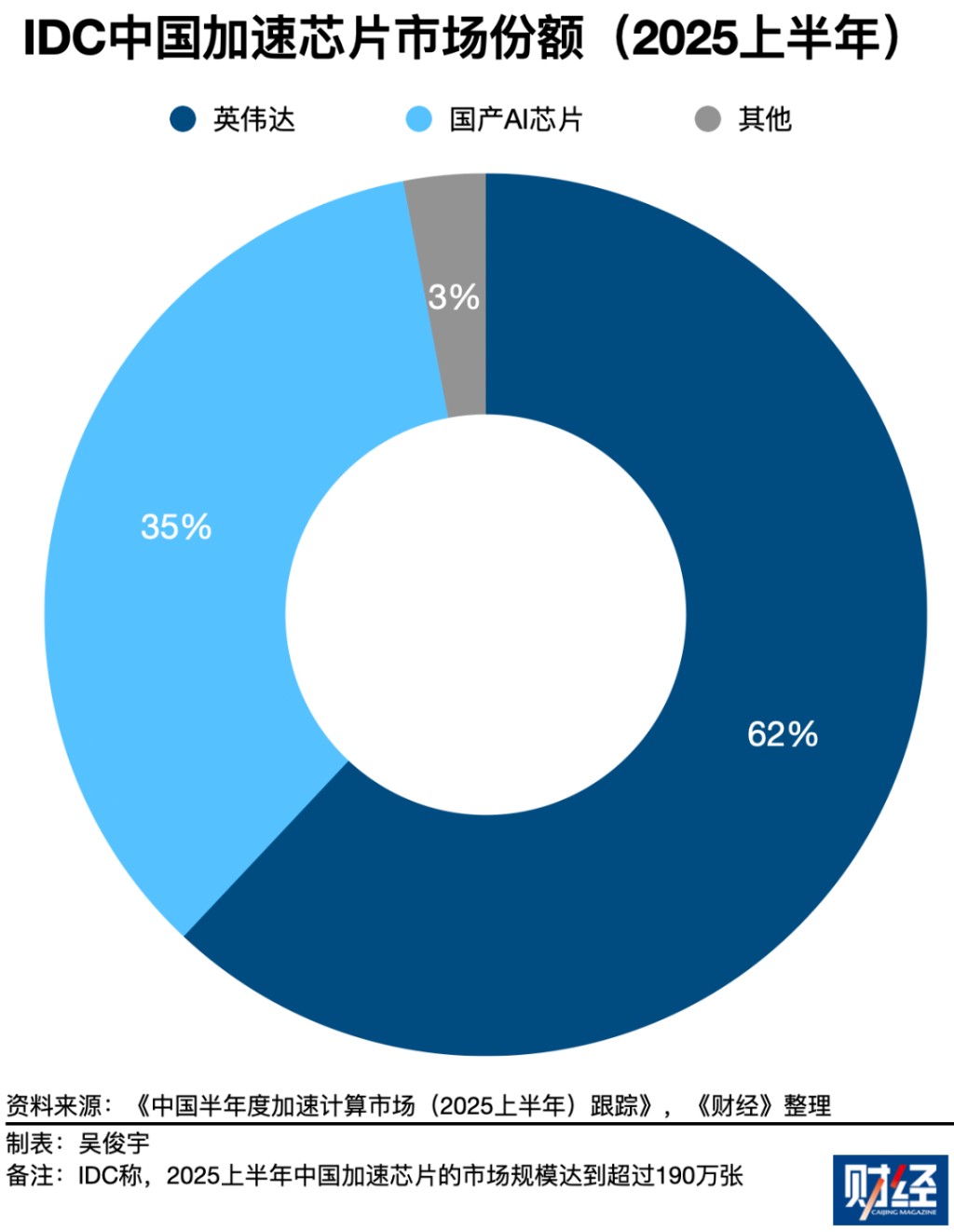

According to data from international market research firm IDC, the market for accelerated servers (i.e., AI chip servers) in China reached $16 billion in the first half of 2025, with shipments exceeding 1.9 million units. NVIDIA accounted for approximately 62% of the market, while domestic AI chips accounted for about 35%. Demand for domestic AI chips continues to grow, at a rate far exceeding that of NVIDIA.

Several semiconductor industry insiders told Caijing that as the foundry capacity for domestic AI chips gradually increases in 2026, the shipment volume of domestic AI inference chips is expected to experience explosive growth.

However, the emergence of multiple companies with 10,000 unit shipment volumes does not mean that the competition for domestic AI chips has been decided, but rather indicates that this round of industrial trial and error is entering the "scale delivery verification" phase.

Emergence of Chinese AI Chip Companies with 10,000 Unit Shipments

Domestic AI chip companies with 10,000 unit shipment volumes are emerging in large numbers.

Huawei Ascend and Baidu Kunlun are the largest domestic AI chips by shipment scale. They are backed by large tech companies and have stable customers. Huawei Ascend and Baidu Kunlun have outstanding performance and have even been used in some model training scenarios.

Data from international market research firm IDC shows that in the second half of 2025, among domestic AI chips, Huawei Ascend ranks first in market share, while Baidu Kunlun ranks third in domestic market share. Huawei Ascend has been used in multiple domestic 10,000-card clusters built by telecom operators and technology companies. Baidu lit up a 10,000-card Kunlun Chip P800 cluster in February 2025 and plans to light up a 30,000-card cluster in the future. In addition to Baidu, a number of large enterprises in finance, energy, manufacturing, and other fields are procuring Baidu Kunlun chips.

Cambricon is also one of the largest domestic AI chip manufacturers by shipment volume. Its main customers include large domestic internet companies, telecom operators, and financial institutions.

From the second half of 2025 to early 2026, AI chip startups such as MuXi, Moore Threads, TianShu ZhiXin, and SuiYuan Technology successively released their prospectuses. The prospectuses show that MuXi, TianShu ZhiXin, and SuiYuan Technology have all exceeded 10,000 cards in cumulative shipments.

The MuXi prospectus reveals that as of the end of August 2025, cumulative sales of MuXi AI chips exceeded 25,000 cards, and that the chips have achieved large-scale applications in multiple countries' public AI computing power platforms, telecom operators' intelligent computing platforms, and commercial intelligent computing centers.

The TianShu ZhiXin prospectus discloses that as of June 30, 2025, TianShu ZhiXin has delivered 52,000 AI chips to 290 customers in industries such as finance, healthcare, and transportation.

The SuiYuan Technology prospectus reveals the shipment situation of SuiYuan Technology's AI accelerator cards and modules as of September 2025. According to calculations by Caijing, the total sales of SuiYuan Technology's AI accelerator cards and modules amount to 97,200 units.

Caijing has learned that domestic AI chip companies that have not yet gone public, such as XiWang and QingWei Intelligent, have also exceeded 10,000 cards in shipment or order volume. However, there is still a significant gap between their shipments and those of the leading domestic AI chip companies.

XiWang was formerly the large chip department of SenseTime and became an independent operation at the end of 2024. The company currently focuses on the research and commercialization of AI inference chips. XiWang's investors include Huaxu Fund under SANY Group, Paradigm Intelligence, Hangzhou Data Group, IDG Capital, Gao Rong Venture Capital, Wu Ji Capital, and other well-known institutions, as well as state-owned funds such as Chengtong Mixed Reform Fund, Hangzhou Jin Investment, and Hangzhou High-tech Jin Investment.

XiWang has already sold AI inference chips such as the QiWang S1 and QiWang S2, and will mass-produce the QiWang S3 chip in 2026. Customers include companies like SenseTime and Fourth Paradigm. At the product launch on January 28, XiWang disclosed that its AI chip deliveries exceeded 10,000 cards in 2025, with significant revenue growth.

QingWei Intelligent is a "Tsinghua system" chip startup. It has received investment from the National Integrated Circuit Industry Investment Fund (Big Fund) and is one of the first national-level specialized and innovative "little giants." Caijing has learned that as of January 2026, QingWei Intelligent's cumulative order volume for AI inference chips has reached at least 20,000 cards, with customers including intelligent computing centers in some local cities.

Some domestic AI chip startups are currently more focused on usability, controllability, and shipment scale than on pursuing extreme peak performance.

Unlike NVIDIA and the leading domestic AI chip companies, some domestic AI chip startups have not adopted advanced processes of 7nm (nanometers) and below or HBM (high bandwidth memory), because domestic capacity for both is currently extremely limited. Instead, they have directly adopted the more mature 12nm process and LPDDR (low-power double data rate) memory, which the local industry chain in mainland China can mass-produce more quickly. These domestic AI chips can be priced as low as 30,000 yuan per card.

In December 2025, Wu Jian, founder of AI infrastructure startup Xinzhihui, told Caijing that he has been in contact with more than ten domestic AI chip companies. He expects multiple domestic AI inference chips to launch in the Chinese market in 2026-2027, with explosive growth during that period.

Some domestic AI chips' inference performance has surpassed NVIDIA H20

Currently, there is a significant gap between the peak performance of domestic AI chips and NVIDIA. There is also a huge disparity in the number of AI chips in the Chinese market compared to the U.S. market.

Reducing the inference cost of domestic AI chips is becoming a shared effort across the Chinese industry, because when raw computing power cannot be compared directly, the difference is determined not by how many chips there are but by how many Tokens each chip can output.

The main usage scenarios for data center AI chips are training and inference. The threshold for designing and using inference AI chips is relatively low, which presents an important opportunity for domestic AI chips to break through. IDC data shows that in 2025, training accounts for 49.6% of China's generative AI IaaS (Infrastructure as a Service) market and inference for 50.4%. IDC predicts that by 2029, the training proportion will drop to 23.3%, while the inference proportion will rise to 76.8%.

Therefore, domestic AI chip companies generally focus on improving inference performance, "squeezing" every Token from each chip. Tokens are the basic units of model inference. When computing power is limited, the number of Tokens generated per second (Token/s) directly determines the response speed, throughput, and cost of AI services. This is the core metric for measuring the actual inference efficiency of chips.
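The link between Token throughput and cost can be made concrete with a minimal sketch. The hourly running cost and baseline throughput below are hypothetical values chosen for illustration, not measurements of any real chip, and the function name is ours.

```python
def cost_per_million_tokens(hourly_cost_yuan: float, tokens_per_second: float) -> float:
    """Cost in yuan to generate one million tokens at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_yuan / tokens_per_hour * 1_000_000


# Hypothetical inputs (assumptions, not reported figures).
HOURLY_COST_YUAN = 3.6          # assumed all-in cost of running one card for an hour
BASELINE_TOKENS_PER_S = 1000.0  # assumed sustained throughput before optimization

baseline = cost_per_million_tokens(HOURLY_COST_YUAN, BASELINE_TOKENS_PER_S)
# Same card and same hourly cost, but ten times the throughput.
optimized = cost_per_million_tokens(HOURLY_COST_YUAN, BASELINE_TOKENS_PER_S * 10)

print(f"baseline:  {baseline:.2f} yuan per million tokens")
print(f"optimized: {optimized:.2f} yuan per million tokens")
```

With the hourly cost held fixed, cost per Token is inversely proportional to throughput, so a tenfold gain in Token/s cuts the cost per million Tokens to one tenth.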

Wu Jian stated that by optimizing hardware adaptation and scheduling, it is possible to achieve several times or even tens of times the Token throughput performance with the same chip and model.

Xu Bing, CEO of XiWang, stated at a product launch on January 28 that the inference cost for one million Tokens in the Chinese market has dropped to 1 yuan by 2025. XiWang's goal is to reduce the cost of one million Tokens to the level of 0.1 yuan using dedicated inference chips and system architecture. Relevant personnel from XiWang told Caijing that XiWang currently focuses on AI inference performance, and that the next-generation QiWang S3 aims to improve AI inference performance by more than ten times compared to the previous-generation product.

In scenarios with lower thresholds and wider usage, the performance of some domestic AI chips has approached or even surpassed NVIDIA's H20. The H20 is a "China-specific" AI chip whose performance was significantly downgraded to comply with U.S. export control policies.

An intelligent computing technician at a local state-owned enterprise told Caijing in December 2025 that he had tested the inference performance of domestic AI chips such as the Huawei Ascend 910B, Baidu Kunlun Chip P800, and Alibaba PPU. When running optimized models such as DeepSeek-R1 and Alibaba Qianwen, the Baidu Kunlun Chip P800 and Alibaba PPU showed Token throughput efficiency superior to NVIDIA's H20.

However, at the software ecosystem level, domestic AI chips currently face widespread problems of slow and difficult adaptation, unlike NVIDIA's chips, which developers can quickly adapt to most models on the market.

A person from a domestic AI chip startup told Caijing that they are currently focused on adapting mainstream models such as DeepSeek, Alibaba Qianwen, and Meta's Llama series, while other models cannot be covered in a timely and comprehensive manner. The technician at the local state-owned enterprise mentioned above told Caijing that it usually takes his company one to two months to adapt a new model for domestic AI chips, so they often cannot use the latest models immediately.

A CEO of an artificial intelligence solutions company even mentioned that Hugging Face (a global AI and large model open-source community) has over 2 million models, while the number of models adapted for a certain domestic AI chip is only in the dozens.

Several semiconductor industry insiders told Caijing that the emergence of companies with 10,000-unit shipments indicates that this round of industrial trial and error is entering the "scale delivery verification" stage. Some semiconductor industry insiders believe that the domestic AI inference chip market is beginning to follow a path similar to that of the early photovoltaic industry: driven by industrial policies, industrial guidance funds, and the secondary capital market, the shipment volumes of several manufacturers are rapidly increasing.

An optimistic expectation is that the market for China's AI inference chips in the coming years will produce several internationally competitive companies through intense market competition, similar to China's photovoltaic industry.

However, another viewpoint is that photovoltaics are highly standardized manufacturing products, and the outcome is ultimately determined by cost curves and production efficiency. The industrial development logic of AI chips is jointly determined by software, hardware, and ecosystem, and its competitive rhythm and elimination mechanism are fundamentally different from those of the photovoltaic industry.

Domestic AI chips are constrained upstream by the production capacity of chip foundries and downstream by the software ecosystem. Delivery stability, software stack maturity, and ecosystem migration costs will determine whether customers repurchase, and whether companies survive, after the 10,000-unit milestone. The brutal competition in China's AI chip market has not even truly begun.
