Bard, Claude, and GPT set off an overseas "free-for-all" among large models. Is OpenAI starting to opt out of the arms race? Meta beats Midjourney | [Hard AI] Weekly Report

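Bard, Claude 2, and ChatGPT have all shipped upgrades, and nobody is sitting still; every large model is chasing ChatGPT, yet OpenAI is preparing to opt out of the arms race; Meta beats Midjourney; Stability AI launches Stable Doodle, built with Tencent's T2I-Adapter.

What were the big events in AI this week?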

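Outlook

Every large model is chasing ChatGPT, yet OpenAI is preparing to opt out of the arms race.

This week, no sooner had OpenAI updated its "Code interpreter" plugin than its two strongest competitors, Anthropic and Google, announced updates to Claude and Bard in quick succession.

The direction of both competitors' upgrades is to let users "get GPT-4 Plus for free," or even to surpass it.

Meanwhile, the originator of large AI models is in no hurry: not only is it declining to race on model scale, it is even preparing to pause and let the other large models catch up.

"According to foreign media reports, OpenAI is preparing to create multiple smaller GPT-4 models that are cheaper to run, with each smaller expert model trained on different tasks and subject areas."

In short, OpenAI intends to take a lightweight, cost-cutting route, and its next goal is likely to promote a range of vertical-domain models.

In [Hard AI]'s view, OpenAI's mixture-of-experts (MoE) approach may sacrifice some answer quality for now, but it may be a more effective path toward industrial applications.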
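To make the mixture-of-experts idea concrete, here is a minimal sketch of top-k gating in plain Python. It is not OpenAI's design, which the report does not describe; the experts, the gate weights, and the function names are all hypothetical stand-ins. The point it illustrates is that a router scores every expert but only runs a few of them, which is where the lower running cost would come from.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    """Hypothetical stand-in for a small specialised model: scales its input."""
    return lambda x: [scale * v for v in x]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k highest-scoring experts and mix their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never executed.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * v for o, v in zip(out, y)]
    return out, top

# Toy setup (all values are illustrative assumptions).
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, used = moe_forward([2.0, 1.0], gate, experts, top_k=2)
print("experts used:", used)  # experts used: [0, 1]
```

With four experts and `top_k=2`, only half of the expert computation runs for this input. The trade-off the article mentions shows up here too: the two skipped experts contribute nothing to the answer.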

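This week's report also covers:

1. Bard, Claude 2, and ChatGPT all ship upgrades, and nobody is sitting still

2. The AI image-generation race heats up again:

Meta beats Midjourney; Stability AI launches Stable Doodle, built with Tencent's T2I-Adapter; the video segmentation model [SAM-PT] appears;

3. Major large-model news in China:

The Cyberspace Administration gives domestic large models "insurance"; Alibaba open-sources China's first large-model "alignment dataset"; JD.com releases the Yanxi large model; BAAI surpasses DeepMind; Wang Xiaochuan's large model gets another upgrade

4. Overseas headlines

Oxford and Cambridge both lift their bans on ChatGPT; Meta to release a commercial version of its AI model; Musk does an about-face, going from opposing AI to founding "xAI";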

Bard, Claude 2, and ChatGPT: nobody is sitting still

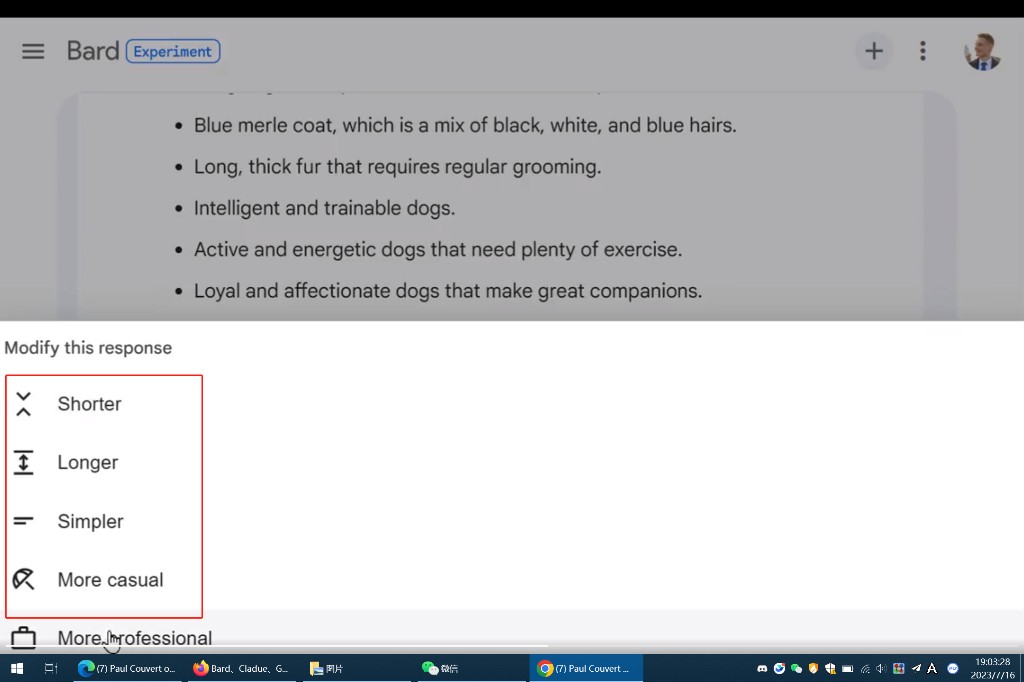

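1. Bard update: Chinese support, image understanding, voice prompts

Bard, which previously did not accept Chinese prompts, now supports input in more than 40 languages, including Chinese, and is newly available in the EU and Brazil.

Beyond that, Bard added the following features:

- Uploading and understanding images (note: English version only)

- Asking questions by voice

- Saving conversation history and sharing conversation links (as in ChatGPT)

- Customizing the length and style of responses

- Exporting code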

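2. Claude 2: summarize PDFs in one click

The second-generation Claude offers capabilities on par with a ChatGPT Plus subscription: it supports uploading PDFs and can find and summarize the relationships between the contents of multiple documents (it accepts several formats, including txt and pdf, with a 10 MB size limit).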

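3. ChatGPT launches its most powerful plugin: Code interpreter

Code interpreter is the latest plugin for GPT-4. It was initially billed as the plugin that "turns everyone into a data analyst," mainly because it is very strong at data processing and plotting.

Recently, though, users testing it have unlocked new uses, such as making short videos, simple mini-games, and memes.

It seems the plugin's capabilities are still waiting for users to explore and uncover them.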

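What else happened in AI image generation

1. Meta breaks through the multimodal ceiling and beats Stable Diffusion and Midjourney

Meta has released CM3leon, a single multimodal model. Is it at the top from day one?

Word is that CM3leon outperforms Stable Diffusion, Midjourney, and DALL-E 2. Why?

[How hard-core is it]

CM3leon stands out by using an autoregressive model, reportedly needing five times less compute than the diffusion models used by earlier front-runners such as Stable Diffusion;

It can handle more complex prompts and still complete the image-generation task;

It can edit existing images from free-form text instructions, for example changing the color of the sky or adding an object at a specific location.

Objectively speaking, CM3leon's capabilities really could put it at the top of the multimodal field: its images are sharper, and it breaks through earlier bottlenecks in multimodal image generation, such as rendering hand details and laying out objects and spatial details from language prompts.

This is probably thanks to CM3leon's versatile architecture, which means multimodal models could in future switch freely among text, image, video, and other tasks, something earlier multimodal models could not do.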
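The autoregressive approach mentioned above can be illustrated with a toy generation loop. This is only a sketch of the general technique, not CM3leon's implementation: the lookup table, the token names (including the `<img>` marker and `img_17`-style image tokens), and the greedy decoding rule are all invented for illustration. What it shows is that text and image content can sit in one token sequence, with each new token predicted from the tokens before it.

```python
def generate(next_token_scores, prompt, max_new_tokens, end_token="<eos>"):
    """Greedy autoregressive decoding: repeatedly append the most likely next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)   # scores conditioned on the sequence so far
        nxt = max(scores, key=scores.get)    # greedy choice: highest-scoring token
        tokens.append(nxt)
        if nxt == end_token:
            break
    return tokens

# Hypothetical toy "model": a lookup table keyed on the last token only.
# A real model would condition on the whole sequence with a neural network.
TOY_TABLE = {
    "a": {"red": 0.6, "blue": 0.4},
    "red": {"sky": 0.9, "<eos>": 0.1},
    "sky": {"<img>": 0.8, "<eos>": 0.2},
    "<img>": {"img_17": 1.0},     # made-up discrete image tokens
    "img_17": {"img_42": 1.0},
    "img_42": {"<eos>": 1.0},
}

def toy_scores(tokens):
    return TOY_TABLE.get(tokens[-1], {"<eos>": 1.0})

print(generate(toy_scores, ["a"], max_new_tokens=10))
# ['a', 'red', 'sky', '<img>', 'img_17', 'img_42', '<eos>']
```

A diffusion model, by contrast, starts from noise and refines the whole image over many denoising steps rather than emitting it token by token.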

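2. Stability AI releases Stable Doodle, an image-generation control model

Simply put, you give Stable Doodle a sketch and it uses that sketch to control the generated image, much as ControlNet does.

[How hard-core is it]

Stable Doodle is built by combining the Stable Diffusion XL (SDXL) model with T2I-Adapter.

T2I-Adapter is a text-to-image controller from Tencent ARC Lab. With only about 70M parameters and about 300 MB of storage, it is very compact, yet it understands the outlines of a sketch well and gives SDXL finer control over image generation.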

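3. Video segmentation model [SAM-PT] appears

A while ago, Meta AI open-sourced Segment Anything Model (SAM), a very powerful foundation model for image segmentation, and it instantly set the AI community abuzz.

Now researchers from ETH Zurich, the Hong Kong University of Science and Technology, and EPFL have released SAM-PT, which extends SAM's zero-shot capability to tracking and segmentation in dynamic video.

In other words, video can now be segmented at a fine-grained level too.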

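Large-model news in China

1. The Cyberspace Administration steps in, and domestic large models get "insurance"

The Cyberspace Administration of China and six other departments jointly issued the Interim Measures for the Management of Generative Artificial Intelligence Services (hereafter "the Measures"), which take effect on August 15, 2023.

The main points:

1. Regulation by category and by tier is required;

2. Requirements for training-data processing, labeling, and related work are set out explicitly;

3. Requirements for providing and using generative AI services are made clear;

The Measures effectively give companies that use or provide generative AI services in China a kind of insurance: from now on, even if something goes wrong, people will know where to file a complaint.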

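2. Alibaba open-sources China's first large-model "alignment dataset"

Last month, a joint team from Tmall Genie and the Tongyi large-model group released 100PoisonMpts, an open-source dataset for large-model governance, also known as "100 bottles of poison for AI." Its aim is to try to lure AI into traps of discrimination and bias that even ordinary people find hard to avoid.

An evaluation of several large models on these "poison" prompts found that on depression-related questions, GPT-4, GPT-3.5, and Claude still earned the higher overall scores.

Alibaba has also open-sourced CValues, an alignment evaluation dataset with 150,000 entries, intended mainly for "large-model alignment" research.

What is alignment for?

Simply put, alignment research aims to make AI give answers that better match human intent: answers that are more emotionally aware, show empathy, and conform to human values, in the hope that AI will eventually learn to show human care.

In the aligned result (shown on the right in the original comparison), the answers from the tested ChatPLUG-100Poison model after alignment training do have more of a human touch.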

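3. JD.com releases the Yanxi large model

JD.com officially released the Yanxi (言犀) large model and the Yanxi AI development and computing platform, aiming to be the service tool that best understands industry.

Registration for early access to Yanxi is already open, and the official launch is expected in August.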

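4. BAAI surpasses DeepMind

The "Wudao Vision" (悟道·視界) research team at the Beijing Academy of Artificial Intelligence (BAAI) open-sourced Emu, a new unified multimodal pretrained model. It not only performs well on 8 benchmarks but also surpasses a range of previous SOTA models.

The model's defining feature is that it connects multimodal input to multimodal output;

It can complete content for arbitrary multimodal image-text tasks by autoregressively predicting the next step of the task;

What big things can this pretrained model do?

It could be used to train a model that rivals Meta's freshly released CM3leon. (The method is out there; the rest is up to you.)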

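5. Wang Xiaochuan's large model gets another upgrade

Baichuan Intelligence has released another upgraded large model, Baichuan-13B, with the parameter count jumping from 7 billion to 13 billion.

Released alongside it are a chat model, Baichuan-13B-Chat, and its two quantized versions, INT4 and INT8.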
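For readers unfamiliar with what an INT8 or INT4 version buys, here is a rough sketch. It uses simple symmetric per-tensor quantization, which is an assumption for illustration; the article does not say which quantization scheme Baichuan uses. The memory estimate counts model weights only, and all function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats in [-max_abs, max_abs] to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

def weight_memory_gb(num_params, bits_per_weight):
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

# Round trip on a few made-up weights: values come back close, not exact.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, [round(r, 3) for r in restored])

# Weight storage for a 13-billion-parameter model at different precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(13e9, bits)} GB")
```

For 13 billion parameters this works out to roughly 26 GB at 16 bits, 13 GB at 8 bits, and 6.5 GB at 4 bits, which is why quantized versions are attractive for running a model on smaller hardware, at the cost of some rounding error in each weight.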

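Baichuan-13B raises the ceiling for open-source training data:

Baichuan-13B was trained on 1.4 trillion tokens, 140% of the amount used for LLaMA-13B (Meta's well-known large model), that is, 40% more. In Chinese-language evaluations, particularly in natural sciences, medicine, the arts, and mathematics, it reportedly beats GPT outright.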

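Other overseas AI news

- Oxford and Cambridge both lift their bans on ChatGPT;

- Meta to release a commercial version of its AI model;

- Musk does an about-face: after loudly opposing generative AI, he has now announced the founding of "xAI";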

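Author: Han Feng (韓楓). Source: Hard AI (硬 AI). Original title: "Bard, Claude, and GPT set off an overseas 'free-for-all' among large models. Is OpenAI starting to opt out of the arms race? Meta beats Midjourney | [Hard AI] Weekly Report"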