2026's Harsh AI Question: After Billions in AI Capex, Where Will Returns Come From?

In the prior piece ‘AI’s Original Sin: Is NVIDIA the AI market’s addictive stimulant?’, Dolphin Research argued that by 2025 the AI value chain had skewed sharply upstream. Under FOMO, investment keeps climbing while the chain's health deteriorates and competition among core players intensifies.

As the internet moves into the AI era, no matter how shifting production factors reshape bargaining power along the stack, AI still faces a final exam: after a front-loaded buildout that is capex-heavy, tech- and talent-intensive, and depreciated over roughly five years, is this a bubble or not? The ultimate yardstick is simple: does the ROI add up?

Here, Dolphin Research roughly sizes the ROI hurdle for this AI investment feast and flags what to watch in 2026 and beyond to clear it.

1) How much incremental revenue makes North America’s AI capex ROI work?

Start with the basics: how much revenue must this feverish AI capex generate to earn a reasonable ROI? We make steady-state, conservative assumptions:

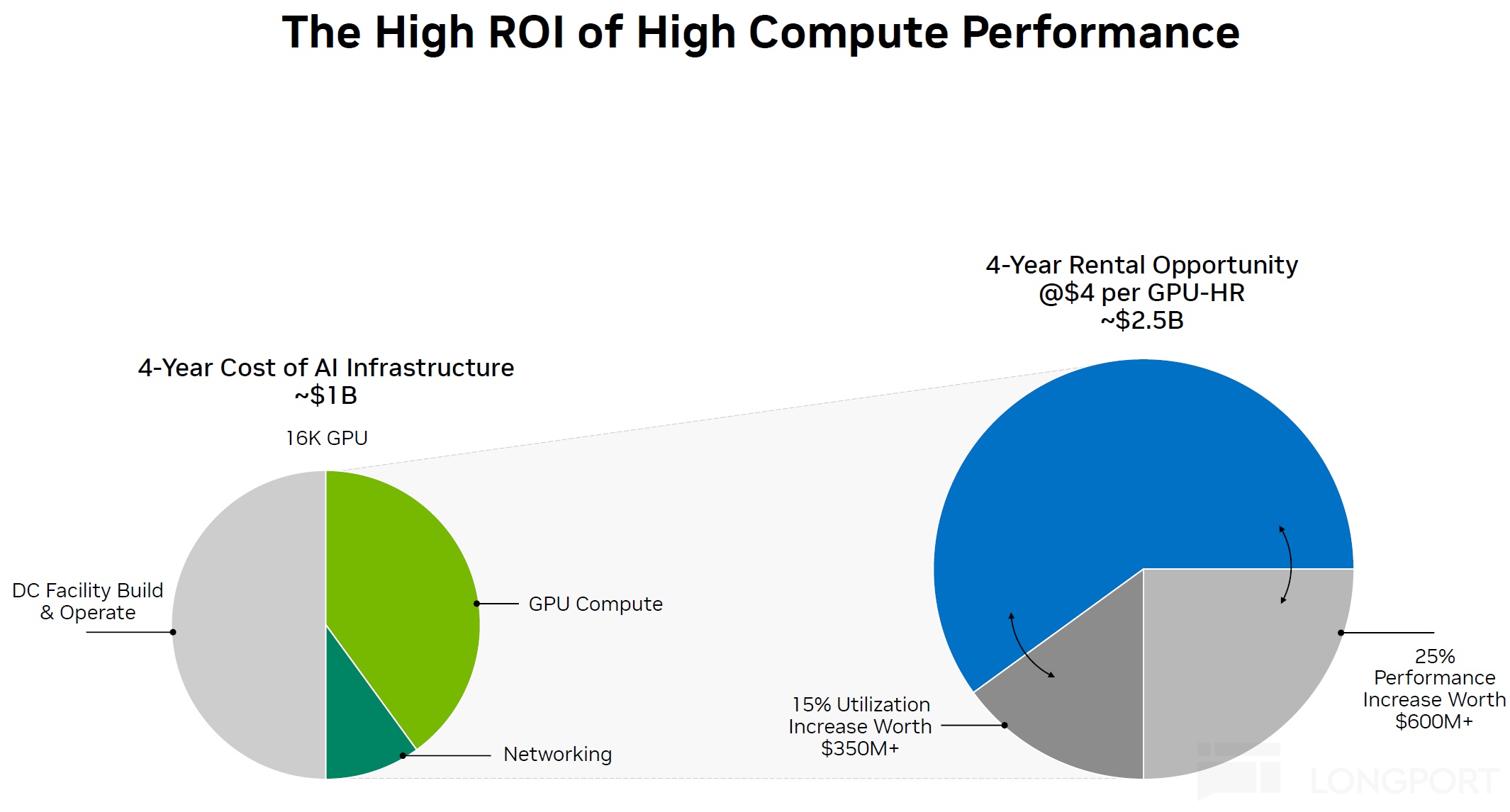

a) For every $1 of AI chips plus networking sold by NVIDIA (NVDA.US), cloud providers invest $2 in data centers.

b) At a 50% gross margin (GPM) for cloud, $2 of data-center capex must support $4 of cloud revenue to cover its cost.

c) Downstream end users (the ultimate customers of AI/model-based services), also at a 50% GPM, must generate $8 of revenue to justify $4 of cloud spend.

Along this long chain, each $1 of chip and networking revenue at the chip-designer level needs end-market use cases to generate roughly 1×2×2×2 = $8 of revenue to clear a reasonable ROI. That is the implied hurdle.
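The chain above can be written as a short function. This is a minimal sketch under the article's simplified assumptions: each layer's spend is treated as the next layer's cost of revenue, so revenue = cost / (1 - gross margin). `required_revenue` and its parameter names are hypothetical; the defaults simply encode assumptions a) to c).

```python
# Hurdle arithmetic: walk $1 of chip + networking spend down to the end-market revenue it implies.
# Defaults mirror the article's assumptions a)-c); this is not a forecast.

def required_revenue(chip_spend: float,
                     capex_per_chip_dollar: float = 2.0,
                     cloud_gpm: float = 0.5,
                     end_user_gpm: float = 0.5) -> dict[str, float]:
    """Return the data-center capex, cloud revenue, and end-market revenue implied by chip spend."""
    dc_capex = chip_spend * capex_per_chip_dollar     # a) CSP data-center investment
    cloud_revenue = dc_capex / (1 - cloud_gpm)        # b) capex treated as the cloud layer's cost
    end_revenue = cloud_revenue / (1 - end_user_gpm)  # c) cloud bill treated as end users' cost
    return {"dc_capex": dc_capex, "cloud_revenue": cloud_revenue, "end_revenue": end_revenue}


chain = required_revenue(1.0)
print(chain)
```

With both margins at 50%, each layer doubles the requirement, which reproduces the 1×2×2×2 = 8 multiplier.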

Source: NVIDIA NDR materials

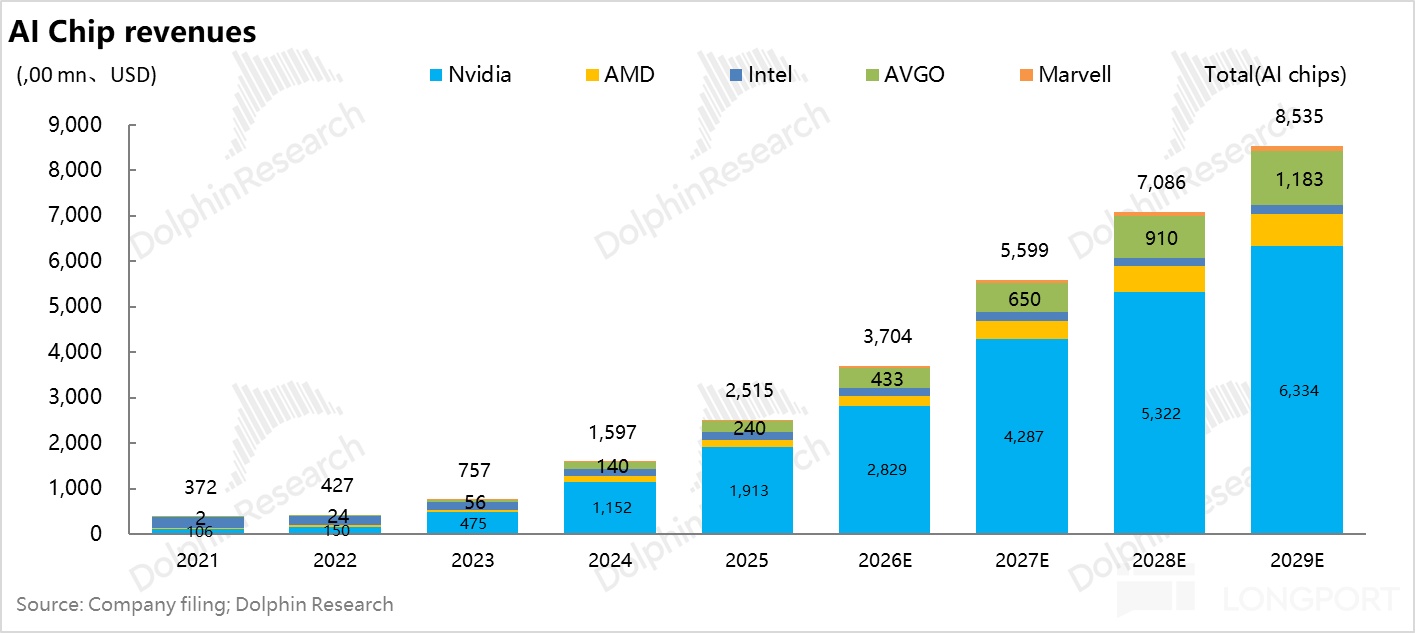

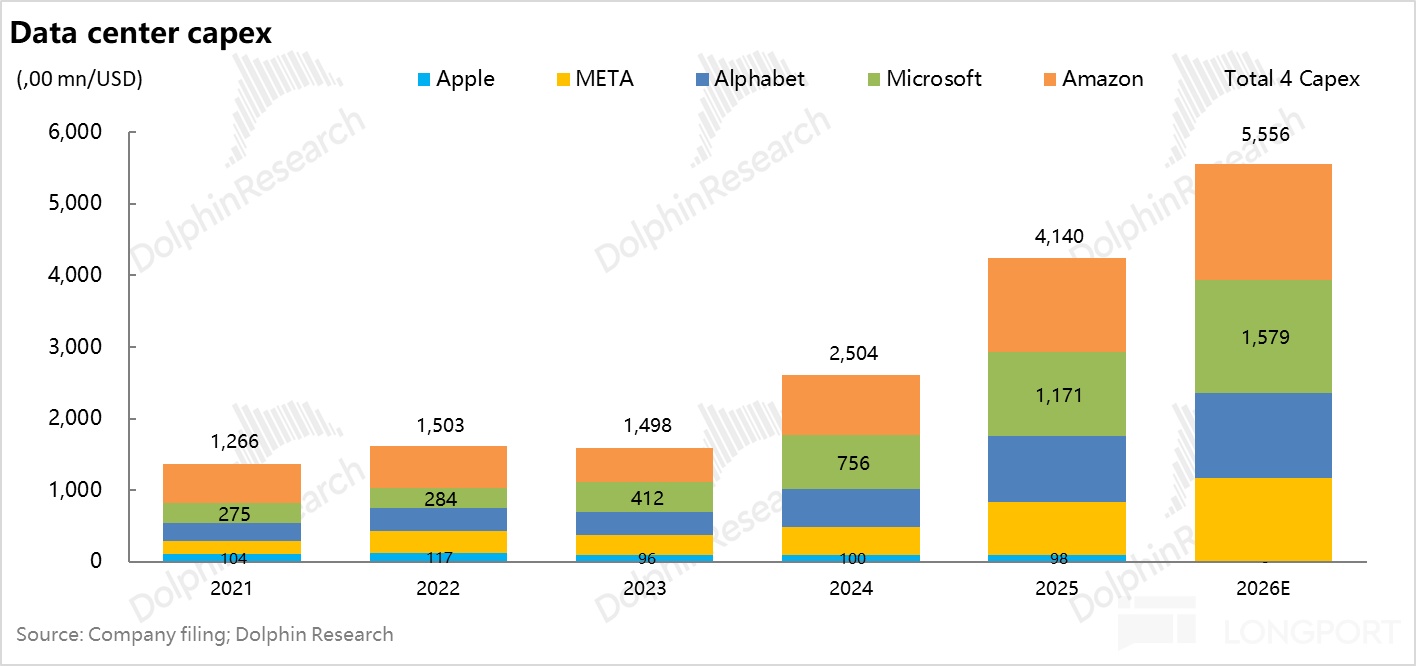

Market consensus for leading chip designers' 2026 revenue (i.e., cloud AI chip and networking procurement) is about $370bn. This roughly maps to $640bn of 2026 data-center capex by cloud service providers (CSPs), also in line with current consensus.

Over a five‑year amortization cycle, that capex must support $640bn×2 ≈ $1.2tn of CSP revenue to make ROI work. On customer bills, that is $1.2tn of AI cloud spend that end customers must recoup with roughly $2.4tn of incremental economic value (via new revenue or cost take‑out).

Spread evenly over five years, that $2.4tn is ~$500bn a year, which does not sound impossible. But most forecasts assume AI investment keeps compounding from this base for years.

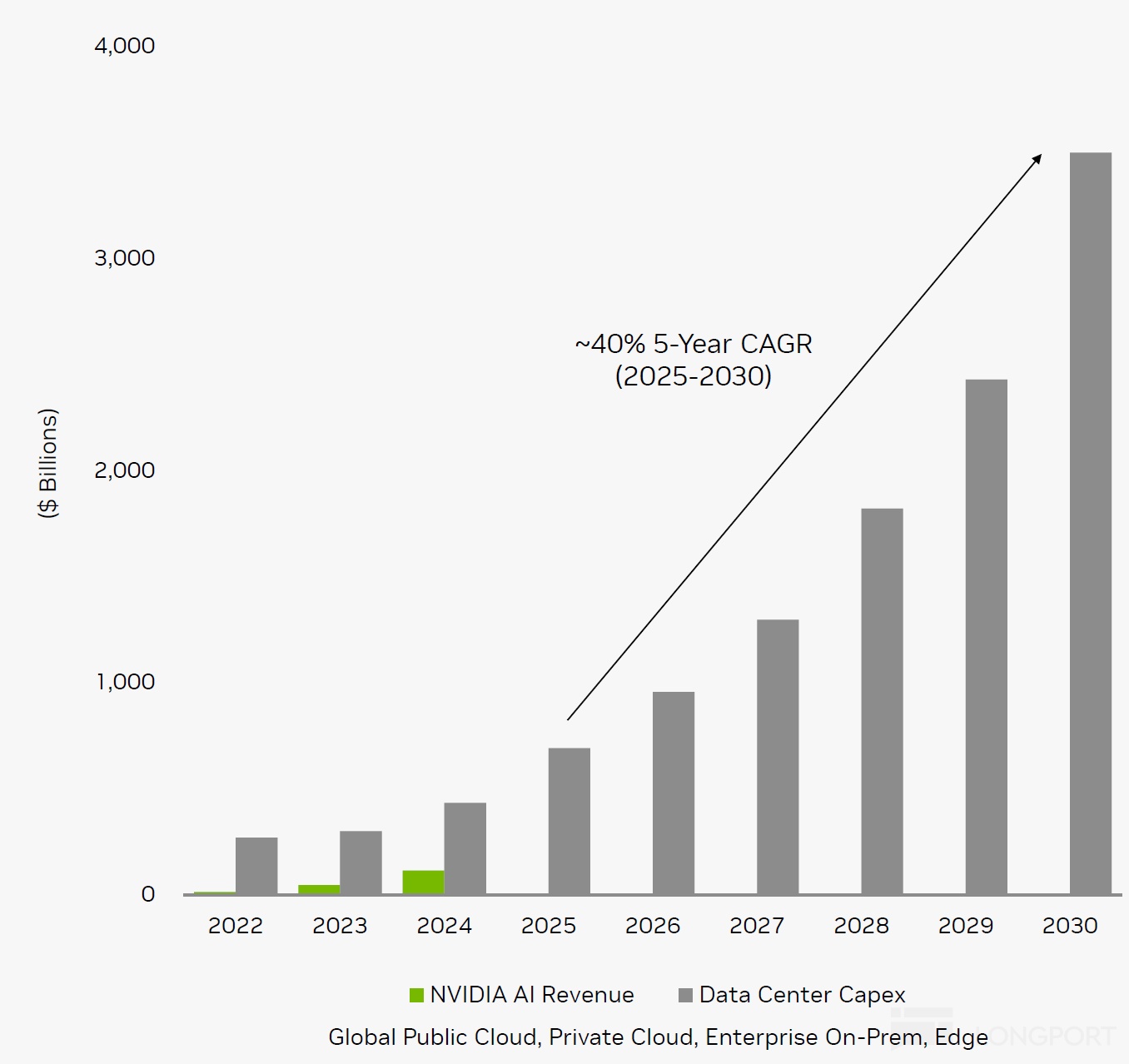

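NVIDIA itself projects cloud AI capex could reach $3.5tn by 2030. But even if capex merely stayed flat at the 2026 level for five years, each year's vintage would carry its own value requirement, so spreading a single $2.4tn target evenly over five years understates the hurdle.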

Put differently, once five equal ~$640bn vintages are depreciating side by side, the annual economic value requirement reaches ~$2.4tn. With U.S. nominal GDP at ~$31tn, that is already over 7.5% of 2025 nominal GDP.
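The vintage-stacking logic can be sketched as follows, assuming (as the text does) that each year's ~$640bn vintage needs ~$2.4tn of end-market value recovered evenly over five years, and that capex stays flat rather than compounding. The constant and function names are hypothetical; the ~$31tn GDP figure is the text's.

```python
# Stack the annual value requirement of successive, equal-sized capex vintages.
# Article-derived inputs: ~$2.4tn per vintage, five-year life, ~$31tn U.S. nominal GDP.

VALUE_PER_VINTAGE_BN = 2_400.0
LIFE_YEARS = 5
US_NOMINAL_GDP_BN = 31_000.0


def annual_requirement_bn(year: int) -> float:
    """Value needed in a given year (1-based) if one equal vintage is added every year."""
    per_vintage_per_year = VALUE_PER_VINTAGE_BN / LIFE_YEARS  # ~$480bn per vintage per year
    live_vintages = min(year, LIFE_YEARS)                     # vintages roll off after five years
    return per_vintage_per_year * live_vintages


for year in range(1, 7):
    req = annual_requirement_bn(year)
    print(f"year {year}: ${req:,.0f}bn ({req / US_NOMINAL_GDP_BN:.1%} of GDP)")
```

Once five vintages overlap, the yearly requirement plateaus at the full ~$2.4tn; if capex compounds instead of staying flat, the plateau keeps rising.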

In other words, U.S. AI capex must diffuse globally and also lift domestic productivity. Per Kevin Hassett, an oft‑mentioned Fed chair candidate, AI would need to add ~4% to U.S. productivity in 2026; real GDP growth at or below 3% would not be enough.

With a >$2tn end‑market value hurdle in mind, what should we watch in 2026 to judge whether the AI bubble bursts or not? Dolphin Research suggests tracking three lines:

1) Aggregators of AI utility: can U.S. internet giants re‑accelerate profit growth?

2) Can OpenAI, the spearhead, truly become the next internet behemoth?

3) Device‑side AI: when does it finally inflect?

2) Aggregators of AI utility: can U.S. internet giants re‑accelerate profit growth?

U.S. internet giants are both cloud providers and owners of key distribution, making them the main vehicles for downstream use cases. AI recommender systems are being deployed to upgrade legacy rec engines and boost matching between users and goods, content, and ads.

AI's impact should show up first as revenue acceleration, or as opex efficiencies and labor substitution. If revenue does not accelerate, capex intensity will rise and the business will grow more asset-heavy; even if AI trims some R&D headcount costs, higher depreciation and amortization will compress margins.

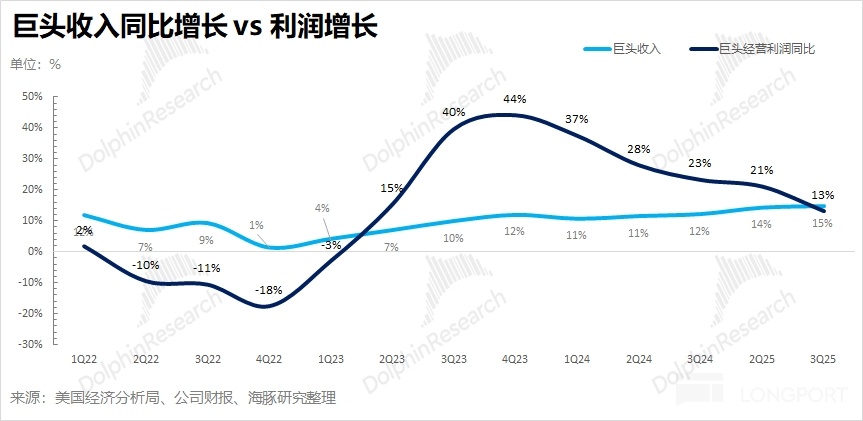

When revenue growth stalls or slows while margins compress, EPS decelerates, undermining the two pillars of the past three years' outperformance: fast revenue growth and even faster EPS growth from scale.
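The margin mechanics above can be illustrated with a toy P&L. This is a minimal sketch with entirely hypothetical numbers, not any company's reported figures: operating income stands in for EPS (flat tax rate and share count assumed), and `PnL`/`next_year` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class PnL:
    revenue: float
    cash_opex: float  # COGS, R&D, and SG&A excluding depreciation and amortization
    d_and_a: float    # depreciation and amortization from past capex

    @property
    def operating_income(self) -> float:
        return self.revenue - self.cash_opex - self.d_and_a

    @property
    def operating_margin(self) -> float:
        return self.operating_income / self.revenue


def next_year(p: PnL, rev_g: float, opex_g: float, da_g: float) -> PnL:
    """Roll a P&L forward one year, growing each line at its own (hypothetical) rate."""
    return PnL(p.revenue * (1 + rev_g), p.cash_opex * (1 + opex_g), p.d_and_a * (1 + da_g))


base = PnL(revenue=100.0, cash_opex=55.0, d_and_a=10.0)  # hypothetical 35% operating margin

scenarios = {
    # Revenue outgrows costs: operating leverage intact.
    "leverage intact": next_year(base, rev_g=0.15, opex_g=0.10, da_g=0.15),
    # Slower revenue, AI-talent R&D inflation, and a D&A step-up from heavier capex.
    "spend-revenue mismatch": next_year(base, rev_g=0.10, opex_g=0.12, da_g=0.40),
}

for name, p in scenarios.items():
    oi_growth = p.operating_income / base.operating_income - 1
    print(f"{name}: margin {p.operating_margin:.1%}, operating income {oi_growth:+.1%}")
```

In the first case operating income grows faster than revenue; in the second, heavier D&A and R&D growth push operating income below the base year even though revenue still grows 10%.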

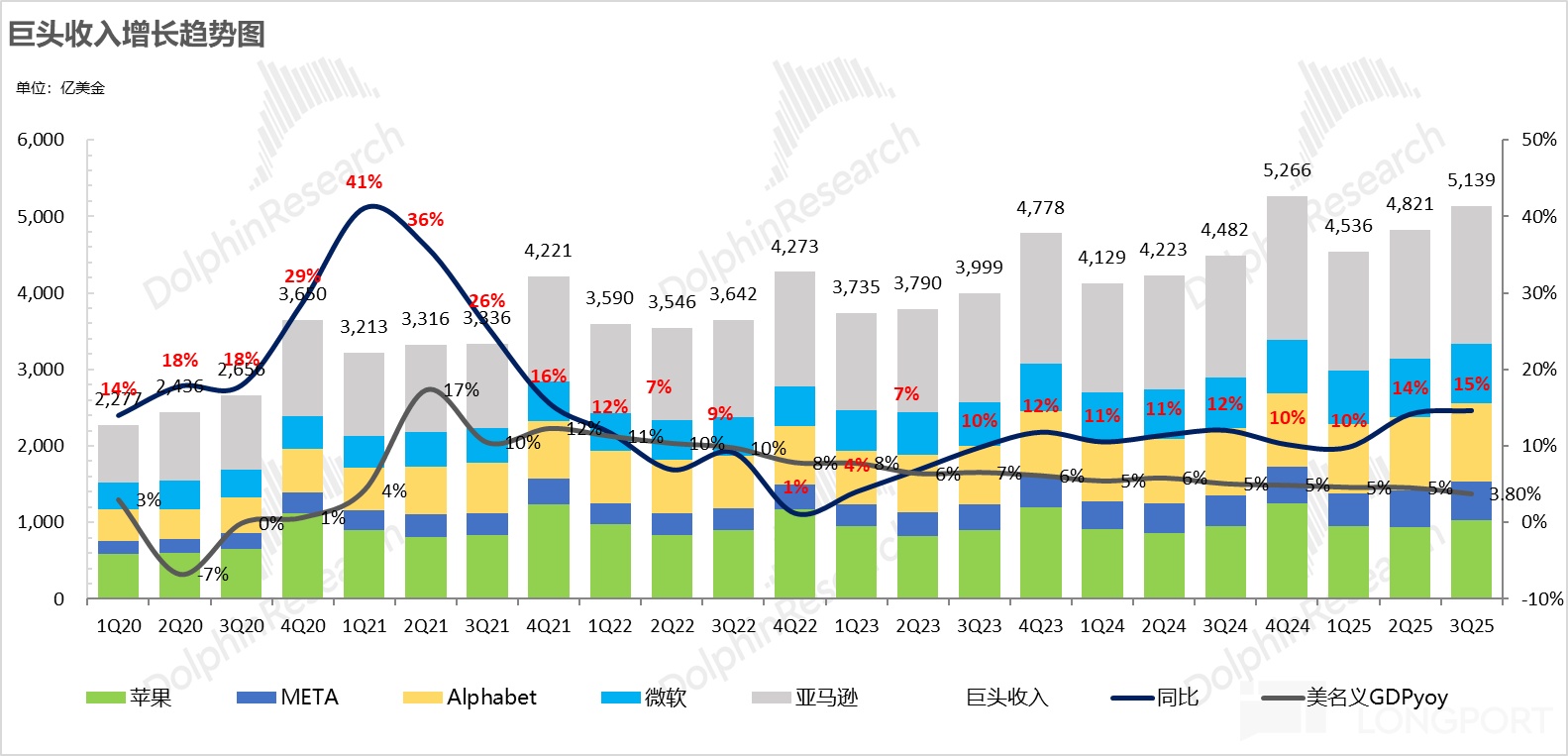

Over recent quarters, the big four (Microsoft, Google, Meta, Amazon) have largely held high revenue growth. Thanks to better ad monetization from AI‑driven recommendations, led by Meta, growth has even shown signs of acceleration.

In early AI investment stages, depreciation was rising (ex‑Microsoft), but stronger top‑line growth mostly absorbed the added costs. That kept operating leverage intact.

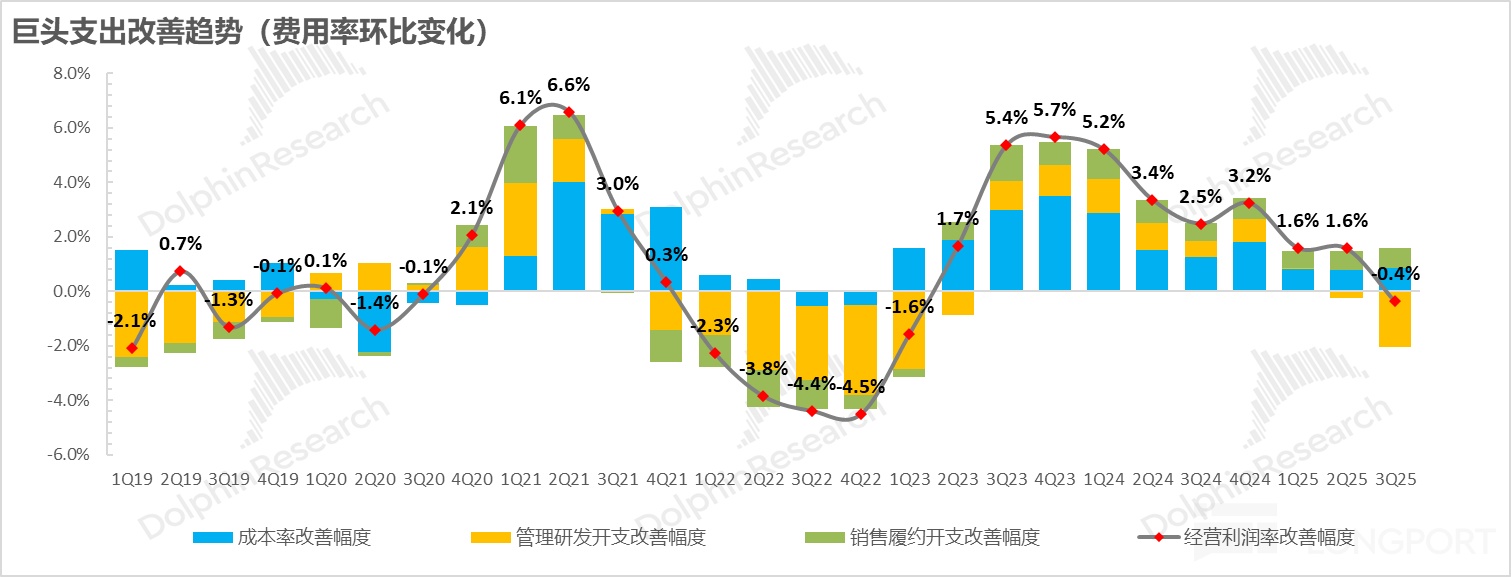

Since Q3 2025, the R&D land grab for AI talent has visibly eaten into margins, offsetting the operating leverage from revenue acceleration.

The result: for the first time in nine quarters, operating margin declined and profit growth lagged revenue growth. That inflection is notable.

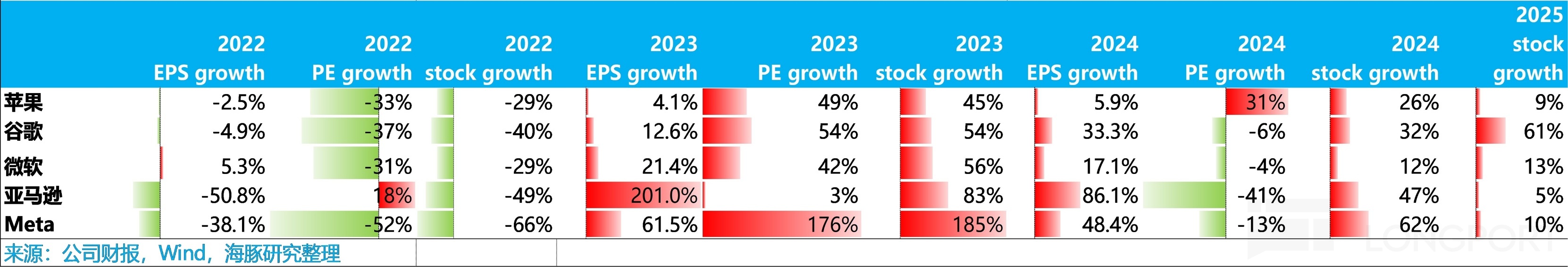

Historically, when growth slows and margins roll over together (e.g., in 2022), EPS takes a double hit and multiples compress sharply.

Based on Q3 outlooks, Meta Platforms (META.US) has already been de-rated, as its guidance implied a Q4 growth slowdown. For the other giants (ex-Apple, AAPL.US), the market expects deceleration in legacy internet businesses (e-commerce, ads) and only a modest acceleration in cloud.

So can profit growth re‑accelerate in 2026? From Q3 signals, unless AI productivity proves overwhelming, the odds look slim, and even sustaining revenue acceleration will be tough.

If 2026 plays out that way, AI would not be adding meaningful incremental revenue to legacy businesses, and cloud would not be accelerating enough. Margins would likely drift down further with EPS decelerating.

Most giants, including Microsoft (MSFT.US), appear headed into a mismatch: flattish revenue against heavier investment. Against late-2025's elevated multiples, 2026 could bring higher AI capex, visibly higher D&A on the P&L, and R&D ‘inflation’ from the AI hiring war.

If AI payback (via revenue lift or cost cuts) does not offset these added costs, some U.S. mega-caps may face multiple compression. Even without a de-rating, outperformance would be hard to deliver.

3) Can OpenAI, the end‑market spearhead, become the next behemoth?

AI monetization today concentrates at the consumer and enterprise ends. That is where adoption is most visible.

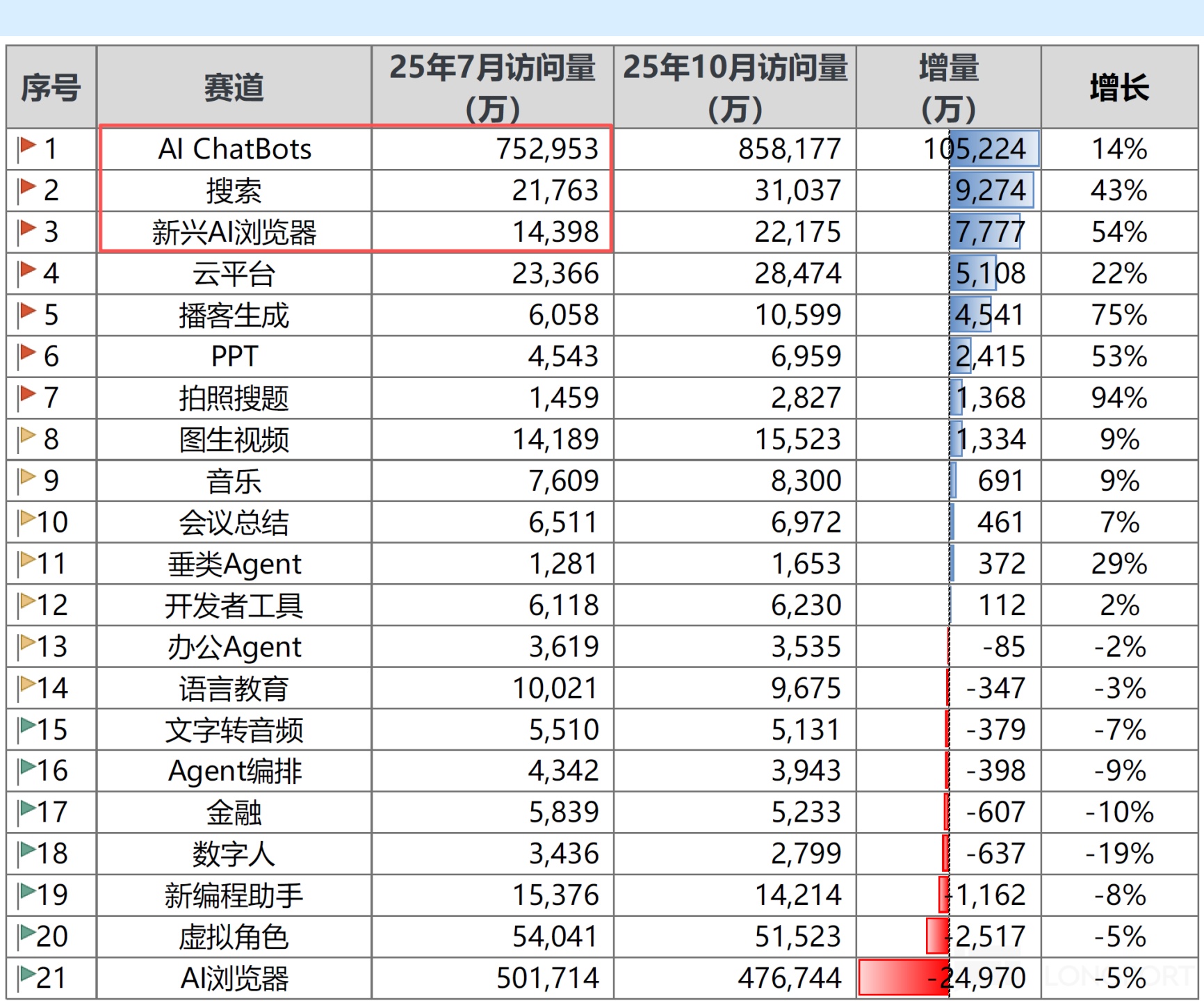

On the consumer side, the main use cases are chatbots and AI search, plus AI browsers. But without a new interaction paradigm, incumbent browsers will be hard to disrupt.

On the enterprise side, adoption centers on productivity/agentic tools. Growth is fast, but the base remains small.

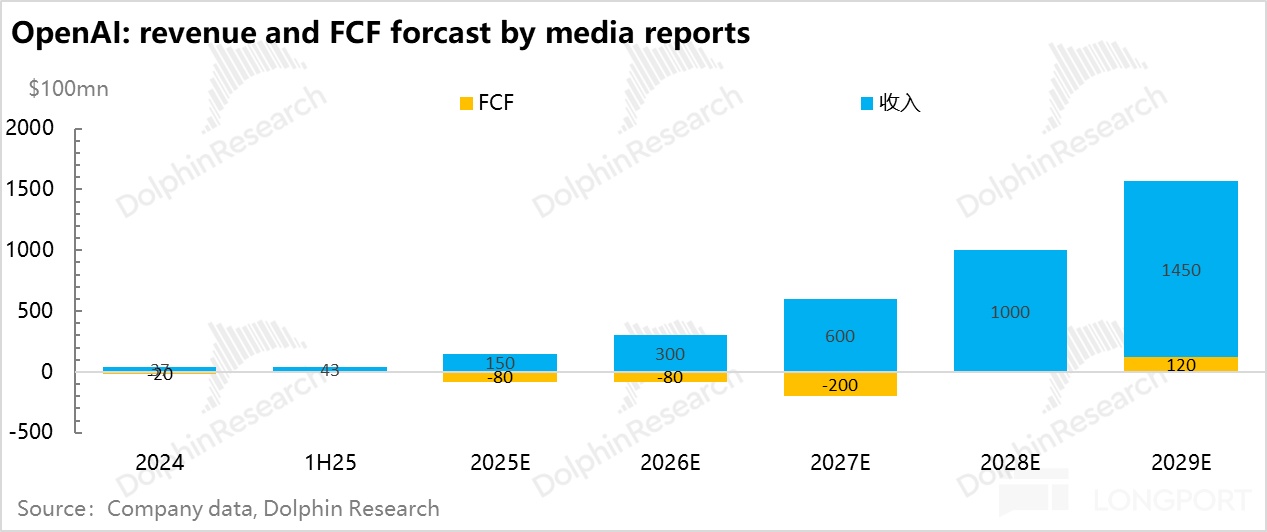

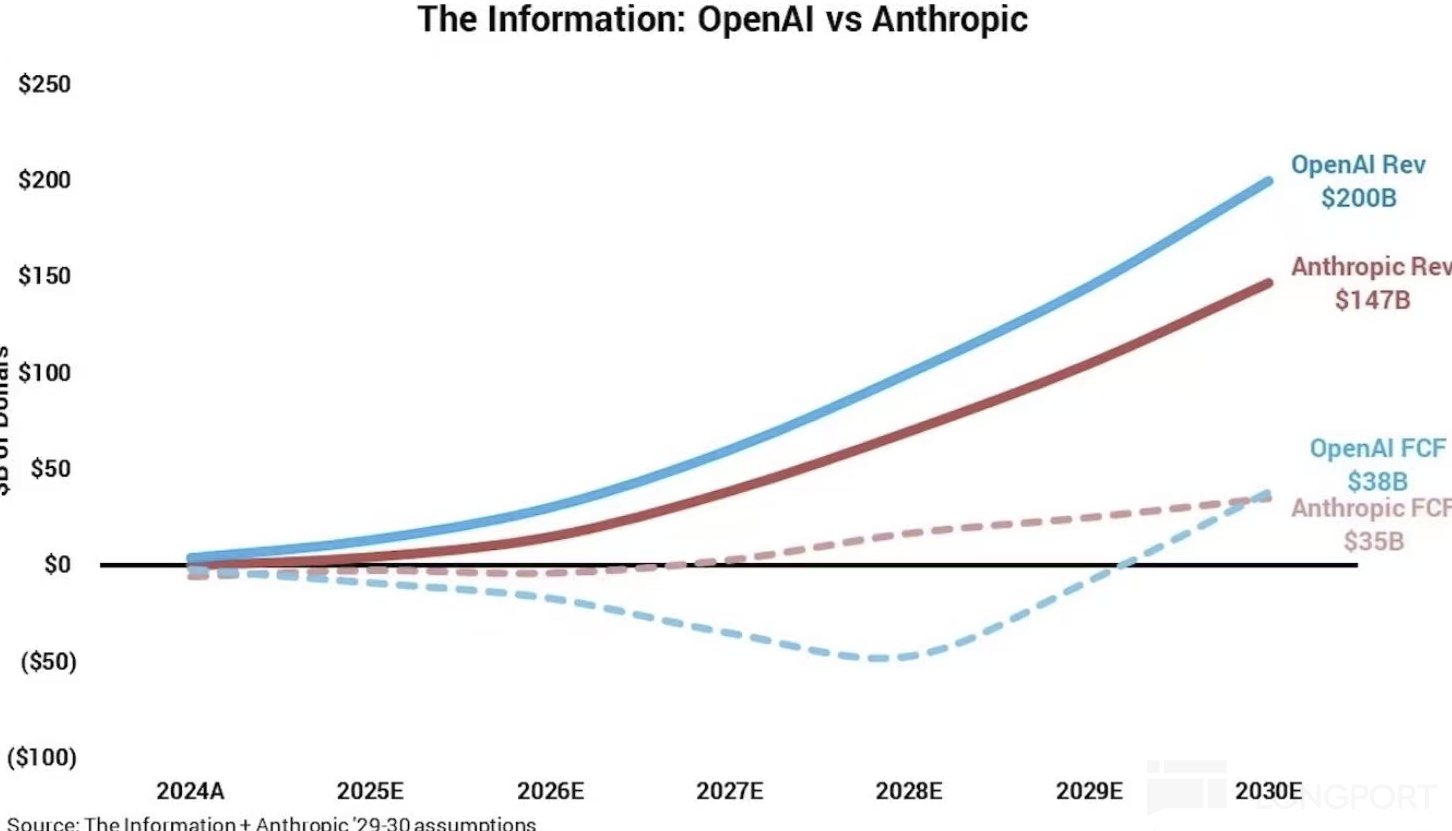

As the pioneer, OpenAI must honor the sizable capex commitments it made in 2025, which puts it under the most intense monetization pressure. Its 2026 revenue pace and mix are key trackers for the $2.4tn end-market value target.

Media reports suggest OpenAI was running at a $20bn annualized revenue pace as of October, with ~$13bn for full-year 2025. Revenue comes from consumer (ChatGPT app subscriptions) and enterprise (OpenAI API), with the mix shifting from ~70:30 to ~65:35 during 2025.

If 2026 revenue reaches only ~$30bn, it would fall well short of cloud payment commitments (an extra ~$50bn in 2026). The gap would persist.

1) Consumer internet: ChatGPT

OpenAI's only consumer product at scale is ChatGPT. Its other consumer products in testing (the browser, Sora, etc.) show weak retention and no clear traction yet.

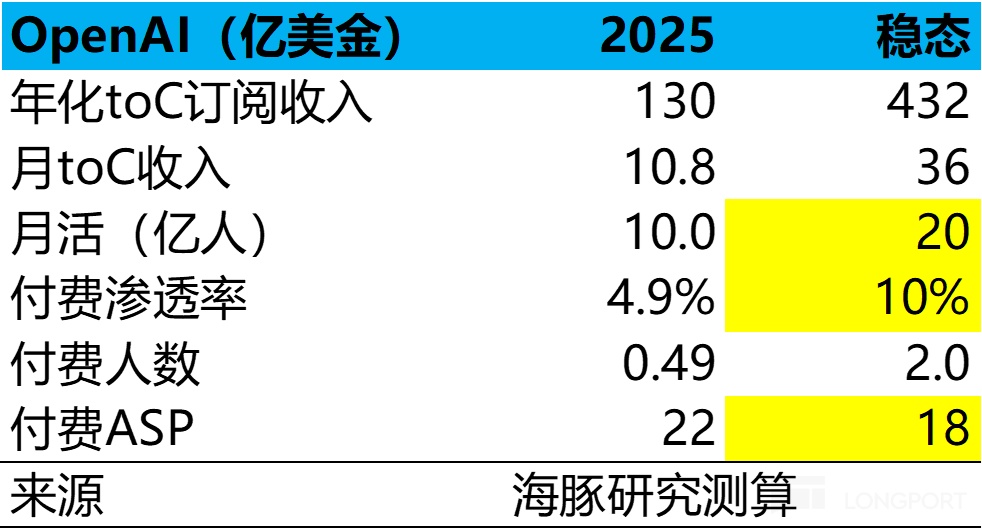

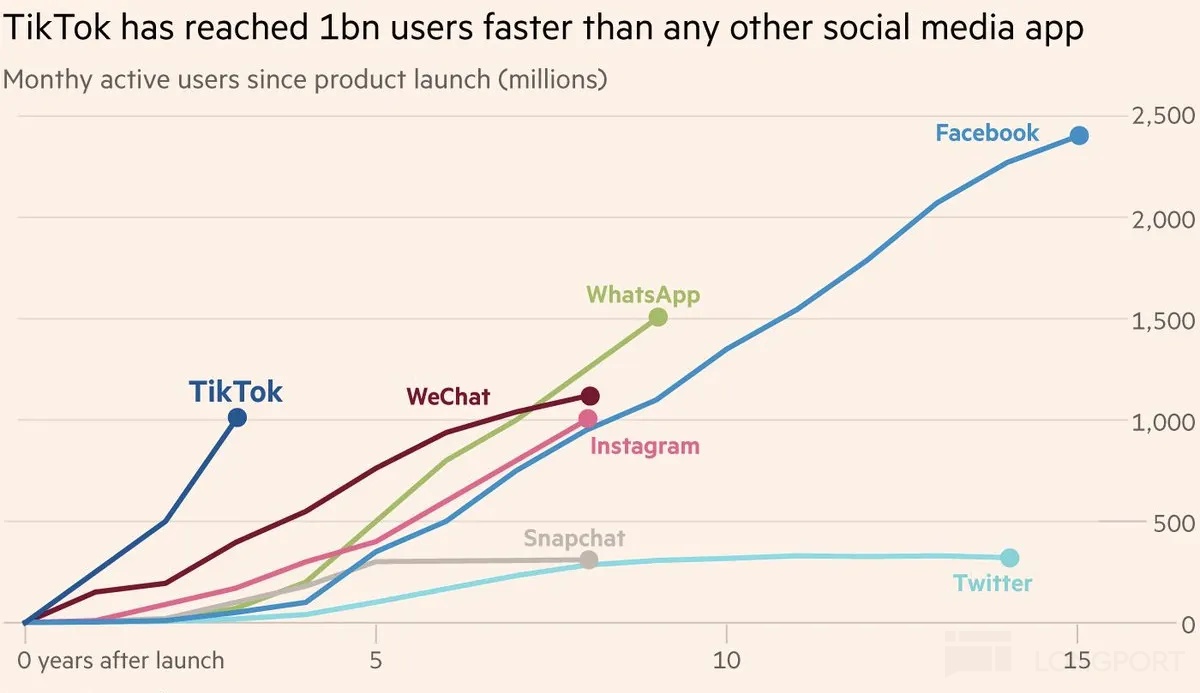

ChatGPT has roughly 0.8–0.9bn WAU, implying about $12bn of annualized revenue. If it ultimately reaches 2bn MAU (YouTube scale) and sustains a 10% paid conversion rate on the app, subscription revenue could settle around $45bn.

Pure ads look difficult since injecting ads into model outputs risks polluting results and eroding trust. Commerce‑based monetization is still experimental, with revenue hard to size.

Given current product evolution, the model skews toward subscriptions. If the end‑state resembles a subscription app and transaction‑based revenue contributes ~25% (by peer paid‑app benchmarks), implied transaction revenue could be ~$15bn.

That would put total consumer revenue (subscriptions + transactions) at around $60bn. The math rests on ChatGPT reaching 2bn users globally.
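The consumer sizing chains three steps: users, paying users, then the subscription/transaction split. This is a minimal sketch assuming a blended ARPPU of $18.75 a month; the text gives no price, and $18.75 is simply the value that reproduces its ~$45bn subscription figure from 2bn MAU and 10% conversion (ChatGPT Plus lists at $20 a month in the U.S.). `consumer_revenue_bn` and its parameters are hypothetical.

```python
# Size consumer revenue under the article's end-state assumptions.
# arppu_per_month is an assumption (implied, not stated in the text); other defaults come from the text.

def consumer_revenue_bn(mau_bn: float = 2.0,
                        paid_conversion: float = 0.10,
                        arppu_per_month: float = 18.75,
                        transaction_share: float = 0.25) -> dict[str, float]:
    """Annual subscriptions, transactions, and total consumer revenue in $bn.

    transaction_share is the transactions' share of *total* revenue, so
    subscriptions make up the remaining (1 - transaction_share).
    """
    payers_bn = mau_bn * paid_conversion
    subscriptions = payers_bn * arppu_per_month * 12
    total = subscriptions / (1 - transaction_share)
    return {"subscriptions": subscriptions,
            "transactions": total - subscriptions,
            "total": total}


base_case = consumer_revenue_bn()
at_list_price = consumer_revenue_bn(arppu_per_month=20.0)  # if every payer paid the $20 list price
print(base_case, at_list_price)
```

Because the 25% transaction share is measured against total revenue, ~$45bn of subscriptions grosses up to ~$60bn in total, leaving ~$15bn from transactions.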

History suggests that, excluding social, most standalone apps struggle to sustain hyper‑growth beyond 1bn users without a parent‑app funnel. Scaling a single app much further is hard.

If an ecosystem moat fails to form during the tech-lead window, and a rival with stronger distribution, such as Alphabet (GOOGL.US) with its Gemini iterations and vertically integrated cost advantages, reaches model parity, low-price competition could keep OpenAI's paid conversion stubbornly low. That would cap monetization.

A major change in 2025: Alphabet (GOOG.US), with Gemini and Nano Banana, is catching up fast and already threatens ChatGPT's edge in conversational AI.

4) What are the true unit economics of the AI internet?

Beyond revenue uncertainty, while mapping OpenAI’s economics and profit path, we hit a deeper issue that forces a rethink of consumer‑facing AI internet unit economics. Despite nearing $20bn annualized revenue, losses appear to steepen with scale.

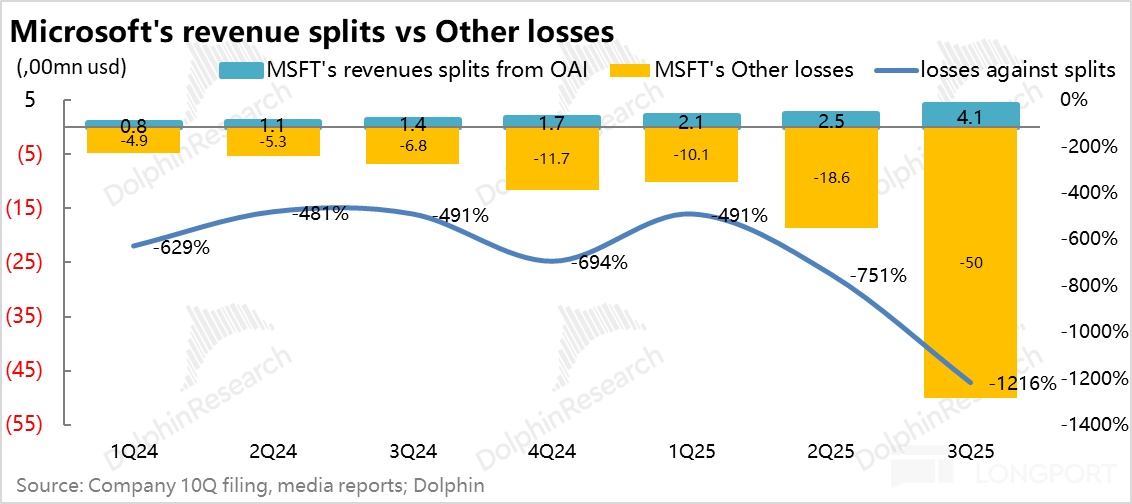

From media reports, we compiled the revenue Microsoft books as its take from OpenAI's revenue. Assuming a stable take rate on OpenAI revenue and, before Q3, a steady share of OpenAI's losses attributable to Microsoft's ~40% interest, comparing Microsoft's recognized revenue from OpenAI with its share of OpenAI's losses gives a lens on OpenAI's loss trajectory.

Placed side by side, the pattern looks like an anti-scale effect: as OpenAI's revenue grows, its loss ratio does not converge but worsens. That is the opposite of classic internet scaling.
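The back-out described above can be sketched as follows. This is a hypothetical illustration: `take_rate` is an assumed parameter (the text does not state the rate used), the ~40% interest is taken from the text, and the quarterly inputs are made-up round numbers chosen only to show the mechanics, not Microsoft's reported figures.

```python
# Back out OpenAI's implied revenue and loss from two Microsoft-side figures.
# All inputs are hypothetical; only the method follows the article's description.

def implied_openai(ms_revenue_from_oai: float, ms_loss_share: float,
                   take_rate: float, equity_interest: float = 0.40) -> dict[str, float]:
    """Return OpenAI's implied revenue, loss, and loss ratio (loss / revenue)."""
    revenue = ms_revenue_from_oai / take_rate  # Microsoft books take_rate x OpenAI revenue
    loss = ms_loss_share / equity_interest     # Microsoft books equity_interest x OpenAI loss
    return {"revenue": revenue, "loss": loss, "loss_ratio": loss / revenue}


# Made-up quarterly inputs in $bn: (Microsoft revenue from OpenAI, Microsoft share of losses).
hypothetical_quarters = [(0.4, 0.5), (0.6, 1.0), (0.8, 1.6)]
TAKE_RATE = 0.20  # assumption for illustration only

for ms_rev, ms_loss in hypothetical_quarters:
    q = implied_openai(ms_rev, ms_loss, TAKE_RATE)
    print(f"revenue {q['revenue']:.1f}bn, loss {q['loss']:.2f}bn, loss ratio {q['loss_ratio']:.0%}")
```

With these made-up inputs, implied revenue doubles while the loss ratio climbs rather than converging, which is the shape the text calls anti-scale.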

In the PC and mobile eras, services (even video, which is heavier on bandwidth, CPU, and storage) could scale on free usage early on, with no subscription required.

Free apps ramp user bases quickly, with advertisers and merchants footing the bill. That unlocked extreme traffic‑scale effects.

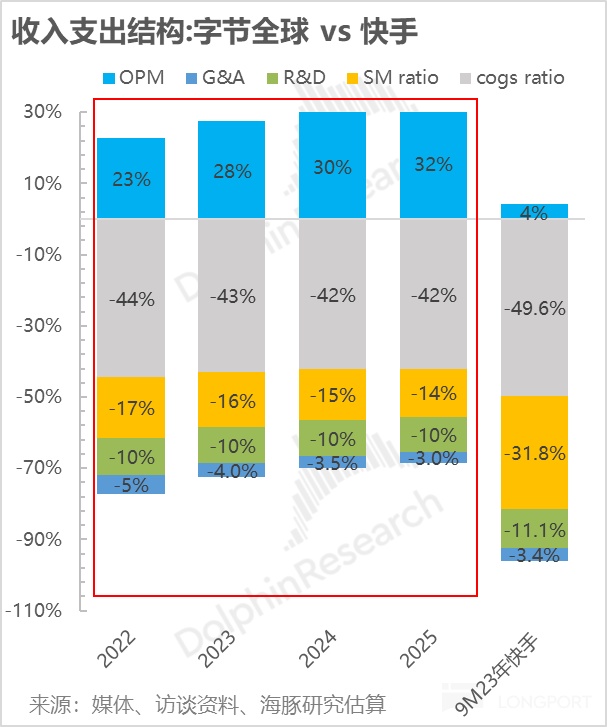

Short video, for instance, demands more storage and bandwidth than text, but can build massive users and time‑spent. High‑efficiency, multi‑channel monetization dilutes IT cost.

With diversified monetization, revenue outgrows cost and margin structure lifts — the magic of internet economics. Scale begets profitability.

In the legacy internet, a search query recombines a common knowledge base for display. The marginal cost of serving content is very low.

The factor stack was effectively CPU + fiber + base stations = traffic = digitization. After the 2000 bubble and multiple rounds of mobile buildout and telco consolidation, the stack commoditized and excess profits were arbitraged away.

In the AI internet, each Q&A is a personalized generation built on top of the underlying knowledge base. Every interaction invokes GPU compute, and longer context windows consume more tokens.

The core production mix has shifted to GPU + power = tokens = intelligent everything. GPU usage is heavy, supply concentrated, and pricing power high.

Through the lens of personalized Q&A, the AI internet shows an ‘anti-scale’ tendency: early user accumulation is unusually costly, with high upfront opex and capex.

Hence consumer AI needs subscriptions from the start, unlike Meta’s model of amassing global users with free access funded by ads. The old playbook does not port over cleanly.
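The cost asymmetry behind this can be sketched with two toy products. All figures are hypothetical: a legacy ad-funded app with near-zero marginal serving cost, and a chatbot where every active user, free or paid, burns tokens at an assumed $2 per million tokens while only paying users (at an assumed $20 a month) generate revenue. It illustrates one possible mechanism (payers shrinking as a share of a growing free base), not OpenAI's actual cost structure; the function names are illustrative.

```python
def inference_cost_per_user(queries: int, tokens_per_query: int, usd_per_m_tokens: float) -> float:
    """Monthly serving cost of one active user: every answer is generated, so cost tracks tokens."""
    return queries * tokens_per_query / 1_000_000 * usd_per_m_tokens


def monthly_gross_profit(users: float, revenue_per_user: float, cost_per_user: float) -> float:
    """Gross profit across the whole user base (revenue and cost averaged over all users)."""
    return users * (revenue_per_user - cost_per_user)


# Hypothetical legacy app: ad-funded, so every user monetizes; serving cost is near zero.
legacy = [monthly_gross_profit(u, revenue_per_user=0.50, cost_per_user=0.02) for u in (1e8, 1e9)]

# Hypothetical chatbot: only payers (assumed $20/month) bring revenue, but all users burn tokens.
ai_cost = inference_cost_per_user(queries=300, tokens_per_query=1_500, usd_per_m_tokens=2.0)
ai = [
    monthly_gross_profit(u, revenue_per_user=paid_share * 20.0, cost_per_user=ai_cost)
    for u, paid_share in ((1e8, 0.05), (1e9, 0.03))  # payers shrink as a share of a larger base
]
print(f"legacy: {legacy}, AI: {ai}")
```

In this toy setup the legacy app's gross profit scales with its user base, while the chatbot's flips from a small profit to a loss once payers slip from 5% to 3% of a much larger base.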

As OpenAI scales revenue while losses deepen, is this due to free users growing too fast, or a structural shift in production factors? Will consumer AI still enjoy the unbeatable scale effects of the PC/mobile eras?

This deserves deeper study, and Dolphin Research will continue to search for answers as AI economics evolve. We will keep updating our view.

2) Enterprise internet: model APIs

Turning from consumer internet to enterprise AI, OpenAI's B2B model is comparatively cleaner, with two revenue lines:

a) Enterprise subscriptions: ~5mn users at ~$22 per month implies ~$1.5bn annualized (akin to Office: employees use it, the enterprise pays).

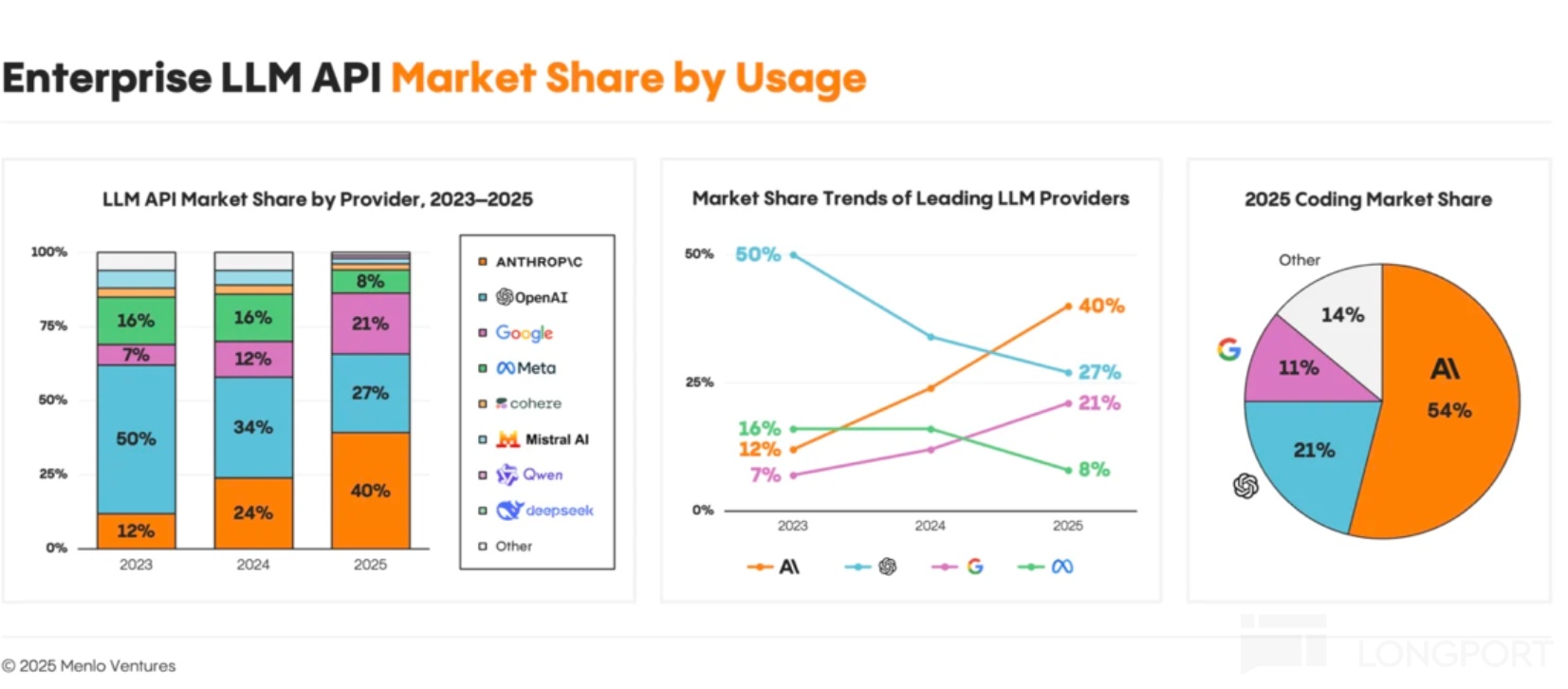

b) PaaS/API: model APIs distributed exclusively via Azure — we estimate ~$6bn annualized revenue. Anthropic’s API revenue is already running at ~$9bn annualized.

Competitive trends suggest Anthropic's share of model APIs rose rapidly in 2025 and has surpassed OpenAI's. OpenAI has ceded ground here.

Because API distribution runs through Microsoft, OpenAI must share API revenue with it, which makes this line a lower priority for OpenAI.

Still, this business has stronger economic certainty: enterprises have higher willingness to pay, pricing scales with token consumption, and input–output is easier to underwrite vs. consumer internet. On risk‑adjusted ROI, B2B is far more tractable.

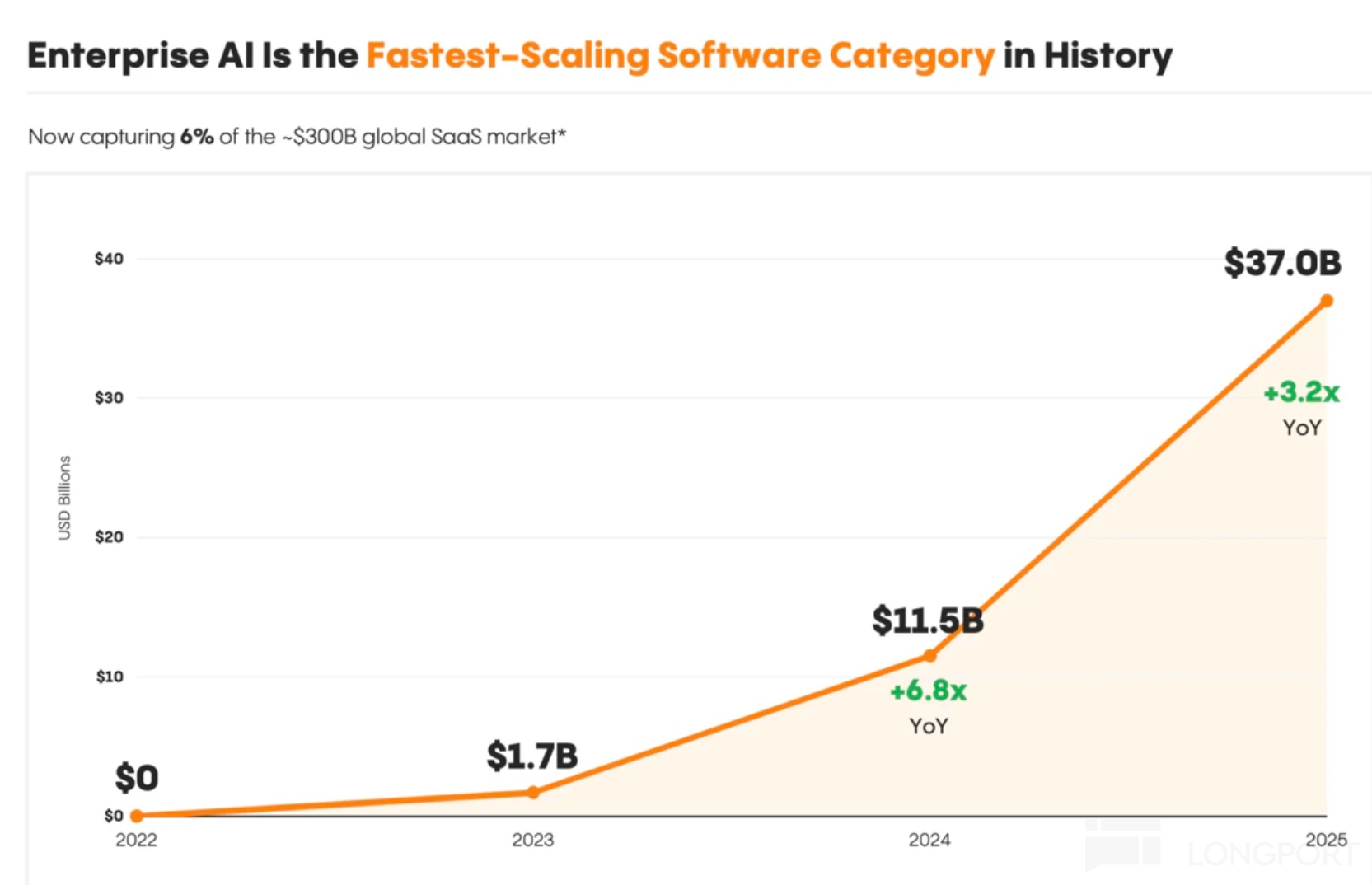

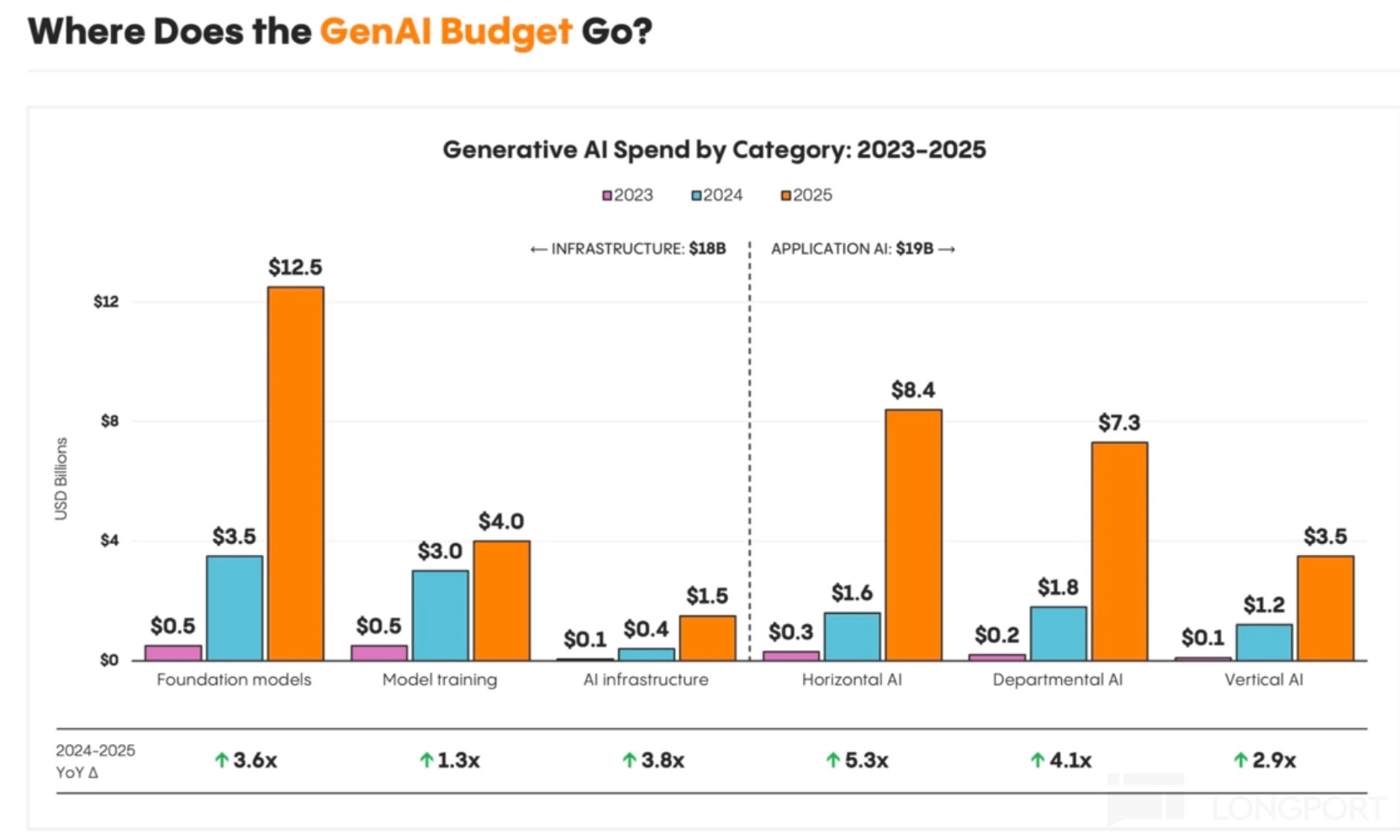

Industry-wide, per third-party data from Menlo Ventures, downstream third-party customers of AI cloud generated about $37bn of revenue in 2025, roughly 3.2x the prior year. Growth is off a small base but steep.

At the current slope, the overall B2B stack (foundation models + AI SaaS) could grow 150–200% YoY in 2026 to roughly $100bn, a ramp flattered by the small base.

Note: ‘Horizontal’ refers to general‑purpose workplace AI (ERP, Office, etc.); verticals include sectors like healthcare; departmental AI covers sales, finance, and other specific corporate functions.

In total, 2026 downstream monetization is estimated at ~$300bn on the consumer side (incremental revenue at U.S. internet giants) plus ~$100bn on B2B, or roughly $400bn of incremental revenue. That is the current ballpark.
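The 2026 tally can be cross-checked against the ROI hurdle. This is a minimal sketch using only figures from the text ($37bn of 2025 B2B revenue, 150–200% growth, ~$300bn consumer, ~$2.4tn annual requirement); the constant and function names are hypothetical, and the comparison is indicative only, since the $2.4tn is a steady-state requirement rather than a 2026 target.

```python
B2B_2025_BN = 37.0        # 2025 downstream B2B revenue (Menlo Ventures, per the text)
CONSUMER_2026_BN = 300.0  # incremental consumer-side revenue at U.S. internet giants
HURDLE_BN = 2_400.0       # steady-state annual end-market value requirement


def b2b_2026_bn(growth: float) -> float:
    """2026 B2B revenue if the 2025 base grows by `growth` (1.5 means +150%)."""
    return B2B_2025_BN * (1 + growth)


def hurdle_coverage(growth: float) -> float:
    """Share of the ~$2.4tn hurdle covered by 2026 consumer + B2B monetization."""
    return (CONSUMER_2026_BN + b2b_2026_bn(growth)) / HURDLE_BN


for g in (1.5, 2.0):
    print(f"+{g:.0%}: B2B ~${b2b_2026_bn(g):.0f}bn, coverage {hurdle_coverage(g):.0%}")
```

The 150–200% range gives roughly $92–111bn of B2B revenue, which the text rounds to ~$100bn; the combined ~$400bn covers only about a sixth of the hurdle.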

In consumer especially, competition is about not only technology but also ecosystem, users, channels, capital, and capacity, all under tight time constraints. With models leapfrogging each other on a quarterly cadence, the birth of a brand-new consumer internet giant looks unlikely.

Hence the ultimate bearer of years of heavy AI capex likely shifts to device‑side AI and the new IoT enterprise opportunities it enables. That is where the baton may pass.

5) Device-side AI: the real bearer of the $2.4tn challenge?

Two device‑side tracks stand out: labor‑substitution tools (AI robots) and AI consumer electronics. The latter resembles smartphones in the mobile era by creating new demand.

a) AI robots

Today they face a classic chicken-and-egg problem: scarce data and limited utility hold each other back.

Intelligence: the internet's historical record holds visual and audio data but little tactile data. Compared with autos, robots face a much harder raw-data problem and need hardware shipments in the field to collect it.

Hardware shipments: without sufficient intelligence, robots offer limited utility beyond novelty and take up space. Real‑world usefulness is thin.

Unlike phones and cars, which shipped at scale pre‑‘smart’ (phones offered communications; cars offered transport), robots lack a pre‑smart use case that scales. That makes cold‑start much harder.

Phones and cars could ship at scale first, collect behavior/context data, and iterate intelligence via OTA. Robots, with low shipments, will take much longer to gather tactile data for training.

b) New AI hardware: vying for the next interface

Near term, AI consumer electronics look more viable than robots. AI glasses, AI toys, AI rings, bands, earbuds, and AI-first phones (e.g., Doubao's AI phone) are already launching through late 2025.

Takeaways: three 2026 focus lines (device-side AI, enterprise AI, and compute cost-down)

After three years of AI since late 2022 and a multi-year run-up centered on compute, 2026 should pivot toward opportunities in a regime of falling compute costs. At the same time, with internet giants facing a spend-revenue mismatch, look for device-side AI commercialization and for enterprise AI opportunities as agentic tools challenge traditional SaaS.

In 2026, Dolphin Research will deep‑dive along these three vectors. Stay tuned.

<End>

Past pieces by Dolphin Research:

‘AI’s Original Sin: Is NVIDIA the AI market’s addictive stimulant?’

‘All Tied to the Mast: Is OpenAI a pearl or a demon pill?’

‘From AI darling to money‑burner overnight — can Meta fight back?’

Risk disclosure and statement: Dolphin Research disclaimer and general disclosures

The copyright of this article belongs to the original author/organization.

The views expressed herein are solely those of the author and do not reflect the stance of the platform. The content is intended for investment reference purposes only and shall not be considered as investment advice. Please contact us if you have any questions or suggestions regarding the content services provided by the platform.